Project Background

UCL MotionInput is a pioneering suite of touchless computing technologies developed through collaboration between UCL Computer Science students and industry partners such as Intel, Microsoft, IBM, Google, the NHS (including Great Ormond Street Hospital for Children's DRIVE unit), and the UCLH Institute for Child Health. Motion Input V1 was released in January 2021, and the software is currently in its third generation of development. The package enables touchless computer interaction across a wide spectrum of human movement using nothing more than a standard webcam and PC. It thereby acts as a replacement for traditional input devices such as keyboards, mice, and joypads.

By detecting movements of the hands, head, eyes, mouth, and even full-body joints, Motion Input offers an intuitive and accessible means of interfacing with digital devices. Our focus, however, is solely on the eye module, as showcased on the MI3.2 website by Microsoft. Serving as part two of Facial Navigation, the existing eye module detects the direction of eye gaze so that users can control the direction in which the mouse cursor moves. It works in a similar manner to V3.2’s nose module (part one of Facial Navigation), which provides computer control akin to a virtual joystick, but with the central axis situated around the eyes instead of the nostrils. It also incorporates facial switches and speech command recognition to enable reliable event triggering.

As a team, we will now take the lead on refining and advancing the eye module from V3.2 for the upcoming V3.4 release, where it will take on its own form as an EyeGaze Navigator. Our primary stakeholders assisting us in this undertaking are Prof Dean Mohamedally, Dr Atia Raiq, Dr Mick Donegan/Harry Nelson, Jarnail Chudge, and Wanda Gregory, alongside those at SpecialEffect and the occupational therapists at Richard Cloudesley School.

Core Objectives

Through an initial meeting with our IXN project mentor and a review of our initial project brief, we established a set of core project aims that are key to the success of the EyeGaze Navigator. We defined these before researching the existing MI eye gaze repository and codebase.

Eye Detection Algorithm

Central to our system is the implementation of an advanced eye detection algorithm. This algorithm is the linchpin, allowing our software to accurately identify the user's eyes and initiate the gaze tracking process.

Gaze Estimation

With the eyes detected, our next step is to develop precise algorithms for gaze estimation. This technology predicts the user's gaze direction, providing the necessary data to interpret intent and focus within the digital environment.
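As a rough illustration of what gaze estimation can involve, the sketch below computes where the iris sits within the eye opening, horizontally and vertically. It assumes that 2D landmark coordinates for the iris centre, eye corners, and eyelids are already available from some face-landmark detector. The `Point` type and the `gaze_ratio` helper are hypothetical names introduced for this example and do not come from the MotionInput codebase.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A 2D landmark in image coordinates (hypothetical helper type)."""
    x: float
    y: float


def _clamp01(value: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def gaze_ratio(iris: Point, left_corner: Point, right_corner: Point,
               top_lid: Point, bottom_lid: Point) -> tuple[float, float]:
    """Return the iris position within the eye as (horizontal, vertical).

    Each value lies in [0, 1]: 0.5 means the iris is centred on that axis,
    0 means it is at the left corner / top lid, 1 at the right corner /
    bottom lid. Assumes image coordinates where y grows downwards.
    """
    width = right_corner.x - left_corner.x
    height = bottom_lid.y - top_lid.y
    if width <= 0 or height <= 0:
        raise ValueError("eye landmarks are degenerate or in the wrong order")
    horizontal = (iris.x - left_corner.x) / width
    vertical = (iris.y - top_lid.y) / height
    return _clamp01(horizontal), _clamp01(vertical)
```

A ratio like this is only a starting point: it is sensitive to head pose and eyelid position, which is one reason calibration is needed in practice.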

Cursor Control

Utilizing the gaze estimation, we achieve intuitive cursor control. This function translates the user's gaze into cursor movements across the screen, offering a seamless interaction that mirrors natural eye movement.
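One possible mapping from gaze to cursor movement is the joystick style described for the existing module, where the gaze offset from a calibrated centre sets the direction of travel. The sketch below is a minimal version of that idea. The function name `joystick_step`, the `dead_zone` and `speed` parameters, and their default values are assumptions made for illustration, not settings taken from MotionInput.

```python
def _clamp(value: int, low: int, high: int) -> int:
    """Limit a value to the inclusive range [low, high]."""
    return max(low, min(high, value))


def joystick_step(gaze_x: float, gaze_y: float,
                  cursor: tuple[int, int], screen: tuple[int, int],
                  dead_zone: float = 0.1, speed: float = 20.0) -> tuple[int, int]:
    """Move the cursor one step in the direction of the gaze offset.

    gaze_x and gaze_y are offsets from the calibrated centre in [-1, 1].
    Offsets smaller than dead_zone are ignored so that the cursor rests
    when the user looks roughly straight ahead.
    """
    def axis_velocity(offset: float) -> float:
        if abs(offset) < dead_zone:
            return 0.0
        return offset * speed  # pixels per step, proportional to the offset

    width, height = screen
    new_x = _clamp(round(cursor[0] + axis_velocity(gaze_x)), 0, width - 1)
    new_y = _clamp(round(cursor[1] + axis_velocity(gaze_y)), 0, height - 1)
    return new_x, new_y
```

The dead zone trades responsiveness for stability: a larger value makes the cursor easier to hold still but requires a more pronounced eye movement to start moving it.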

Click Trigger

An essential component of our system is the click trigger mechanism. This feature allows users to execute clicks based on specific eye gestures or dwell times, enabling complete mouse functionality without the need for traditional input devices.
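To make the dwell-time idea concrete, the sketch below fires a click once the cursor has stayed within a small radius for a set duration. The class name `DwellClicker`, the parameters `radius_px` and `dwell_s`, and their default values are hypothetical choices for this example. Timestamps are passed in explicitly so that the logic does not depend on a particular clock.

```python
import math


class DwellClicker:
    """Fire one click when the cursor rests near a point for long enough."""

    def __init__(self, radius_px: float = 40.0, dwell_s: float = 1.0) -> None:
        self.radius_px = radius_px
        self.dwell_s = dwell_s
        self._anchor = None   # where the current dwell started
        self._start = None    # when the current dwell started (seconds)
        self._fired = False   # whether this dwell has already clicked

    def update(self, x: float, y: float, t: float) -> bool:
        """Feed one cursor sample at time t; return True if a click fires."""
        moved_away = (
            self._anchor is None
            or math.dist(self._anchor, (x, y)) > self.radius_px
        )
        if moved_away:
            # Start a new dwell at the current position.
            self._anchor = (x, y)
            self._start = t
            self._fired = False
            return False
        if not self._fired and t - self._start >= self.dwell_s:
            self._fired = True  # click only once per dwell
            return True
        return False
```

In this sketch the user has to move the cursor outside the radius before another click can fire, which avoids repeated clicks while they keep looking at the same target.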

Requirement Gathering

To ensure the comprehensive development of our eye gaze project, we implemented various methods to gather essential requirements, engaging with experts and stakeholders to refine our project’s objectives and design.

One of our initial steps was meeting with our IXN project mentor, which provided us with crucial clarification on our project brief. This guidance was instrumental in outlining the primary goals and expectations for our project, ensuring we remained aligned with the broader objectives set forth by our academic and research community.

Further enriching our understanding, we had the opportunity to meet with Anelia Gaydardzhieva, the head of operations at Motion Input Games. During this meeting, we gained valuable insights into the existing MotionInput v3.2 repository. Anelia provided an in-depth overview of the general structure, how the eye module integrates within it, and identified key areas for improvement. This discussion was pivotal in highlighting the module's weak points, offering us a clear direction on where our efforts could deliver the most impact.

A visit to SpecialEffect, a charity known for its work in customizing gaming technologies for individuals with disabilities, proved exceptionally enlightening. Meeting with Mick Donegan and the entire team allowed us to understand the real-world application of eye-tracking software and the significant costs associated with providing these technologies to users. This encounter helped us grasp the financial constraints that could limit the reach of our project, emphasizing the need for cost-effective solutions.

Our interaction with Harry Nelson, a tech specialist at SpecialEffect, was particularly beneficial. Harry reviewed our initial set of requirements, offering feedback on their alignment with user needs and overall effectiveness. His advice on prioritizing accuracy over aesthetic elements like interfaces and icons during the setup process was invaluable. This feedback encouraged us to focus on functionality that directly benefits the user, such as precision in tracking and ease of use.

Additionally, our visits to Richard Cloudesley School were instrumental in understanding the user's perspective more deeply. Conversations with occupational therapists allowed us to comprehend the challenges faced by our target user base. By building personas based on these interactions, we were able to keep user needs at the forefront of our development process, ensuring that our project objectives were closely aligned with what users desired most.

Finally, to ensure alignment with our requirements and the satisfaction of our stakeholders, we devised a strategy consisting of five key methods to guide our project's progression. By integrating the following methods with our existing efforts, we can ensure a well-rounded and user-centred approach, ultimately leading to a more effective and impactful eye gaze solution.

- User Surveys and Questionnaires: Distribute surveys to potential users and stakeholders to gather quantitative data on their needs, preferences, and pain points.

- Prototype Testing: Develop a working prototype of the eye gaze system and conduct usability testing sessions with students at Richard Cloudesley School. Feedback from these sessions can highlight areas of improvement and refine the system’s design.

- Focus Groups: Conduct focus group discussions with potential users, including the occupational therapists who work with students with disabilities, to explore their experiences with existing technologies and gather suggestions for new features.

- Competitive Analysis: Analyse existing eye gaze and motion input technologies to identify gaps in the market and opportunities for innovation.

- Expert Panels: Organize discussions with experts in assistive technologies, user experience design, and disability rights to ensure the project meets technical standards and addresses the nuanced needs of all users.

User Interviews

To understand user needs, we conducted semi-structured interviews with occupational therapists at Richard Cloudesley School and other potential users. This approach allowed us to ask consistent questions while leaving room to explore a topic in more depth when a participant's responses called for it.

Q1: If you have ever used an eye tracker before, what are your opinions on them?

- “Yes, the first I found it very hard and confusing. But now I just needed practise.”

- “No, it’s not on my laptop.”

Q2: What uses do you plan on using an EyeGaze Navigator for?

- “I really want to be able to play games by myself. Usually, my brothers have to help me use the Xbox or set up the game on my computer for me.”

- “I like watching YouTube, so I just want to be able to quickly pick what I want to watch next.”

- “I like games and I use my laptop to get schoolwork done so it can help me do that.”

Q3: As an occupational therapist, what features or design elements should we steer clear of in our software to ensure optimal ease of use for individuals with conditions like cerebral palsy?

- "Incorporate a straightforward, guided calibration process that doesn't require precise movements."

- "Limit the number of steps needed to start or modify settings, making it easier for users with cognitive challenges."

- “Avoid small buttons, or anything you need the user to view or read make sure it is bright and bold.”

Personas

Personas are essential in our project, especially since we're developing an eye gaze navigator. Each persona represents a distinct user profile. These personas help us understand the unique needs and motivations of our users, enabling us to tailor our design to better serve them. Additionally, personas guide us in prioritizing our focus by highlighting assumptions about user behaviour and the tasks they need to perform with our eye gaze navigator. Ultimately, personas play a crucial role in ensuring that our project meets the diverse needs of our user base.

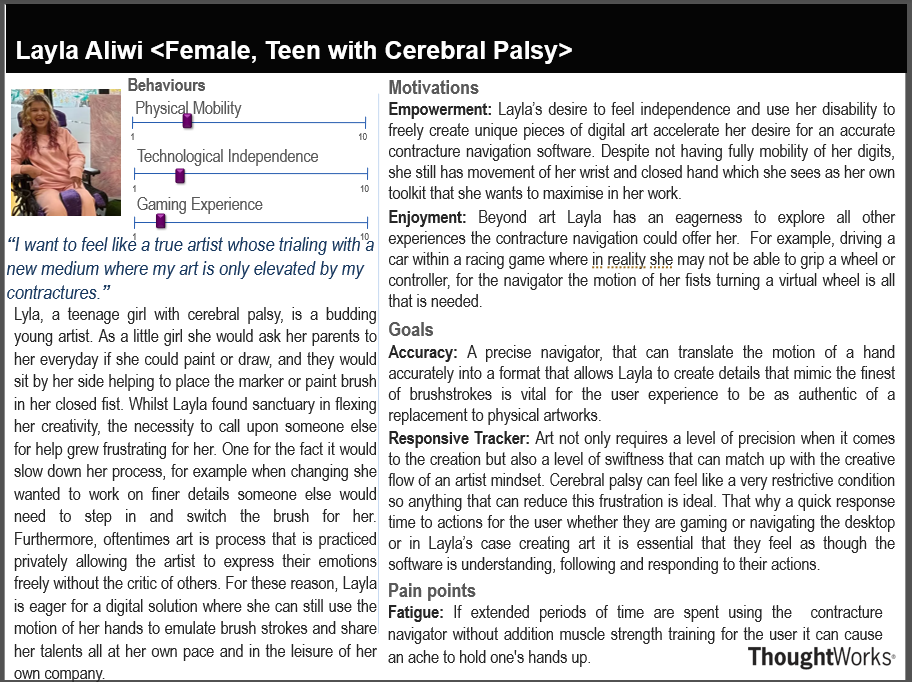

Persona 1

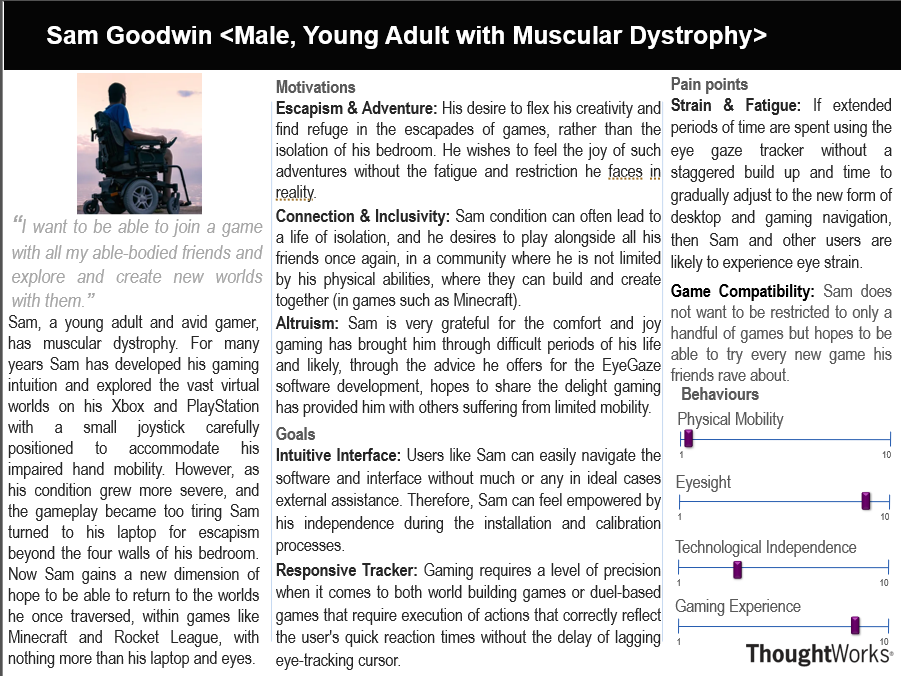

Persona 2

Non-Functional Requirements

After analysing the MotionInput v3.2 codebase and holding discussions with our clients, we drew up a final set of requirements at the start of our project development phase, which focused on a complete re-engineering of the old codebase:

- Usability: Beyond being intuitive, the program should be user-friendly and easy to navigate for individuals with varying levels of tech-savviness and abilities.

- Accessibility: The software should accommodate users with different needs and abilities, including those with physical or cognitive impairments, ensuring that everyone can use the technology effectively.

- Reliability: The eye-gaze tracking should perform consistently under different conditions and use cases, maintaining accuracy and responsiveness to ensure effective mouse control.

- Performance: The system should have minimal lag between the user's eye movements and the cursor response to ensure a seamless and efficient user experience.

- Scalability: The software should be capable of supporting a range of different hardware setups, screen resolutions, and user environments, ensuring consistent functionality across diverse configurations.

- Security: User data, particularly sensitive inputs captured by the eye tracker, should be securely managed and protected against unauthorized access or breaches.

- Privacy: The program must ensure that personal data, especially biometric data from eye tracking, is collected, stored, and processed in compliance with privacy laws and standards.

- Customizability: Users should have the option to adjust settings, such as calibration sensitivity, to suit their individual preferences and needs, enhancing the overall user experience.

- Interoperability: The software should be compatible with various operating systems and work seamlessly with other applications or assistive technologies.

- Maintainability: The code should be well-documented, structured, and testable, facilitating easy updates, bug fixes, and feature enhancements.

MoSCoW Requirements

*short description*

01 Must Have

- Indicator to direct user into ideal position for EyeGaze navigator use

- More calibration points than the current single central-point calibration

- Enable navigation of office apps and browser apps using EyeGaze and Dwell features

- De-noise function to reduce cursor jumpiness and inaccuracy

- Clear documentation
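As an illustration of the de-noise requirement above, the sketch below smooths raw cursor positions with an exponential moving average. This is one common smoothing technique chosen for the example, not necessarily the filter used in the project. The class name `CursorSmoother` and the `alpha` parameter are hypothetical.

```python
class CursorSmoother:
    """Reduce cursor jitter with an exponential moving average."""

    def __init__(self, alpha: float = 0.3) -> None:
        # alpha close to 0 gives heavy smoothing but more lag;
        # alpha equal to 1 passes the raw position through unchanged.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in the range (0, 1]")
        self.alpha = alpha
        self._state = None  # last smoothed (x, y), or None before first sample

    def update(self, x: float, y: float) -> tuple[float, float]:
        """Feed one raw cursor position and return the smoothed position."""
        if self._state is None:
            self._state = (float(x), float(y))
        else:
            sx, sy = self._state
            self._state = (
                sx + self.alpha * (x - sx),
                sy + self.alpha * (y - sy),
            )
        return self._state
```

The choice of `alpha` interacts with the performance requirement: stronger smoothing reduces jumpiness but adds lag between eye movement and cursor response, so the value would need tuning with users.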

02 Should Have

- If the user's head moves involuntarily and then returns to position, the navigator should remain usable.

- Pause/Rest button for the user to temporarily deactivate EyeGaze cursor control.

03 Could Have

- Implement an MFC interface to allow for user customisation.

- Allow user to select between shapes and colouring that will best guide them through calibration

- Allow user to adjust cursor size to their preference.

- Migrate our solution into Motion Input’s existing EyeGaze module

- Be integrated with other Motion Input modules such as speech commands

04 Won't Have