Unit Testing

-

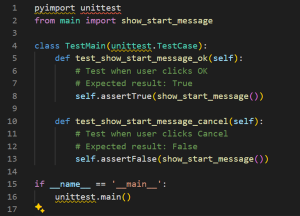

Main.py

show_start_message():

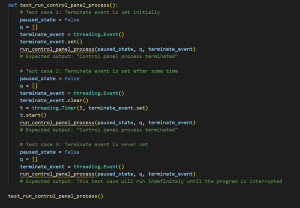

run_control_panel_process(): The test_run_control_panel_process function evaluates how run_control_panel_process behaves in three termination scenarios. The first case confirms that the process terminates immediately when the termination event is already set before it starts. The second case checks that the process ends when the termination event is set after a delay. The third case checks that the process keeps running while the termination event remains unset; because a test cannot wait forever, this can only confirm that the process is still running after a finite wait. Together, these cases cover the expected termination behavior of run_control_panel_process.
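The three cases above can be sketched with Python's unittest as follows. This is a minimal sketch, not the project's test: it assumes run_control_panel_process takes a single threading.Event and loops until that event is set, and the function body here is a hypothetical stand-in. If the real function runs in a separate process, multiprocessing.Process and multiprocessing.Event can replace the thread and event, since both offer the same is_alive(), join(timeout) and set() calls used here.

```python
import threading
import time
import unittest


def run_control_panel_process(termination_event, poll_interval=0.01):
    """Hypothetical stand-in: keep working until termination_event is set."""
    while not termination_event.is_set():
        time.sleep(poll_interval)  # placeholder for one iteration of real work


def start_worker(event):
    # Daemon thread, so a loop that never stops cannot keep the test run alive.
    worker = threading.Thread(
        target=run_control_panel_process, args=(event,), daemon=True
    )
    worker.start()
    return worker


class TestRunControlPanelProcess(unittest.TestCase):
    def test_stops_immediately_when_event_already_set(self):
        event = threading.Event()
        event.set()
        worker = start_worker(event)
        worker.join(timeout=1.0)
        self.assertFalse(worker.is_alive())

    def test_stops_when_event_set_after_delay(self):
        event = threading.Event()
        worker = start_worker(event)
        time.sleep(0.1)
        self.assertTrue(worker.is_alive())  # still running before the event
        event.set()
        worker.join(timeout=1.0)
        self.assertFalse(worker.is_alive())

    def test_keeps_running_while_event_unset(self):
        event = threading.Event()
        worker = start_worker(event)
        # A test cannot wait forever, so check it is still alive after a finite wait.
        worker.join(timeout=0.3)
        self.assertTrue(worker.is_alive())
        event.set()  # let the thread finish so it does not leak into other tests
        worker.join(timeout=1.0)
```

The tests can be run with `python -m unittest`. Using join(timeout=...) rather than a bare join() keeps a faulty loop from hanging the whole test run.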

-

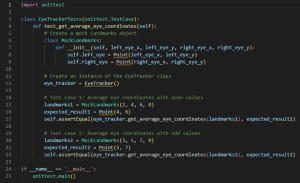

EyeTracker.py

get_average_eye_coordinates(): The EyeTrackerTests unit test checks the get_average_eye_coordinates method of the EyeTracker class in two scenarios. Both use a MockLandmarks instance that supplies fixed left and right eye coordinates, and both assert that the method returns the midpoint between the two eyes.

- Test Case 1, even coordinate values: the left eye is at (2, 4) and the right eye at (6, 8), so the expected midpoint is ((2 + 6) / 2, (4 + 8) / 2) = (4, 6).

- Test Case 2, odd coordinate values: the left eye is at (3, 5) and the right eye at (7, 9), so the expected midpoint is ((3 + 7) / 2, (5 + 9) / 2) = (5, 7).

Together, these cases check that the method computes the midpoint correctly whether the input coordinates are even or odd, which matters because eye tracking depends on precise coordinates.
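A minimal sketch of these two test cases follows. The shape of MockLandmarks (one Point per eye) and the free function get_average_eye_coordinates are assumptions for illustration; in the project the method belongs to the EyeTracker class and works on real face landmarks. A third, hypothetical case is included because both original cases happen to average to whole numbers, so neither exercises a fractional midpoint.

```python
import unittest
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float


class MockLandmarks:
    """Hypothetical test double holding a fixed (x, y) position for each eye."""

    def __init__(self, left_eye, right_eye):
        self.left_eye = Point(*left_eye)
        self.right_eye = Point(*right_eye)


def get_average_eye_coordinates(landmarks):
    """Assumed behavior: return the midpoint between the two eyes."""
    return (
        (landmarks.left_eye.x + landmarks.right_eye.x) / 2,
        (landmarks.left_eye.y + landmarks.right_eye.y) / 2,
    )


class EyeTrackerTests(unittest.TestCase):
    def test_even_coordinates(self):
        landmarks = MockLandmarks((2, 4), (6, 8))
        self.assertEqual(get_average_eye_coordinates(landmarks), (4, 6))

    def test_odd_coordinates(self):
        landmarks = MockLandmarks((3, 5), (7, 9))
        self.assertEqual(get_average_eye_coordinates(landmarks), (5, 7))

    def test_fractional_midpoint(self):
        # Both cases above average to whole numbers; this one does not.
        landmarks = MockLandmarks((1, 2), (2, 3))
        self.assertEqual(get_average_eye_coordinates(landmarks), (1.5, 2.5))
```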

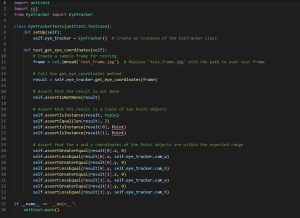

get_eye_coordinates(): This test in the EyeTrackerTests class evaluates the get_eye_coordinates method of the EyeTracker class. It creates an EyeTracker instance and loads a test image, 'test_frame.jpg', on which to run eye detection. It then makes three assertions:

- the method returns a result (not None), so eye positions were found;

- the result is a tuple of two Point objects, one for the left eye and one for the right eye, confirming that the method locates each eye separately;

- both coordinates fall within the expected range of the camera's field of view, a basic sanity check on their values.

Through these checks, the test verifies that get_eye_coordinates reliably detects eye positions in an image frame, which the rest of the EyeTracker depends on.
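The three assertions can be gathered into one helper, sketched below. The Point type and the helper name is_valid_eye_result are hypothetical, and the sketch assumes coordinates are in pixels; if the real method returns normalized coordinates, the bounds would be 0 to 1 instead of the frame width and height.

```python
from typing import NamedTuple


class Point(NamedTuple):
    x: float
    y: float


def is_valid_eye_result(result, frame_width, frame_height):
    """Apply the three checks described above to a get_eye_coordinates result.

    1. result is not None (eyes were found);
    2. result is a tuple of exactly two Point objects (left eye, right eye);
    3. both points lie inside the frame.
    """
    if result is None:
        return False
    if not isinstance(result, tuple) or len(result) != 2:
        return False
    if not all(isinstance(p, Point) for p in result):
        return False
    return all(0 <= p.x < frame_width and 0 <= p.y < frame_height for p in result)


# In the real test (requires OpenCV and the project's EyeTracker):
#   frame = cv2.imread("test_frame.jpg")
#   height, width = frame.shape[:2]
#   assert is_valid_eye_result(tracker.get_eye_coordinates(frame), width, height)
```

Note that a single Point is itself a two-element tuple, which is why the helper also checks that each element is a Point rather than relying on the length check alone.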

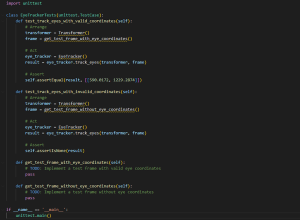

track_eyes(): Two tests evaluate the track_eyes method of the EyeTracker class: one with a frame that contains eyes and one with a frame that does not.

- test_track_eyes_with_valid_coordinates prepares a test Transformer object and a test frame containing eyes, then calls track_eyes with these inputs. It asserts that the result equals the expected coordinates, [590.0172, 1229.2874], confirming that the method tracks the eyes when given valid input.

- test_track_eyes_with_invalid_coordinates uses the same setup but with a test frame that contains no eyes. It asserts that track_eyes returns None, confirming that the method correctly handles frames in which no eye features can be identified.

Together, the tests check that track_eyes responds appropriately to both kinds of input.
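A sketch of both tests using unittest.mock is shown below. The EyeTracker class here is a hypothetical stand-in: detect_eye_midpoint and transformer.transform are assumed names, not the project's API, and the frames are placeholder strings because the detector is patched out. Since the expected coordinates are floating-point values, the sketch compares them with assertAlmostEqual rather than exact equality, which is more robust to tiny numerical differences.

```python
import unittest
from unittest import mock


class EyeTracker:
    """Hypothetical stand-in with the structure assumed for the real class."""

    def detect_eye_midpoint(self, frame):
        # The real implementation would run face-landmark detection here.
        raise NotImplementedError

    def track_eyes(self, frame, transformer):
        eye_point = self.detect_eye_midpoint(frame)
        if eye_point is None:
            return None  # no eyes in the frame, so there is nothing to map
        return transformer.transform(eye_point)


class TrackEyesTests(unittest.TestCase):
    def setUp(self):
        self.tracker = EyeTracker()
        self.transformer = mock.Mock()
        self.transformer.transform.return_value = [590.0172, 1229.2874]

    def test_track_eyes_with_valid_coordinates(self):
        with mock.patch.object(self.tracker, "detect_eye_midpoint", return_value=(0.42, 0.51)):
            result = self.tracker.track_eyes("frame-with-eyes", self.transformer)
        self.transformer.transform.assert_called_once_with((0.42, 0.51))
        self.assertEqual(len(result), 2)
        # Compare floats with a tolerance instead of exact equality.
        for got, expected in zip(result, [590.0172, 1229.2874]):
            self.assertAlmostEqual(got, expected, places=4)

    def test_track_eyes_with_invalid_coordinates(self):
        with mock.patch.object(self.tracker, "detect_eye_midpoint", return_value=None):
            result = self.tracker.track_eyes("frame-without-eyes", self.transformer)
        self.assertIsNone(result)
        self.transformer.transform.assert_not_called()
```

Checking that transform was not called in the second test confirms that the method stops early instead of mapping a missing eye position.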

-

ApplicationOverlay.py

ApplicationOverlay.py is responsible for handling the interactive portion of the application's overlay through methods like:

- on_press():

- on_move():

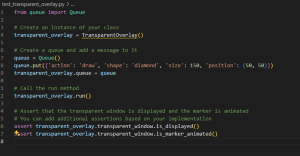

- run(): This test checks the TransparentOverlay class, which manages a transparent overlay on the screen. It initializes the overlay, creates a message queue, and adds a message instructing the overlay to draw a diamond-shaped marker at a given position and size. It then hands the queue to the overlay by setting the overlay's queue attribute, and runs the overlay to observe its behavior. Assertions verify that the marker is displayed on the transparent window and animates as expected, confirming that the overlay renders queued instructions correctly.
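The queue-driven flow described above can be sketched without a GUI, as shown below. HeadlessOverlay and the message format (a dict with action, x, y and size keys) are hypothetical; the real TransparentOverlay draws on a window, whereas this stand-in records what it would draw so the result of queued messages can be asserted directly. Visual aspects such as the animation would still need a display to verify.

```python
import queue


class HeadlessOverlay:
    """Hypothetical, GUI-free stand-in for TransparentOverlay.

    Rather than drawing on a transparent window, it records what it would
    draw, so the effect of queued messages can be checked with assertions.
    """

    def __init__(self):
        self.queue = None  # assigned by the caller, as in the test described above
        self.drawn = []

    def run(self):
        if self.queue is None:
            return
        # Drain pending messages without blocking. A real overlay would do this
        # periodically inside its GUI event loop instead of returning.
        while True:
            try:
                message = self.queue.get_nowait()
            except queue.Empty:
                return
            if message.get("action") == "draw_diamond":
                self.drawn.append(("diamond", message["x"], message["y"], message["size"]))


# Usage mirroring the test: queue one instruction, attach the queue, run.
overlay = HeadlessOverlay()
messages = queue.Queue()
messages.put({"action": "draw_diamond", "x": 500, "y": 300, "size": 40})
overlay.queue = messages
overlay.run()
assert overlay.drawn == [("diamond", 500, 300, 40)]
```

Separating message handling from drawing in this way lets the logic that interprets queued instructions be unit tested on machines without a display.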

-

DistanceChecker.py

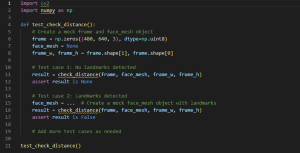

This utility module checks that the user is at the correct distance from the camera for eye tracking to function properly. Its methods are:

- __init__():

- check_distance():

The test_check_distance() function is a unit test for check_distance(), which estimates the user's distance by calculating the area of a triangle formed by landmarks on the face. The test verifies that this calculation behaves as expected in two scenarios:

- No landmarks detected: the function is called with a mock frame and a face_mesh of None, simulating a frame in which no face landmarks are found. The expected result is None, showing that the function handles the absence of landmarks instead of attempting a calculation.

- Landmarks detected: the function is called with a mock frame and a face_mesh object containing landmarks. The function computes the area of the triangle formed by these landmarks and returns False, indicating that the distance check is not triggered.

These two cases cover the main paths through check_distance(), with and without detected landmarks. Further test cases, for example a face close enough or far enough away to trigger the check, would extend coverage to the conditions under which the check returns True.
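The triangle-area idea can be sketched as follows, using the shoelace formula for the area. This is a hypothetical sketch rather than the module's code: it takes three landmark points directly instead of a frame and face_mesh, and the thresholds min_area and max_area, the choice of landmarks, and the meaning of True (check triggered) are all assumptions. Under a simple pinhole camera model, the apparent size of the face scales with 1/distance, so the triangle's area scales roughly with 1/distance², which is what makes it usable as a distance proxy.

```python
def triangle_area(p1, p2, p3):
    """Area of the triangle p1-p2-p3, using the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def check_distance(landmarks, min_area=0.01, max_area=0.05):
    """Hypothetical sketch of the distance check.

    landmarks: None, or three (x, y) face landmarks in normalized image
    coordinates (0..1). The triangle they form grows as the face moves
    closer to the camera and shrinks as it moves away.

    Returns None if no landmarks were detected, True if the check is
    triggered (face too far or too close), and False otherwise.
    """
    if landmarks is None:
        return None
    area = triangle_area(*landmarks)
    return not (min_area <= area <= max_area)
```

For example, the landmarks (0.4, 0.4), (0.6, 0.4) and (0.5, 0.7) form a triangle of area 0.03, inside the assumed range, so check_distance returns False, matching the second test case above.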

Performance Testing

Test device

| Property | Value |
|---|---|
| Device name | HP-5CG3090RNZ |
| Processor | 13th Gen Intel(R) Core(TM) i7-1370P 1.90 GHz |
| Installed RAM | 32.0 GB (31.6 GB usable) |
| System type | 64-bit operating system, x64-based processor |
| Edition | Windows 11 Pro |
| Version | 22H2 |
| Installed on | 12/15/2023 |
| OS build | 22621.3296 |
| Experience | Windows Feature Experience Pack 1000.22687.1000.0 |

Test result

| Module | Frame Rate (fps) |
|---|---|
| Camera | 26.6 |
| MediaPipe | 21.51 |
| Projective Transformation | 120,080 |
| Cursor Move Rate | 16,196 |

The cv2 (OpenCV) camera module captured video at 26.6 frames per second (fps). The MediaPipe module, which performs face landmark recognition, ran at 21.51 fps, which is fast enough to analyze the captured frames in real time.
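The report does not state how these rates were measured. One simple way to obtain figures like these is a wall-clock loop that counts how many times an operation completes per second, sketched below; measure_rate is a hypothetical helper, not part of the project. When step blocks, as a camera read does while waiting for the next frame, the measured rate reflects that waiting too, so the camera figure reflects how fast frames are delivered.

```python
import time


def measure_rate(step, duration_s=1.0):
    """Call step() repeatedly for about duration_s seconds; return calls per second."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    calls = 0
    start = time.perf_counter()
    elapsed = 0.0
    while elapsed < duration_s:
        step()
        calls += 1
        elapsed = time.perf_counter() - start
    return calls / elapsed


# Example with OpenCV (assumes a webcam at index 0):
#   import cv2
#   cap = cv2.VideoCapture(0)
#   print(f"camera: {measure_rate(cap.read):.1f} fps")
#   cap.release()
```

time.perf_counter is used because it is a monotonic, high-resolution clock intended for measuring short intervals.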

The projective transformation module, which maps eye positions to screen coordinates, ran at 120,080 operations per second (listed as fps in the table), or roughly 8 microseconds per transformation. This is over 4,000 times the camera's frame rate, so the transformation adds negligible latency to each frame.
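The high rate is unsurprising given how little work a projective transformation needs per point. The sketch below applies a 3x3 transformation matrix H to a single point in homogeneous coordinates; it is illustrative only, and the example matrix is hypothetical (the project's actual H comes from its own calibration). If calibration collects four eye/screen point pairs, OpenCV's cv2.getPerspectiveTransform can compute H from them and cv2.perspectiveTransform can apply it to arrays of points.

```python
def apply_homography(H, x, y):
    """Map the point (x, y) through a 3x3 projective transformation H.

    H is given as three rows. The point is lifted to homogeneous coordinates
    (x, y, 1), multiplied by H, and divided by the resulting w component.
    """
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


# Hypothetical matrix: scale normalized eye coordinates to a 1920x1080 screen.
H = [[1920, 0, 0], [0, 1080, 0], [0, 0, 1]]
screen_x, screen_y = apply_homography(H, 0.5, 0.5)  # (960.0, 540.0)
```

Each mapped point costs six multiplications, six additions and two divisions, which is why this stage runs thousands of times faster than landmark detection.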

Finally, cursor movement, a crucial part of user interaction, ran at 16,196 moves per second, or roughly 62 microseconds per move. Because this is far faster than the rate at which new eye positions arrive, cursor updates do not limit responsiveness.

These measurements show that the software can capture video, analyze it in real time, and move the cursor smoothly. Because each frame passes through every module, overall throughput can be no higher than that of the slowest stage, MediaPipe landmark detection at 21.51 fps; the projective transformation and cursor modules are not bottlenecks.
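The bottleneck argument can be checked with a little arithmetic on the reported rates. The snippet below converts each rate from the test results table into time per operation and picks the slowest stage. The slowest stage gives only an upper bound on end-to-end throughput: if the stages run one after another in a single loop, their per-frame times add, so the real rate can be lower.

```python
# Rates reported in the test results table, in operations per second.
rates = {
    "Camera": 26.6,
    "MediaPipe": 21.51,
    "Projective Transformation": 120_080,
    "Cursor Move Rate": 16_196,
}

# Convert each rate to time per operation in milliseconds.
latency_ms = {name: 1000 / rate for name, rate in rates.items()}

# The slowest stage caps overall throughput.
bottleneck = min(rates, key=rates.get)

for name, ms in latency_ms.items():
    print(f"{name}: {ms:.4f} ms")
print(f"Bottleneck: {bottleneck} ({rates[bottleneck]} fps)")
```

The transformation and cursor stages together take well under 0.1 ms per frame, compared with about 46 ms for MediaPipe.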

User Acceptance Testing

Testing was carried out with a small group of about five users. Testers included students from Richard Cloudesley School, students from Enfield Grammar, people from BBC Click and Special Effect, Patrick, and general users and peers. The test cases below describe each user and summarize their feedback, which was gathered from testers and project partners through surveys of the test users.

Test Cases

- Safiyah from Richard Cloudesley School: part of the target audience.

- Joe: has frequent involuntary head movements. It was unclear how the software would react, as the projective transformation only works with the user's head in a fixed position.

- Students from Enfield Grammar: generally not our target audience, but completely new to the software.

- Patrick: has no limbs, so eye gaze is a practical means of input for him. Body-point navigation can still be used, but he preferred eye gaze because body-point navigation tired him.

Testing Procedure:

Each participant was provided with consistent instructions and had the opportunity to familiarize themselves with the application before starting the tests. The main activity involved navigating a web browser using the Grid3 software, aimed at assessing the practical usability of our eye gaze system. To ensure fairness and account for the learning curve associated with calibration, we offered users three attempts at the calibration process.

Feedback and Evaluation:

Post-test feedback focused on several key questions to gauge the user experience:

- Did the cursor move as you expected?

- Did you experience any eye strain?

- What are your thoughts on the calibration process?

- Do you have any suggestions for improvement or changes you'd prefer?

Use Case Studies

Use Case Study 1: Safiyah from Richard Cloudesley School

Target Audience

Feedback and Adjustments:

- Response after Calibration 1: Eye tracking worked adequately, but the Grid3 control panel was not optimally sized, which made the initial experience uncomfortable.

- Modifications Implemented: The Grid3 control panel was enlarged and simplified to improve accessibility and ease of use.

- Response after Calibration 2: With the updated control panel, Safiyah found the process seamless and more accurate, though light reflection on glasses posed a challenge.

- Further Modifications: To accommodate users with glasses, adjustments were made, including the removal of Safiyah's glasses for better calibration.

- Response after Calibration 3: The final calibration showed improved comfort and usability, allowing for successful navigation with Grid3.

Use Case Study 2: Joe from Richard Cloudesley School

Challenges with Involuntary Head Movements

Feedback and Adjustments:

- Response after Calibration 1: Calibration was challenging due to Joe's involuntary head movements.

- Modifications Implemented: An auto-calibrate feature was considered to accommodate users with similar challenges.

- Response after Calibration 2: Improved calibration experience, though the user experienced fatigue from head movements.

Use Case Study 3: Group Study from Enfield Grammar

Feedback from a Non-Target Audience

Feedback and Adjustments:

- Response after Calibration 1: Initial calibration was seamless; however, their tendency to fidget posed a challenge.

- Advisory: Participants were advised to remain still for optimal software performance.

- Response after Calibration 2: With adjustments, the group managed seamless navigation and understood the software's purpose.

Use Case Study 4: Patrick from BBC

Adapting for No Limb Use

Feedback and Adjustments:

- Response after Calibration 1: Initial challenges with calibration accuracy and laptop height.

- Modifications Implemented: Adjustments to the setup to ensure the webcam and screen were at eye level.

- Response after Calibration 2: Improved calibration and navigation experience on the BBC website with Grid3.

- Further Feedback: Patrick noted the mouse accuracy and motion needed refinement for a comfortable experience.

- Response after Calibration 3: Positive feedback on smoother mouse movement, enhancing Patrick's interaction with the software.