We designed UnitPylot’s user interface with the goal of making testing as intuitive and seamless

as possible within the

VS Code environment. From the beginning, we aimed to reduce friction by integrating key visual

elements (the dashboard,

test status indicators, and inline coverage highlights) directly into the editor. Our intention

was to ensure that

developers wouldn’t have to context-switch or leave their workspace to understand their test

suite’s health. The

interface prioritises clarity: we chose minimalist graphs, concise tooltips, and clean layouts to

deliver relevant data

at a glance.

The UI went through multiple iterative improvements driven by user testing. For instance, early

users noted that the

dashboard lacked explanatory legends and that certain coverage indicators weren’t fully intuitive.

In response, we

incorporated hover tooltips, added labels to graphs, and made the inline suggestions more

contextually descriptive. This

iterative refinement process demonstrated a strong feedback loop, where the team worked

collaboratively to ensure every

visual element served a functional purpose. We also improved interaction design by refining

command placement in the

context menu and palette to reduce friction.

Future improvements could include adaptive UI elements based on user preferences or test suite

complexity to further

personalise the experience.

We built UnitPylot with a clear focus on solving real pain points in Python testing. Features like

test execution, code

coverage visualisation, AI suggestions, and snapshot tracking were all grounded in feedback from

developers we spoke to

during our research phase. Rather than being a passive test viewer, UnitPylot actively helps

developers understand and

improve their testing through both automation and insight.

Each major feature went through rounds of refinement. For instance, with the Fix Failing Tests

command, we slowly

expanded the logic to handle real failure output. Our Improve Coverage and Optimise Test Speed &

Memory commands were

developed using hand-crafted examples and edge cases, which we used to evaluate the accuracy of

the AI’s suggestions.

Throughout, we tried to balance intelligent automation with developer control: suggestions are

non-destructive, clearly

explained, and easy to accept or reject.

However, we also encountered functional limitations, especially when dealing with less

conventional Python project

structures. Some files weren’t detected properly if they didn’t follow common naming conventions,

and AI suggestions

occasionally struggled with complex code contexts. While these issues didn’t block core

functionality, they highlighted

the importance of refining our parsing logic and improving the prompts we send to the LLM.

Going forward, we plan to improve our static analysis and introduce more advanced filters to make

the AI’s output

smarter and more context-aware.

Ensuring stability was a major focus throughout the project. Because testing tools are only useful

when reliable, we

aimed to build a system that handled errors gracefully and provided meaningful feedback when

something went wrong. We

wrote unit tests for core modules like the TestRunner, history tracker, and report generator.

These gave us confidence

that the underlying logic was robust even as we introduced new features.

We manually tested the extension across various environments and scenarios—intentionally breaking

tests and running the

extension on both macOS and Windows to confirm that it could handle errors without crashing.

Future improvements could include adding environment checks or fallbacks and better logging to

help users self-diagnose

issues.

Overall, the extension is stable in expected conditions, but we want to make it more

resilient to variability in user setups.

Efficiency was a driving factor behind many of our design choices. Developers often hesitate to

write or run tests

because of time constraints, so we wanted UnitPylot to remove friction from that process. The most

significant

efficiency gain came from implementing function-level hashing. Instead of running the entire test

suite every time, we

can now identify which functions changed and rerun only the relevant tests. In our testing, this

drastically reduced

test execution times, especially in medium-to-large projects.

We also focused on UI responsiveness. Since much of our logic (like hashing and parsing coverage)

runs in the

background, we used VS Code’s asynchronous APIs to avoid blocking the editor. Most operations

complete quickly, and even

the more expensive tasks like snapshot creation or graph rendering happen without interrupting the

coding flow. We also

allow users to configure how often the extension updates test data, giving them more control over

performance vs.

freshness.

From the outset, we wanted UnitPylot to be compatible with any developer using pytest, regardless

of operating system or

environment. We tested the extension on Windows, macOS, and Linux, and across several versions of

Python to ensure core

features like test execution, coverage parsing, and dashboard rendering worked reliably

everywhere.

To give users more flexibility, we also allowed integration with custom LLM endpoints. This means

users can configure a

locally hosted or third-party model in place of the default Language Model API. While this feature

was useful in

principle, it introduced new challenges. For instance, not all LLMs return output in the strict

JSON format our

extension expects, which occasionally caused parsing errors or failed suggestions.

UnitPylot is tailored specifically for brownfield Python projects, acknowledging the real-world

complexity of legacy

codebases. It is designed to integrate smoothly into existing workflows without requiring project

restructuring. By

focusing on brownfield compatibility and a leading test framework, the extension offers dependable

integration in common

development environments.

Future plans include broadening compatibility with additional test frameworks, to further meet the

needs of diverse

teams and organisations.

We kept our code modular by splitting responsibilities into separate files—test logic, file

hashing, coverage parsing,

history management, and AI request handling were all clearly separated. This made it easier for us

to work in parallel

and avoid stepping on each other’s changes.

We followed consistent naming conventions and used TypeScript and Python typing wherever possible

to catch bugs before

runtime. Our GitHub workflow included linting, and every major feature was reviewed before

merging. Unit tests for the

TestRunner and history classes gave us confidence that small changes wouldn’t break critical

functionality.

Going forward, maintaining detailed changelogs, developer onboarding guides, and API docs will be

essential to scaling the project and onboarding contributors.

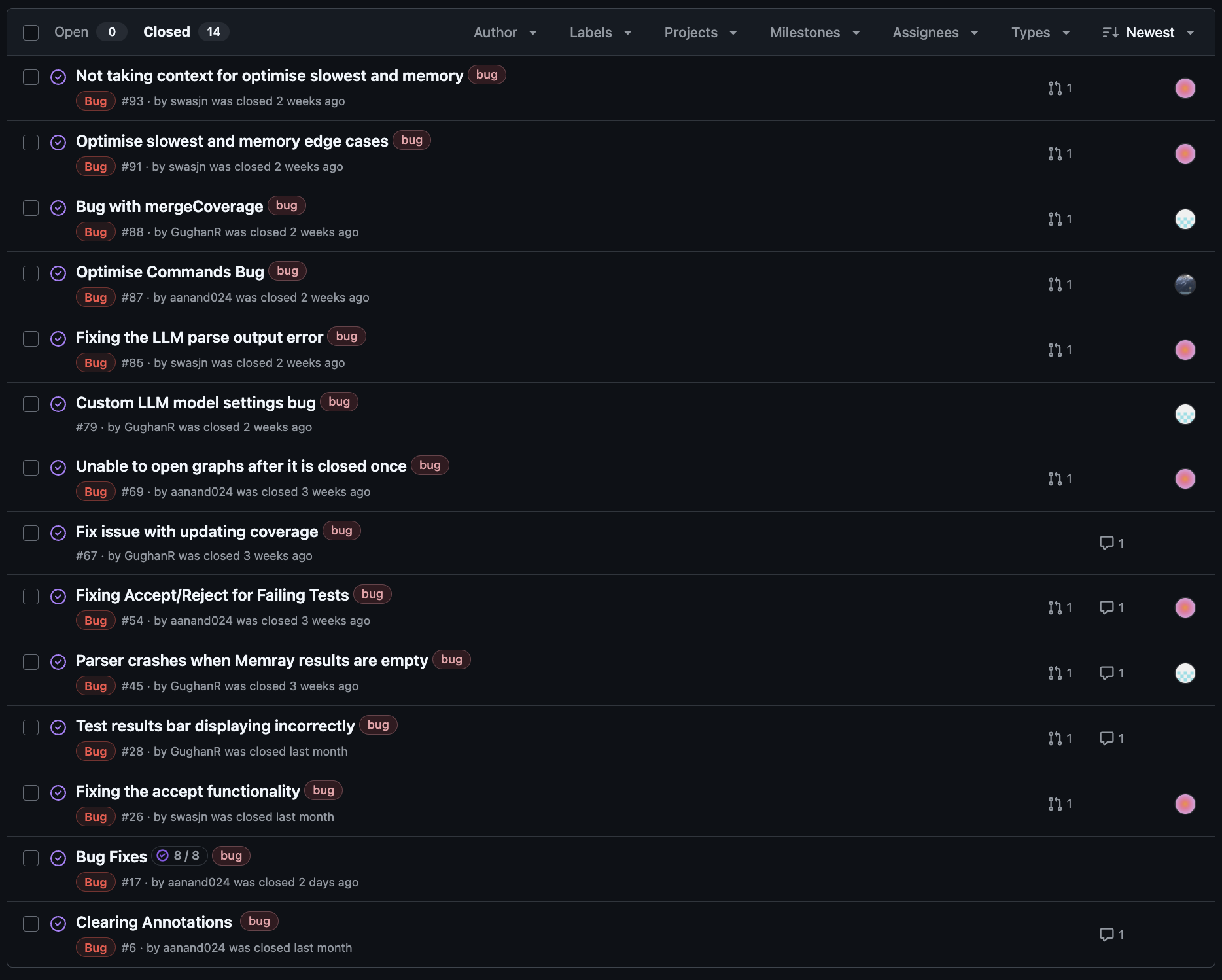

Managing the project as a student team required discipline and adaptability. We met regularly to

plan sprints, divide

tasks, and reassess priorities based on deadlines and feedback. We adopted Agile with a Kanban framework to prioritise tasks effectively, utilising GitHub's built-in "Projects" functionality.

Our GitHub project board tracked

tasks across categories

like core features, UI updates, bug fixes, and testing. This helped us visualise progress and stay

aligned, even when

team members had different schedules or workloads.

Client check-ins helped keep us focused. Feedback from Microsoft and peer developers guided many

decisions. We also used

GitHub Issues to track bugs and incorporate user feedback from demo sessions, which allowed us to

iterate quickly on

what mattered most.

That said, we did encounter challenges with scope creep, particularly around AI integration. Some

features took longer

than expected, which compressed time for testing and polish. In hindsight, we would benefit from

setting clearer

boundaries on experimental features and reserving buffer time at the end of each sprint.

Going forward, we want to adopt a more structured sprint retrospective process to reflect on what

worked and apply those lessons proactively.