Packages & APIs

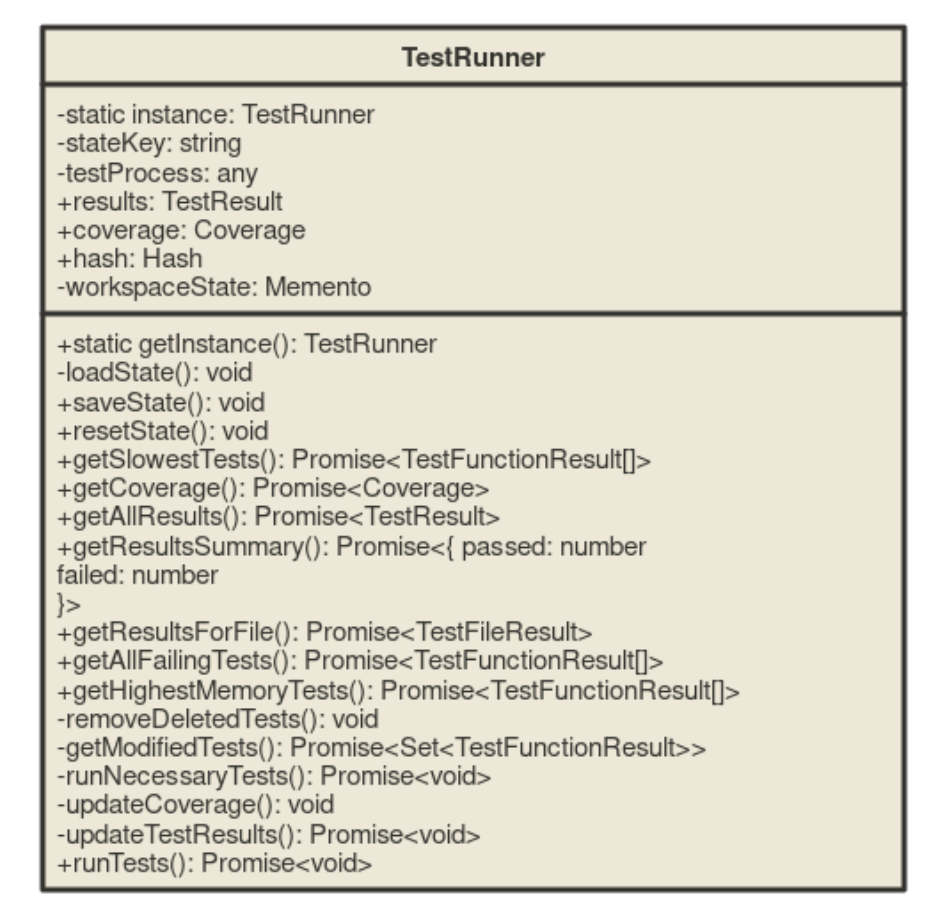

UnitPylot is developed as a VS Code extension using TypeScript for the frontend, Node.js for the backend, and Python for extracting test suite data.
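To illustrate how the Node.js side might consume test-suite data produced by Python, the sketch below parses the output of `pytest --collect-only -q`, which prints one test node ID per line followed by a summary line. This is an assumed approach, not necessarily how UnitPylot extracts its data, and `parseCollectedTests` is a hypothetical helper.

```typescript
// Hypothetical helper: parse the output of `pytest --collect-only -q`, which
// prints one test node ID per line followed by a summary such as
// "2 tests collected in 0.01s".
function parseCollectedTests(output: string): string[] {
  return (
    output
      .split(/\r?\n/)
      .map((line) => line.trim())
      // Test node IDs contain "::" (file::[class::]function), so this
      // filter drops blank lines and the summary line.
      .filter((line) => line.indexOf("::") !== -1)
  );
}
```

The raw output could be obtained with Node's `child_process.execFile("python", ["-m", "pytest", "--collect-only", "-q"], { cwd: workspaceRoot }, callback)`, where `workspaceRoot` is a placeholder for the project directory.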

The Node.js ecosystem provides the core functionality the extension requires, through modules for calling APIs, performing file system and path operations, and querying SQLite databases. The key modules used are:

- vscode: provides the APIs for interacting with VS Code and integrating with Copilot.

- fs: handles file system operations such as reading from and writing to workspace files.

- path: manages directory structures and resolves file paths.

- sqlite3: provides database access for reading the records generated by pytest-monitor.
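The records read through sqlite3 come from pytest-monitor's database (by default, a local `.pymon` file). The sketch below assumes rows from its `TEST_METRICS` table with the columns `ITEM`, `ITEM_PATH`, `TOTAL_TIME` and `MEM_USAGE`; these names should be checked against the installed pytest-monitor version. `METRICS_QUERY` and `summariseMetrics` are hypothetical, and show one way repeated runs of the same test could be aggregated before display.

```typescript
// Assumed shape of a row in pytest-monitor's TEST_METRICS table
// (column names should be verified against the installed version).
interface TestMetricRow {
  ITEM: string; // test function name
  ITEM_PATH: string; // module of the test, e.g. "tests.test_math"
  TOTAL_TIME: number; // duration of the test, in seconds
  MEM_USAGE: number; // memory usage reported for the test, in MB
}

interface TestSummary {
  test: string;
  runs: number;
  avgTime: number;
  peakMemory: number;
}

// Hypothetical query; its result rows would be passed to summariseMetrics.
const METRICS_QUERY =
  "SELECT ITEM, ITEM_PATH, TOTAL_TIME, MEM_USAGE FROM TEST_METRICS";

// Group rows by test and compute run count, mean duration and peak memory.
function summariseMetrics(rows: TestMetricRow[]): TestSummary[] {
  const groups: { [test: string]: TestMetricRow[] } = {};
  for (const row of rows) {
    const key = `${row.ITEM_PATH}.${row.ITEM}`;
    (groups[key] = groups[key] || []).push(row);
  }
  return Object.keys(groups)
    .sort()
    .map((test) => {
      const samples = groups[test];
      const totalTime = samples.reduce((sum, r) => sum + r.TOTAL_TIME, 0);
      return {
        test,
        runs: samples.length,
        avgTime: totalTime / samples.length,
        peakMemory: samples.reduce((max, r) => Math.max(max, r.MEM_USAGE), 0),
      };
    });
}
```

With the `sqlite3` package, the rows could be fetched via `new sqlite3.Database(dbPath).all(METRICS_QUERY, (err, rows) => ...)`, where `dbPath` is a placeholder, and then passed to `summariseMetrics`.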

Within the vscode module, several APIs are utilised to implement the core functionality:

- Extension API: manages the activation, deactivation, and life cycle of the extension, and handles the registration and management of custom commands.

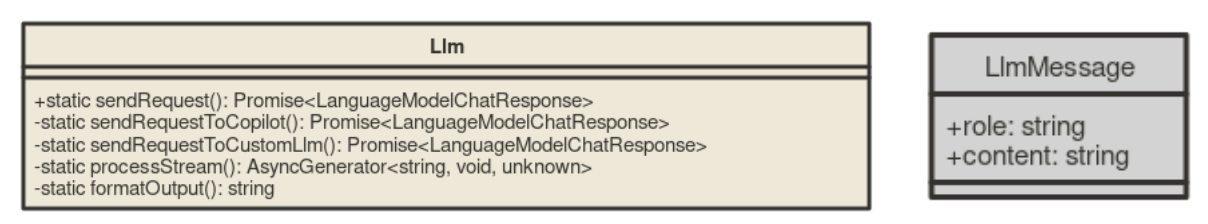
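Command registration through the Extension API can be sketched as follows. To keep the example runnable outside VS Code, `registerCommand` is passed in rather than imported; the structural types stand in for `vscode.Disposable` and `vscode.ExtensionContext`, and the command IDs and handlers are hypothetical placeholders rather than UnitPylot's actual commands.

```typescript
// Minimal structural stand-ins for the parts of the VS Code API used here;
// in the extension these come from `import * as vscode from "vscode"`.
interface DisposableLike {
  dispose(): unknown;
}

interface ExtensionContextLike {
  subscriptions: DisposableLike[];
}

type RegisterCommand = (
  command: string,
  callback: (...args: unknown[]) => unknown
) => DisposableLike;

// Hypothetical command IDs and handlers; real IDs must also be declared
// under "contributes.commands" in package.json.
const commandHandlers: { [id: string]: () => unknown } = {
  "unitpylot.runTests": () => "running tests",
  "unitpylot.optimiseTests": () => "optimising tests",
};

// Register each handler and keep the returned disposable in
// context.subscriptions so it is cleaned up when the extension deactivates.
function activateWith(
  context: ExtensionContextLike,
  registerCommand: RegisterCommand
): void {
  for (const id of Object.keys(commandHandlers)) {
    context.subscriptions.push(registerCommand(id, commandHandlers[id]));
  }
}
```

Inside `activate(context: vscode.ExtensionContext)`, this could be called as `activateWith(context, (id, cb) => vscode.commands.registerCommand(id, cb))`; `deactivate()` can then stay empty, because VS Code disposes everything in `context.subscriptions` when the extension is deactivated.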

- Language Model API: enables the creation of specialised agents by interacting with a cloud-based large language model (LLM) accessed through GitHub Copilot and powered by GPT-4.0.

- TextEditor API: provides access to open code files and is used to display AI-generated suggestions.

- Workspace API: provides access to the user's project structure and supports file management.

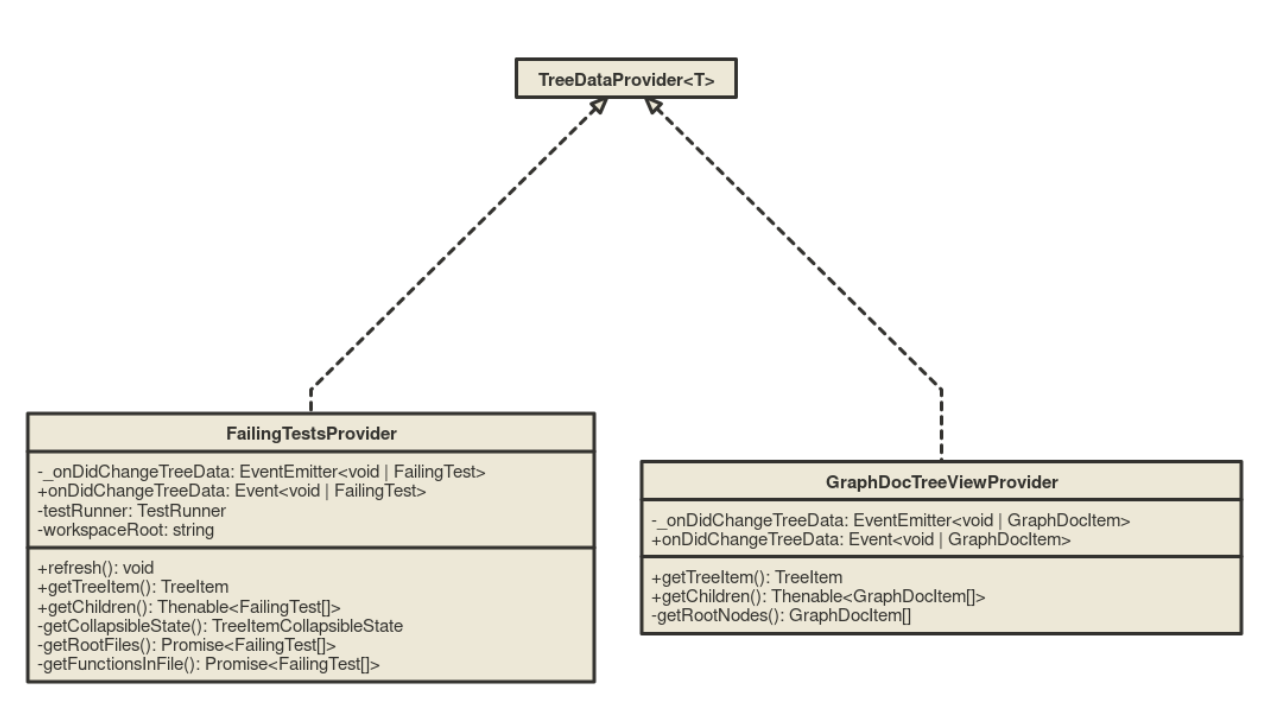

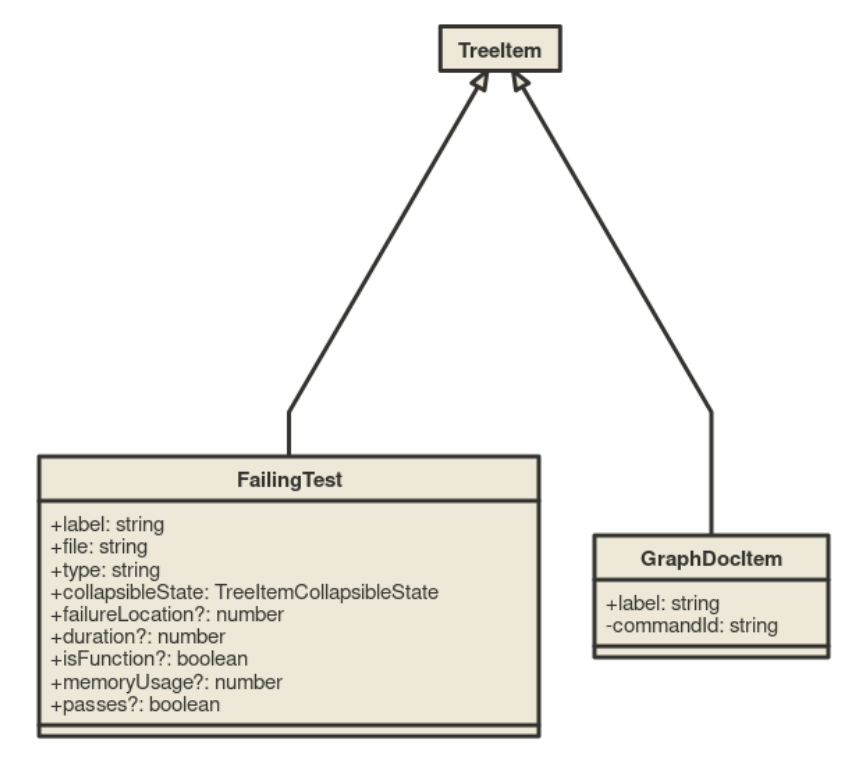
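The Language Model API leaves prompt design to the extension. As a hedged sketch, the hypothetical `buildOptimisationPrompt` below turns per-test timing data into the message texts for a test-optimisation agent; the instruction wording, the `SlowTest` shape and the time threshold are illustrative assumptions, not UnitPylot's actual prompt.

```typescript
// Hypothetical description of a test and its average duration.
interface SlowTest {
  name: string;
  avgTimeSeconds: number;
  source: string;
}

// Illustrative instructions that specialise the general-purpose model
// into a test-optimisation agent.
const AGENT_INSTRUCTIONS =
  "You are a pytest optimisation assistant. Suggest changes that make the " +
  "following tests faster without reducing coverage. Reply with Python code.";

// Build the ordered message texts: the instructions first, then one message
// per test whose average duration exceeds the threshold.
function buildOptimisationPrompt(
  tests: SlowTest[],
  thresholdSeconds: number
): string[] {
  const slow = tests.filter((t) => t.avgTimeSeconds > thresholdSeconds);
  return [AGENT_INSTRUCTIONS].concat(
    slow.map(
      (t) =>
        `Test ${t.name} averages ${t.avgTimeSeconds.toFixed(2)} s:\n${t.source}`
    )
  );
}
```

In the extension, each string could be wrapped with `vscode.LanguageModelChatMessage.User(...)` and sent with `model.sendRequest(messages, {}, token)` on a model obtained from `vscode.lm.selectChatModels({ vendor: "copilot" })`. The reply streams through `response.text`, and the resulting suggestion could then be shown in the editor through the TextEditor API.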

- Tree View API: displays hierarchical data, and is used to present the test suite as a structured tree.

- Webview API: enables interactive UI elements, such as test history graphs.

- Chat API: allows the user to interact with a chat participant that suggests test optimisations.
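The hierarchy behind the Tree View can be sketched as a file, class and function tree built from pytest node IDs. `TestNode`, `buildTestTree` and `TestTreeModel` are hypothetical names; the model only mirrors the `getChildren` contract of `vscode.TreeDataProvider` so it can run without VS Code.

```typescript
// One node of the test hierarchy: a test file, class, or function.
interface TestNode {
  label: string;
  nodeId: string; // pytest node ID up to and including this node
  children: TestNode[];
}

// Build a file -> class -> function tree from pytest node IDs such as
// "tests/test_math.py::TestAdd::test_positive".
function buildTestTree(nodeIds: string[]): TestNode[] {
  const roots: TestNode[] = [];
  for (const id of nodeIds) {
    let level = roots;
    let prefix = "";
    for (const part of id.split("::")) {
      prefix = prefix ? `${prefix}::${part}` : part;
      // Reuse an existing node with the same label at this level, if any.
      let node: TestNode | undefined = level.filter((n) => n.label === part)[0];
      if (!node) {
        node = { label: part, nodeId: prefix, children: [] };
        level.push(node);
      }
      level = node.children;
    }
  }
  return roots;
}

// Mirrors TreeDataProvider.getChildren: top-level nodes when called
// without an element, otherwise that element's children.
class TestTreeModel {
  private readonly roots: TestNode[];

  constructor(nodeIds: string[]) {
    this.roots = buildTestTree(nodeIds);
  }

  getChildren(element?: TestNode): TestNode[] {
    return element ? element.children : this.roots;
  }
}
```

A `vscode.TreeDataProvider<TestNode>` could delegate `getChildren` to this model, implement `getTreeItem` as `new vscode.TreeItem(node.label, node.children.length > 0 ? vscode.TreeItemCollapsibleState.Collapsed : vscode.TreeItemCollapsibleState.None)`, and be registered with `vscode.window.registerTreeDataProvider(viewId, provider)`. Splitting on `::` is a simplification: parametrised IDs whose parameters contain `::` would need extra handling.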