Introduction

Project Background

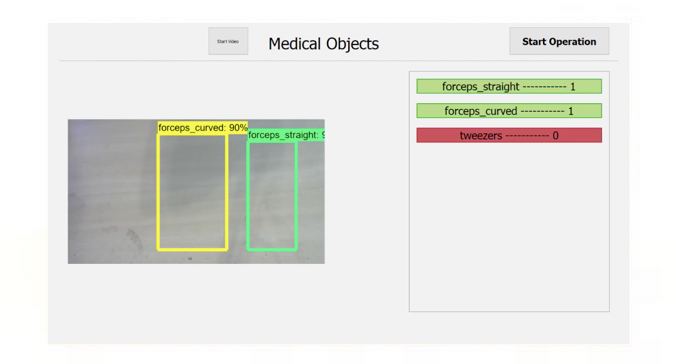

The objective of our project was to apply computer vision to object detection in medicine. We chose to use computer vision to detect medical instruments in operating theatres and so support surgical procedures. By identifying the different instruments through object detection, the live status of every object in the theatre can be displayed, giving clarity to all the staff present. All the data gathered by the system can also be logged and used later to analyse operations.

To be useful, however, such a system must be able to distinguish between different medical instruments by even the smallest details, across a wide range of environments. To achieve this, we need to train our own object detection models on images of our own medical instruments in various clinical scenarios. Testing these models will let us discover the limitations of current computer vision technologies and determine whether such a system is feasible.

Client

Great Ormond Street Hospital's (GOSH) partnership with Microsoft allows the two to collaborate on developing artificial intelligence (AI) tools to transform child health. Areas of focus include machine learning, assisted decision making and medical chatbots, with the aim of providing care that is even safer, more effective and more personalised, as well as an enhanced patient experience.

The proof-of-concept outputs will then be available for GOSH to scale and test, with the aim of building solutions for the rest of the NHS and beyond. To research and evaluate these projects and a range of other new technologies, GOSH is creating a dedicated Digital Research, Informatics and Virtual Environments unit (DRIVE).

Project Goals

An operating theatre is a particularly challenging environment: many of the objects are similar in colour, and the scene contains a great deal of clutter and occlusion. We hope to use object detection to identify and track different clinical instruments reliably across different clinical environments. We think this is useful because it can bring order to a chaotic environment by making the different instruments easier to identify.

Later, we hope to use the data collected by the system to see which instruments were used during specific surgeries. This would give doctors in training access to real-world information on how surgeons perform specific operations, which instruments they use and for how long. This could be instrumental in improving medical education.

Another use for the system is seeing which tool set different surgeons prefer for each surgery. This allows the surgical staff to be better prepared and to have everything they need at hand. All staff will also have a better understanding of what is happening in the operating theatre at different stages of the surgery. By collecting all the data at the end of a surgery, graphs can be produced that give a clear and comprehensive view of that specific procedure.

By the end of the project we hope to have a system that can detect instruments under varied conditions, such as different backgrounds and lighting. We would like the system to report which instruments are being used with very high accuracy. Our task was also to assess how feasible such a system is for real-world use.

Requirements Gathering & Sealing

Initial setup

We spent the first week researching the computer vision field and brainstorming the different directions the project could take. As we had not yet met our client and the project brief was not very detailed, we kept our sketches and ideas brief but covered a diverse range of themes. At the start of the second week we had our first meeting with our client.

During this meeting the client made clear that, although they had no specific project in mind, they wanted us to use computer vision in some way to aid the medical field. The client suggested two possible routes for the project:

- Using facial recognition to help diagnose conditions in children (one example given was Down syndrome)

- Using computer vision to detect medical instruments in operating theatres to aid procedures.

We also initially discussed whether to use the Microsoft Kinect or ordinary high-quality cameras to capture the live feed. The Kinect has depth sensing, which we thought could improve the accuracy of the object detection if we used multiple Kinects to build a mesh of the scene. However, the system is old and its video quality is quite low (640 x 480). Furthermore, there does not seem to be a mature object detection/tracking API for it, especially for integrating multiple Kinects together, so we decided we were better off using high-quality cameras for the video stream instead.

Sealing requirements

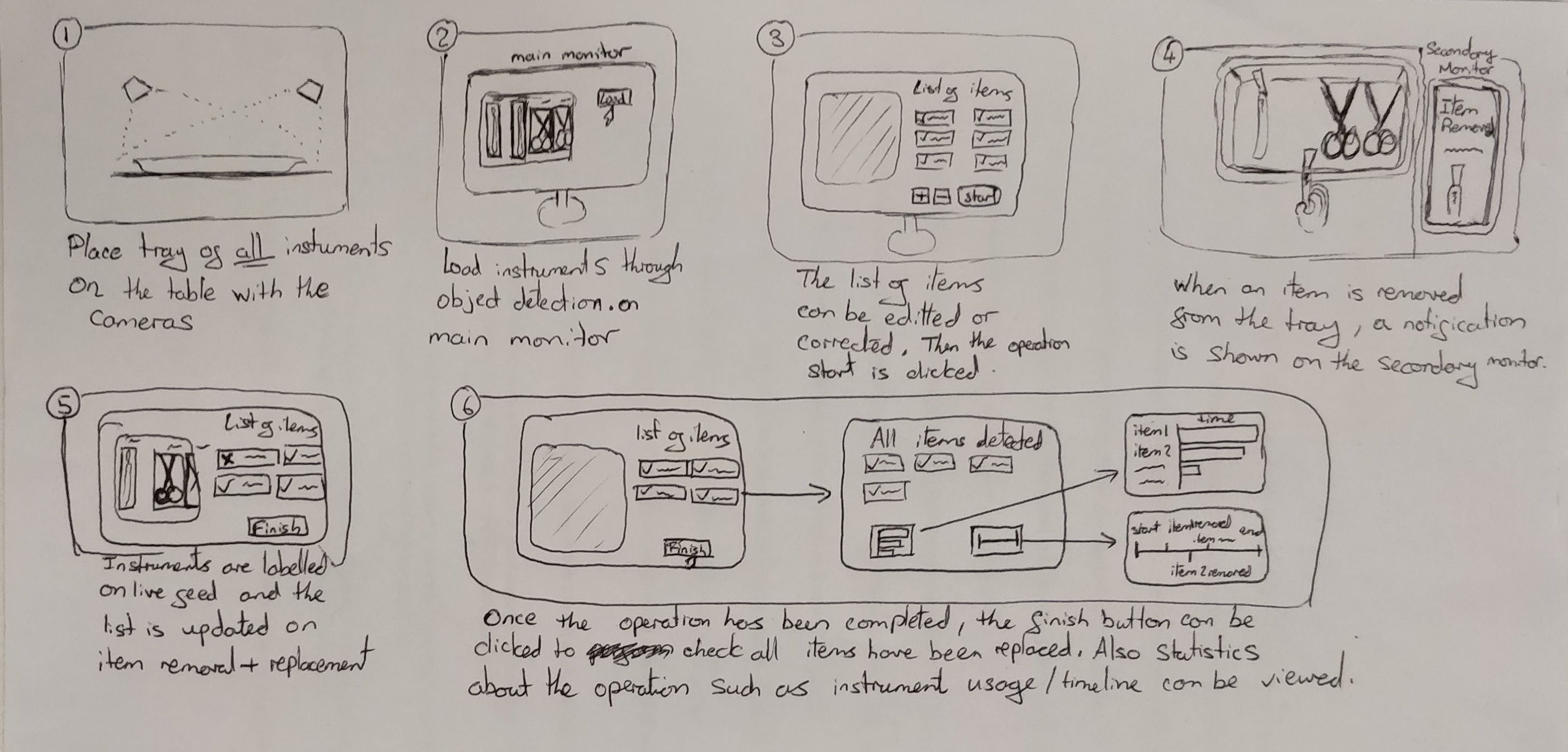

During our second meeting with the client we confirmed the project and discussed the requirements, which resulted in the MoSCoW list. Showing the client our initial sketch designs allowed us to build on and enhance the interface, leading to the final prototype shown in the HCI presentation.

After talking with different users, we realised we needed a no-login option so that the doctor can start the program without logging in and the surgery can begin as soon as possible. In this mode the monitor does not assume any tools are in use and displays only the tools it has detected. The no-login mode is accessed by pressing the GOSH button on the login page.
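The difference between a logged-in session and the no-login mode can be sketched as a single display rule. This is a minimal illustration, not the project's implementation: it assumes (hypothetically) that a logged-in surgeon has a saved instrument set, in line with the "Customisation options of sets" requirement, and that the detector reports the names of instruments it has seen. `instruments_to_display` and `expected_set` are illustrative names.

```python
def instruments_to_display(seen, expected_set=None):
    """Return the sorted instrument names the live monitor should list.

    Hypothetical rule: in a logged-in session, the surgeon's saved set
    (expected_set) is shown together with anything extra the detector has seen.
    In no-login mode, expected_set is None, so nothing is assumed and only
    instruments that have actually been detected are listed.
    """
    seen = set(seen)
    if expected_set is None:
        # No-login mode: display only what the camera has detected.
        return sorted(seen)
    # Logged-in mode: saved set plus any unexpected detections.
    return sorted(set(expected_set) | seen)
```

For example, a guest session that has only detected a scalpel lists just `["scalpel"]`, whereas a logged-in surgeon whose saved set also contains forceps sees both entries straight away.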

Personas

We created these personas to help us understand the needs of different types of users.

Dr Harry Michael Yap

Dr Yap is a 32-year-old full-time general surgeon. As a result, he deals with a large number of emergency surgeries, which require a wide range of skills, experience and equipment. His goals include ensuring that as many patients as possible leave his care satisfied, continually learning and gaining new skills, and performing to the best of his ability to avoid mistakes. The gratitude and smiles of his patients and their families keep him motivated, as they remind him that he is making a difference to people's lives. At weekends he likes to relax and recharge for the week ahead. He also enjoys going out in the evenings for drinks with friends or colleagues to catch up or talk about work, though he is careful about how much he spends on such nights as he likes to keep to his budget.

Kate Johnston

Kate is a 40-year-old scrub nurse who has worked alongside doctors for the past 15 years. She is responsible for handling surgical instruments in the operating room. Her favourite part of the role is interacting with patients and developing a bond with them. In her spare time she likes spending time with family and children and solving crossword puzzles. Her main goal is to become head scrub nurse at the hospital, branch out into a managerial role and use her years of experience to help her coworkers.

Storyboard

We created the storyboard below to show a typical setup and how the program could be used in an operating theatre. It shows the process of loading the program, the interactions users could make and how the program responds to those inputs.

MoSCoW list

Must Have:

- To be able to detect and identify medical objects

- Experiment with different settings and obstructions to mimic clinical scenarios

- Simple interface to display live object status

- List of objects present

- List of objects removed

- Identify/label all the different objects in view on the interface

- After the operation, check that all objects are accounted for

Should Have:

- Customisation options for instrument sets

- Two separate user interfaces

- Notification when an object has been removed, identifying the instrument

Could Have:

- End of operation object summary, Machine Learning

- Object timestamps and heat maps

Won't Have:

- No notification sounds as they can be distracting

- Data collation from multiple theatres
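The "List of objects present" and "List of objects removed" requirements imply turning per-frame detections into a stable status. Because occlusions are common in theatre, a detector can miss an instrument for a few frames while it is still there. The sketch below is a minimal illustration under assumptions that are not from the original: detections arrive as one set of class labels per frame, each label stands for a single instrument, and an instrument is only declared removed after `miss_threshold` consecutive missed frames. `PresenceTracker` and `miss_threshold` are hypothetical names.

```python
class PresenceTracker:
    """Turns noisy per-frame detections into 'present' and 'removed' lists.

    Assumption: one instrument per label. An instrument is reported as removed
    only after it has been missing for `miss_threshold` consecutive frames,
    so a brief occlusion does not flip its status.
    """

    def __init__(self, miss_threshold=15):
        self.miss_threshold = miss_threshold
        self.missed = {}       # label -> consecutive frames without a detection
        self.present = set()
        self.removed = set()

    def update(self, detected_labels):
        detected = set(detected_labels)
        # Any detection resets the miss counter and (re)marks the item present.
        for label in detected:
            self.missed[label] = 0
            self.present.add(label)
            self.removed.discard(label)
        # Count misses for items that are present but not seen in this frame.
        for label in self.present - detected:
            self.missed[label] += 1
            if self.missed[label] >= self.miss_threshold:
                self.present.discard(label)
                self.removed.add(label)
```

A larger threshold tolerates longer occlusions but makes the "removed" notification slower; the right value would have to be tuned against the frame rate and real theatre footage.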

Use cases

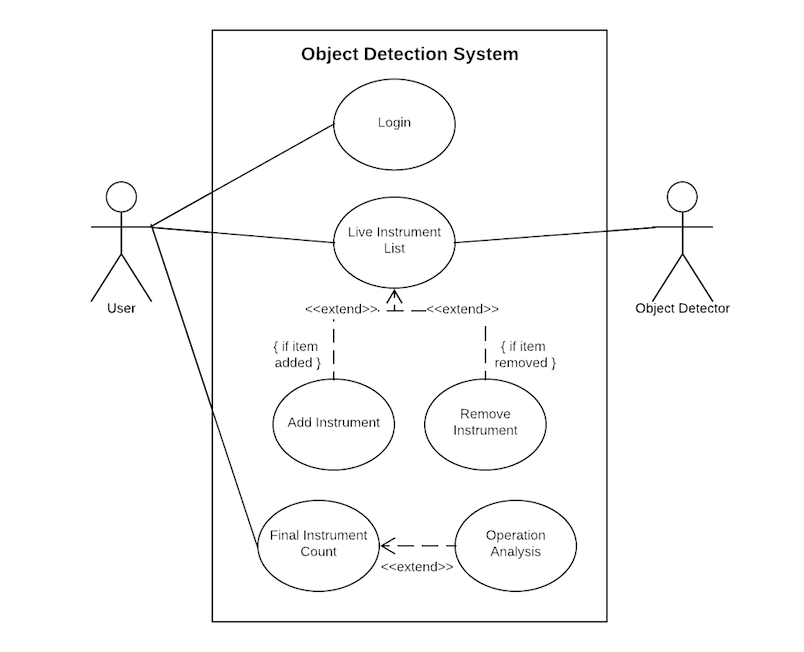

Use Case Diagram

Use Case List

Actors

- User

- Nurse

- Doctors

- Operating Technicians

- Object Detector

Use Case

- Login

- Live Instrument List

- Add Instrument

- Remove Instrument

- Final Instrument Count

- Operation Analysis

Use Case Tables

| Use Case | Instrument List Page |
|---|---|
| ID | UC1 |
| Brief Description | Instrument removed from tray |
| Primary Actors | User |
| Secondary Actors | Object Detection System |
| Preconditions | User logged in to system |
| Main Flow | If count = 0, item display becomes red |
| Postconditions | None |
| Alternative Flows | ERROR |

| Use Case | Instrument List Page |
|---|---|
| ID | UC2 |
| Brief Description | Instrument added to tray |
| Primary Actors | User |
| Secondary Actors | Object Detection System |
| Preconditions | User logged in to system |
| Main Flow | Create the instrument entry if it does not exist; if the count equalled 0 before the increase, item display becomes green |
| Postconditions | None |
| Alternative Flows | ERROR |
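The main flows of UC1 and UC2 amount to a per-instrument counter whose display colour changes when the count reaches or leaves zero. The following is a minimal sketch of that logic, assuming (hypothetically) that the detector emits one "added" or "removed" call per instrument and that the "ERROR" alternative flow covers removing an instrument that is not on the list. `InstrumentList`, `add` and `remove` are illustrative names, not the project's code.

```python
class InstrumentList:
    """Live instrument list following the UC1/UC2 main flows."""

    def __init__(self):
        self.counts = {}   # instrument name -> number currently on the tray
        self.colours = {}  # instrument name -> display colour

    def add(self, name):
        # UC2: create the entry if it does not exist, then increase the count.
        previous = self.counts.get(name, 0)
        self.counts[name] = previous + 1
        if previous == 0:
            # The count equalled 0 before the increase, so the display turns green.
            self.colours[name] = "green"

    def remove(self, name):
        # Alternative flow (assumed): removing an instrument with no count is an error.
        if self.counts.get(name, 0) == 0:
            raise ValueError(f"{name} is not on the instrument list")
        # UC1: decrease the count; when none are left, the display turns red.
        self.counts[name] -= 1
        if self.counts[name] == 0:
            self.colours[name] = "red"
```

Keeping the colour as derived state on each transition, rather than recomputing it in the interface, means the display only changes at the two moments the use cases describe.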

| Use Case | Instrument List Page |
|---|---|
| ID | UC3 |
| Brief Description | Complete list of instruments used in the operation |
| Primary Actors | User |
| Secondary Actors | Local System |
| Preconditions | User logged in to system |
| Main Flow | |
| Postconditions | None |
| Alternative Flows | ERROR |
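UC3 and the "After the operation, check that all objects are accounted for" requirement both come down to replaying the operation's events against the tray's starting contents. This is a sketch under stated assumptions, not the project's method: the starting tray is a mapping of instrument name to count, and the log is a hypothetical list of `(instrument, action)` pairs where `action` is `"removed"` or `"returned"`. `final_instrument_count` is an illustrative name.

```python
from collections import Counter


def final_instrument_count(start_counts, events):
    """Return (instruments used, instruments still unaccounted for).

    start_counts: instrument name -> count on the tray before the operation.
    events: list of (instrument, action), action is "removed" or "returned".
    """
    on_tray = Counter(start_counts)
    used = []  # in order of first use
    for name, action in events:
        if action == "removed":
            on_tray[name] -= 1
            if name not in used:
                used.append(name)
        elif action == "returned":
            on_tray[name] += 1
        else:
            raise ValueError(f"unknown action: {action!r}")
    # Counter subtraction keeps only positive differences: instruments with
    # fewer on the tray now than at the start are reported as missing.
    missing = Counter(start_counts) - on_tray
    return used, dict(missing)
```

For instance, with one scalpel and two forceps at the start, if both forceps and the scalpel are removed but only the scalpel and one pair of forceps come back, the function reports `["forceps", "scalpel"]` as used and `{"forceps": 1}` as unaccounted for.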

| Use Case | Operation Analysis Page |
|---|---|
| ID | UC4 |
| Brief Description | Data analytics from data received from the operation |
| Primary Actors | User |
| Secondary Actors | Local System |
| Preconditions | User logged in to system |
| Main Flow | |
| Postconditions | None |
| Alternative Flows | ERROR |
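One analysis the report motivates for the operation analysis page is how long each instrument was in use. The sketch below shows one way to derive that from timestamped events; it is an illustration under assumptions not stated in the original: events are `(timestamp_in_seconds, instrument, action)` tuples sorted by time, an instrument is counted as "in use" while it is off the tray, and each label stands for a single instrument. `usage_durations` and `end_time` are hypothetical names.

```python
from collections import defaultdict


def usage_durations(events, end_time=None):
    """Total seconds each instrument spent off the tray (assumed to be in use).

    events: (timestamp_seconds, instrument, action) tuples sorted by time,
    with action "removed" or "returned".
    end_time: if given, instruments never returned are counted up to this time.
    """
    out_since = {}               # instrument -> time it left the tray
    totals = defaultdict(float)
    for t, name, action in events:
        if action == "removed":
            out_since.setdefault(name, t)  # keep the earliest unmatched removal
        elif action == "returned" and name in out_since:
            totals[name] += t - out_since.pop(name)
    if end_time is not None:
        for name, start in out_since.items():
            totals[name] += end_time - start
    return dict(totals)
```

The resulting per-instrument totals are the kind of data that could feed the graphs and heat maps mentioned in the requirements; timestamps could come from the same events that drive the live instrument list.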

| Use Case | Login Page |
|---|---|
| ID | UC5 |
| Brief Description | System Login |
| Primary Actors | User |
| Secondary Actors | Local System |
| Preconditions | None |
| Main Flow | If the login details are correct, enter the application; if they are incorrect, show an error |
| Postconditions | None |
| Alternative Flows | ERROR |
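The UC5 main flow only states that correct details grant access and incorrect ones produce an error. A common way to implement that check without storing plain-text passwords is a salted key-derivation hash compared in constant time; the sketch below uses Python's standard `hashlib.pbkdf2_hmac` and `hmac.compare_digest` for this. It is an assumption, not the project's implementation: the in-memory `users` dictionary, the iteration count and the names `hash_password`, `login` and `LoginError` are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor; tune for the target hardware


class LoginError(Exception):
    """Raised for the UC5 error flow (incorrect login details)."""


def hash_password(password, salt=None):
    """Return (salt, digest) for a password using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def login(users, username, password):
    """users: username -> (salt, digest). Returns the username on success."""
    record = users.get(username)
    if record is None:
        # Same message as a wrong password, so usernames are not revealed.
        raise LoginError("Incorrect username or password")
    salt, expected = record
    _, digest = hash_password(password, salt)
    if not hmac.compare_digest(digest, expected):
        raise LoginError("Incorrect username or password")
    return username
```

A registered user would be stored as `users["kate"] = hash_password("...")`; the no-login (GOSH button) path would bypass this check entirely.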