TESTING STRATEGY

When planning the testing strategy, the team considered the different levels of testing we could reach. We focused on the three primary levels (unit, integration and system testing), with an emphasis on writing unit tests. Each team member was responsible for testing their own portion of the project during development, while communicating constantly with the others to make sure everyone stayed on track. The team also used test-driven development (TDD) where possible to build a comprehensive test suite.

The team decided to use a combination of manual and automated testing to get more comprehensive coverage and to make extending the system easier and safer. The team also carried out two rounds of user testing so that feedback from other people could be used to improve the system; these are described in the User Acceptance Testing section below.

Because the system is written in Python, all tests are written with Pytest, with the help of mocks. As an integral part of the system runs on the Azure VM, many tests have to be run manually to ensure the system is working as it should. The main focus of the tests is checking the system's logic when adding and removing items from the lists, making sure every item is accounted for and pushed to the database properly. The test documentation also follows the Pytest format.

To keep the system as readable as possible, we measured its cyclomatic complexity using radon, a Python cyclomatic complexity calculator. Running radon confirmed that all but one of the methods fall into category A (the lowest level of complexity), with the remaining method classified as category B. The complete complexity breakdown can be found in the source code.
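To illustrate what those letter grades mean, the sketch below is not radon itself but a simplified approximation built on Python's standard `ast` module: it scores each function as 1 plus its decision points and maps the score onto radon's documented rank bands (A: 1-5, B: 6-10, C: 11-20, D: 21-30, E: 31-40, F: 41+). The function names `complexity`, `rank` and `report` are hypothetical, and the scores will not match radon's in every case (radon handles some constructs differently). The real tool is usually run from the command line as `radon cc -s -a <path>`.

```python
from __future__ import annotations

import ast

# Decision points counted by this simplified approximation (not radon's exact rules).
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + number of decision points.

    Note: nodes of nested functions are also counted towards the outer function.
    """
    score = 1
    for node in ast.walk(func):
        if isinstance(node, _BRANCH_NODES):
            score += 1  # `elif` is a nested If, so it is counted here too
        elif isinstance(node, ast.BoolOp):
            score += len(node.values) - 1  # `a and b and c` adds two paths
    return score


def rank(score: int) -> str:
    """Map a complexity score onto radon's letter bands."""
    for upper, letter in ((5, "A"), (10, "B"), (20, "C"), (30, "D"), (40, "E")):
        if score <= upper:
            return letter
    return "F"


def report(source: str) -> dict[str, tuple[int, str]]:
    """Return {function_name: (score, rank)} for every function in `source`."""
    results = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = complexity(node)
            results[node.name] = (score, rank(score))
    return results


if __name__ == "__main__":
    sample = "def add_item(items, item):\n    if item is None:\n        return items\n    return items + [item]\n"
    print(report(sample))  # {'add_item': (2, 'A')}
```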

UNIT & INTEGRATION TESTING

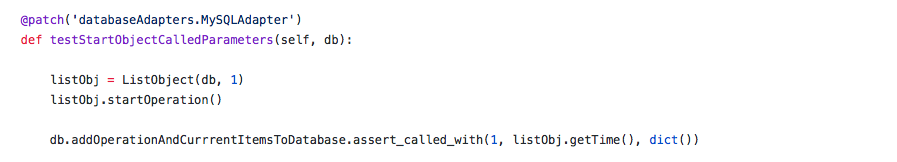

Unit and integration tests were all written in Python (specifically with Pytest), as the system's TensorFlow and PyQt components are both Python-based. The team decided to test the main ‘ListObject’ class, as that is where most of the project's logic is located. When writing tests, the team tried to cover every scenario that could break the code. This was done using test-driven development (TDD) to make sure our tests covered as much of the code as possible.
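The TDD approach described above can be sketched as follows. The real ‘ListObject’ API is not shown in this document, so the class below is a hypothetical stand-in (the method names `add_item` and `remove_item` and the use of a `Counter` are assumptions); the point is the shape of the tests, which are written first and pytest-style, so pytest collects any function named `test_*`. Under pytest the last test would more idiomatically use `pytest.raises(ValueError)`; plain `try`/`except` is used here so the sketch also runs without pytest installed.

```python
from collections import Counter


class ListObject:
    """Hypothetical stand-in for the real class: counts items on a list."""

    def __init__(self) -> None:
        self.items = Counter()

    def add_item(self, name: str) -> None:
        self.items[name] += 1

    def remove_item(self, name: str) -> None:
        # Reading a missing key from a Counter returns 0 without inserting it.
        if self.items[name] == 0:
            raise ValueError(f"{name!r} is not on the list")
        self.items[name] -= 1
        if self.items[name] == 0:
            del self.items[name]  # keep only items that are actually present


# Tests written before the implementation (TDD); pytest collects test_* functions.
def test_adding_same_item_twice_counts_two():
    lst = ListObject()
    lst.add_item("cup")
    lst.add_item("cup")
    assert lst.items["cup"] == 2


def test_removing_last_item_drops_it():
    lst = ListObject()
    lst.add_item("cup")
    lst.remove_item("cup")
    assert "cup" not in lst.items


def test_removing_missing_item_raises():
    lst = ListObject()
    try:
        lst.remove_item("cup")
    except ValueError:
        return
    raise AssertionError("expected ValueError for an item not on the list")
```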

All these tests were written with the help of mocks, which let us check which functions have been called. Because the system is tightly coupled to the database, the team abstracted all database queries into a single class, ‘MySQLAdapter’. This allowed us to mock the database and write comprehensive tests for the rest of the system.
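A minimal sketch of how the ‘MySQLAdapter’ abstraction makes the database mockable, using the standard library's `unittest.mock`. The adapter's method names (`insert_item`, `delete_item`), the `list_id` parameter, and passing the adapter into ‘ListObject’ through its constructor are all hypothetical assumptions for illustration. `create_autospec` builds a mock that rejects calls not matching the adapter's real method signatures, and `assert_called_once_with` checks that each change was pushed to the database.

```python
from unittest.mock import create_autospec


class MySQLAdapter:
    """Hypothetical interface; the real class wraps all MySQL queries."""

    def insert_item(self, list_id: int, name: str) -> None:
        raise NotImplementedError  # the real version would run an INSERT

    def delete_item(self, list_id: int, name: str) -> None:
        raise NotImplementedError  # the real version would run a DELETE


class ListObject:
    """Hypothetical list that pushes every change through the adapter."""

    def __init__(self, list_id: int, db: MySQLAdapter) -> None:
        self.list_id = list_id
        self.db = db
        self.items = []

    def add_item(self, name: str) -> None:
        self.items.append(name)
        self.db.insert_item(self.list_id, name)

    def remove_item(self, name: str) -> None:
        self.items.remove(name)  # raises ValueError if the item is absent
        self.db.delete_item(self.list_id, name)


def test_add_item_pushes_to_database():
    db = create_autospec(MySQLAdapter, instance=True)  # no real database needed
    lst = ListObject(list_id=1, db=db)
    lst.add_item("cup")
    db.insert_item.assert_called_once_with(1, "cup")
    db.delete_item.assert_not_called()


def test_remove_item_deletes_from_database():
    db = create_autospec(MySQLAdapter, instance=True)
    lst = ListObject(list_id=1, db=db)
    lst.add_item("cup")
    lst.remove_item("cup")
    db.delete_item.assert_called_once_with(1, "cup")
    assert lst.items == []
```

Keeping every query behind one class means only that class needs to touch MySQL, so everything else can be tested against the mock.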

AUTOMATED TESTING

Running the test suite with pytest allowed us to test the system automatically, which gave us confidence in the code that was written. It also ensured that adding new functionality did not break existing functionality, as any regression would cause previously written tests to fail.

As mentioned above, the tests were written in pytest with the help of the mock library. Mocks let us replace selected functionality with stand-ins whose behaviour we controlled, so each part of the system could be tested in isolation, which resulted in a very detailed test suite.
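As an example of replacing functionality with a controlled stand-in, the sketch below fakes the object detector so list-update logic can be tested without running a TensorFlow model or using a physical tray. The `detector` object with a `detect()` method and the `sync_tray` helper are hypothetical names, not the project's actual API. `Mock.side_effect` set to a list makes successive calls return successive values, simulating two camera frames.

```python
from __future__ import annotations

from unittest.mock import Mock


def sync_tray(detector, current: set[str]) -> tuple[set[str], set[str]]:
    """Hypothetical helper: compare fresh detections with the current list.

    Returns (added, removed) item names.
    """
    detected = set(detector.detect())
    return detected - current, current - detected


def test_sync_tray_reports_added_and_removed_items():
    # Stand-in for the detection model: two frames with fixed outputs.
    detector = Mock()
    detector.detect.side_effect = [["cup", "plate"], ["plate"]]

    added, removed = sync_tray(detector, current=set())
    assert added == {"cup", "plate"} and removed == set()

    added, removed = sync_tray(detector, current={"cup", "plate"})
    assert added == set() and removed == {"cup"}
    assert detector.detect.call_count == 2
```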

USER ACCEPTANCE TESTING

Users

Test Cases & Results

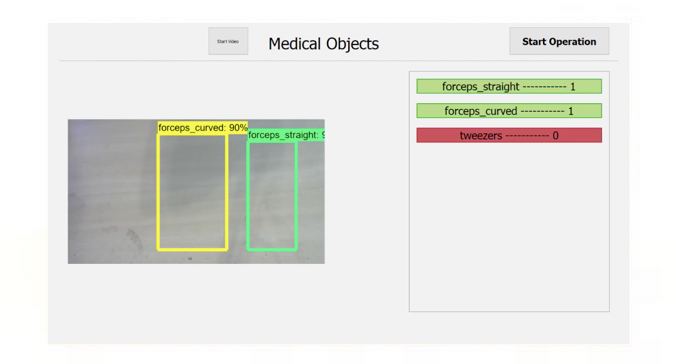

To test the application and understand how it works with multiple users, user testing was carried out in two rounds: the first at the beginning of March and the second in April to test the finalised system. This allowed the team to make the necessary changes to the application based on the feedback we received from users. Note that the detection and the user interface were tested separately in the first round, as they had not yet been integrated.

During testing, users were initially not given login details, in order to test the emergency login system and how intuitive it is. Users were also asked to work with the system by adding and removing objects from the tray to test the detection.

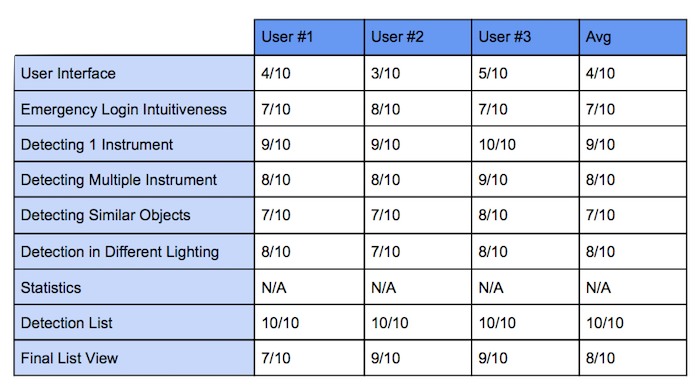

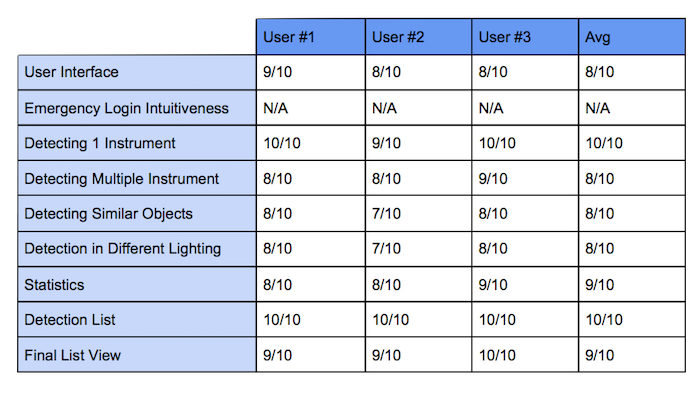

Below is a quantified summary of users' responses to the test cases:

ROUND 1 - MARCH

*It is important to note that the Statistics page had not yet been developed and therefore could not be scored.

Following the March tests, the team decided to redesign the user interface, as it received the lowest average score in the table; as a result, the user interface was completely changed. The team also added more functionality to the system, such as an interactive Final List page.

ROUND 2 - APRIL

*Emergency login could not be tested, as users were already aware of the functionality from the first round of testing.

As the chart shows, there were significant improvements, especially in the user interface.