Abstract

This project presents an advance in assistive technology through MotionInput, a software solution that makes computers and games accessible to people with disabilities using only a standard webcam. By removing the need for expensive, specialized hardware, MotionInput lowers the barrier to digital interaction for a large number of potential users and works with popular games and applications. Our initiative comprises two interconnected projects that aim to extend the impact and reach of MotionInput.

The first part focuses on making MotionInput-enabled applications more visible and easier to access, including but not limited to Minecraft, Rocket League, Tetris, and Batman, as well as tools such as In-Air Multitouch and Eye Gaze. We are developing a website portal generator that enables the rapid creation of dedicated websites. These portals share a consistent design and host manuals and instructions tailored to each application, explaining how MotionInput can be used for an enhanced gaming or computing experience.

The second part adds personalization and user-centric design by allowing individuals to specify their interaction preferences through voice commands, for example asking to play Tetris with arm and leg movements, or to use a two-finger pose to drop items. These preferences then override the existing configuration files. This customization ensures that MotionInput not only broadens access but also adapts to each user's needs and comfort, making digital engagement more intuitive and enjoyable.

Note 1: Users and markers should recognize that our portfolio is split to address these two projects separately. Bear in mind that the two projects serve completely different functions and are linked only by the broader goal of accessibility and of helping users understand and play with MotionInput. In most places, each section is divided into two parts, one for each project. Our work reflects a commitment to inclusivity, aiming to make the digital landscape a space where everyone, regardless of physical ability, can participate fully and joyfully.

Note 2: Our second part is integrated into the work of a final-year Computer Science student working on MotionInput. However, all the algorithms and implementation details are fully discussed in this portfolio.

Designing and Publishing the User Guidance Website

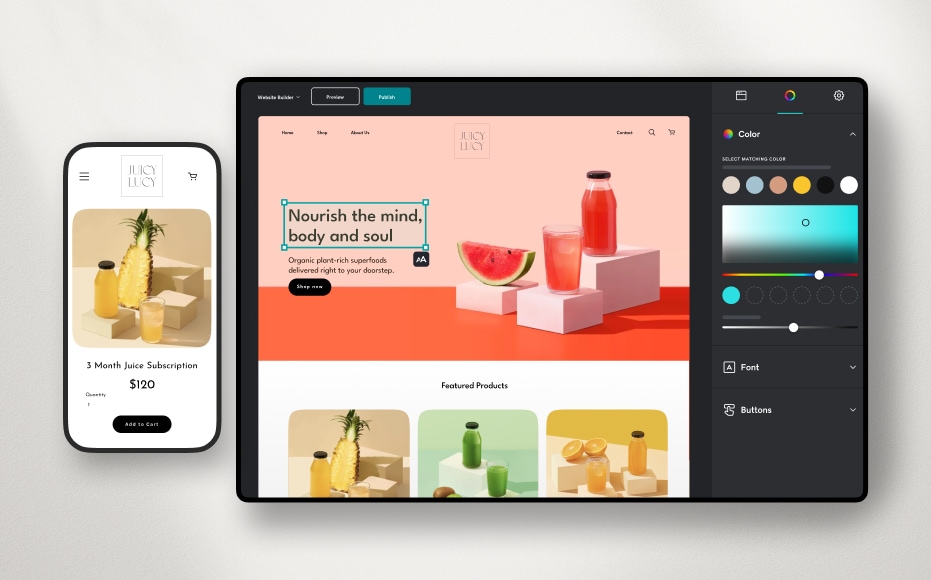

Our goal is to develop a generator template for a series of web presences, one for each of the MI34 project case studies. This involves creating user guides, demonstration videos, and a user registration system. A key aspect of the project is the integration of accessibility features such as eye gaze and facial navigation, which makes our solutions more inclusive. We are also coordinating with UCL to deploy apps across platforms such as Steam, Epic Games, and the Windows Store.

- Form-like Generator that Creates a JSON File to Combine with the Template

- Accessible Website Features

- Continuous Integration of User Feedback
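The source does not show the generator's code, so the following is only a minimal sketch of the "form answers → JSON → shared template" idea, written in Python for illustration. The field names (`title`, `description`, `steps`), the HTML template and the functions `form_to_json` and `render_page` are all hypothetical and are not taken from the actual generator.

```python
import html
import json
from string import Template

# Hypothetical shared page template; the real generator's template is not shown in the source.
PAGE_TEMPLATE = Template("""<!DOCTYPE html>
<html lang="en">
<head><title>$title</title></head>
<body>
<h1>$title</h1>
<p>$description</p>
<ol>
$steps
</ol>
</body>
</html>""")


def form_to_json(title, description, steps):
    """Serialize the answers from a form-like generator into a JSON string (hypothetical fields)."""
    return json.dumps({"title": title, "description": description, "steps": steps}, indent=2)


def render_page(config_json):
    """Combine one JSON config with the shared template to produce a single portal page."""
    config = json.loads(config_json)
    # Escape user-supplied text so it cannot break the generated HTML.
    steps_html = "\n".join(f"<li>{html.escape(step)}</li>" for step in config["steps"])
    return PAGE_TEMPLATE.substitute(
        title=html.escape(config["title"]),
        description=html.escape(config["description"]),
        steps=steps_html,
    )
```

For example, `render_page(form_to_json("Tetris with MotionInput", "Play Tetris using body movements.", ["Start MotionInput", "Open Tetris"]))` returns a page whose heading is the title and whose ordered list holds the two steps. Keeping each application's content in its own JSON file while all pages share one template is one way to obtain the consistent design across portals that the project aims for.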

Our journey so far has been a blend of technical challenges and learning opportunities. Each week brings us closer to our goal, teaching us the value of collaboration, planning, and user-centric design. As we move forward, we anticipate delving deeper into the development phase, where our ideas will start taking a tangible form.

Creating Speech Recognition that Automates User Gameplay Preferences

In this project, we develop scripts and a dedicated language model within MotionInput that allow users to customize which body parts control each game using voice commands, extending its accessibility options. Our approach is to modify the existing configuration files within MotionInput to tailor how each game functions and which controls are mapped to which body parts. To recognize each spoken word and its intended action accurately, we developed a new Named Entity Recognition (NER) model that identifies specific terms and phrases related to gaming and real-life actions.

- Modifying existing configuration files in MotionInput to adapt game functionality to user preferences.

- Creating a new language model to identify specific gaming and real-life action terms and phrases in user speech.

- This work is being integrated into a third-year student's dissertation project, so deploying it is outside our scope.
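The trained NER model and MotionInput's real configuration format are not shown here, so the sketch below only illustrates the overall flow of turning a transcribed voice command into a configuration override. It swaps the trained model for a plain dictionary lookup, and the entity labels (`GAME`, `BODY_PART`, `ACTION`), the vocabularies, the config keys (`game`, `body_parts`, `mappings`) and both functions are hypothetical assumptions made for illustration.

```python
import json
import re

# Hypothetical vocabularies; a dictionary lookup stands in for the trained NER model.
GAMES = {"tetris", "minecraft", "rocket league"}
BODY_PARTS = {"arm", "leg", "head", "hand", "two-finger pose"}
ACTIONS = {"drop", "jump", "rotate", "move"}


def extract_entities(utterance):
    """Tag known game, body-part and action phrases, returned in the order they were spoken."""
    text = utterance.lower()
    found = []
    for label, vocab in (("GAME", GAMES), ("BODY_PART", BODY_PARTS), ("ACTION", ACTIONS)):
        for phrase in vocab:
            # Whole-word match, allowing a simple plural such as "arms".
            for match in re.finditer(r"\b" + re.escape(phrase) + r"s?\b", text):
                found.append((match.start(), phrase, label))
    return [(phrase, label) for _, phrase, label in sorted(found)]


def apply_preferences(config, entities):
    """Return an updated copy of a (hypothetical) game config; the input config is left unchanged."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round-trip
    body_idx = [i for i, (_, label) in enumerate(entities) if label == "BODY_PART"]
    for i, (phrase, label) in enumerate(entities):
        if label == "GAME":
            updated["game"] = phrase
        elif label == "ACTION" and body_idx:
            # Bind each action to the body part mentioned closest to it in the command.
            nearest = min(body_idx, key=lambda j: abs(j - i))
            updated.setdefault("mappings", {})[phrase] = entities[nearest][0]
    if body_idx:
        updated["body_parts"] = sorted({entities[j][0] for j in body_idx})
    return updated
```

With a base config such as `{"game": "tetris", "body_parts": ["hand"], "mappings": {"drop": "hand"}}`, the command "Play Tetris using a two-finger pose to drop items" rebinds `drop` to `two-finger pose`. The updated dictionary could then be written back over the existing file with `json.dump`, which corresponds to the override step described above.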

Video

Here is our 8-minute video demo.

Matthew's Web Portal

This is where we showcase our websites. We worked alongside Matthew Peniket, a Master's student who created a web portal to host our generated websites, so that it works like a catalogue for browsing the different builds.

Team 21

Introducing the team for the MotionInput Web Portal Generator

Tatsan Kantasit (Scooter)

Team Lead, UI Designer, Partners Liaison, MotionInput Developer, Front-End Developer, Researcher

Jia Yi Chia

Programmer, UI Evaluator, Front-End Developer, Video Editor