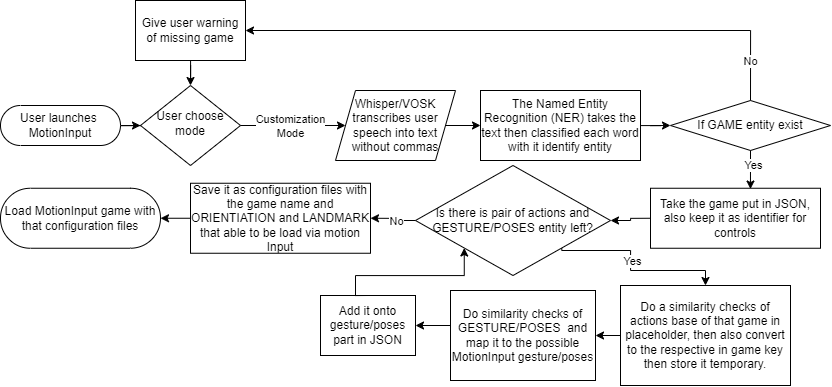

Flowchart

Intended Usage

Designing the System

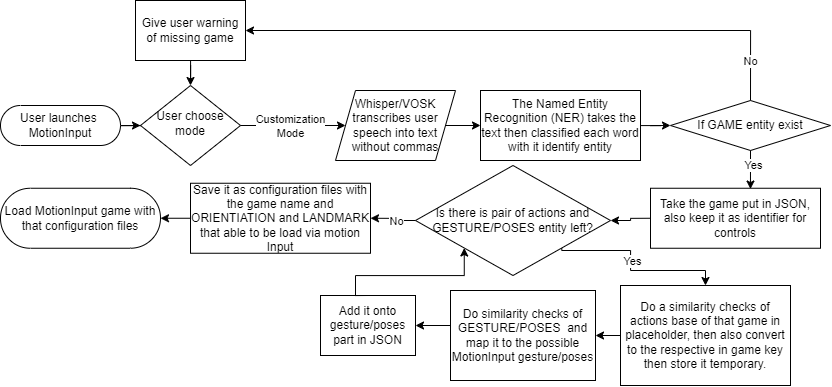

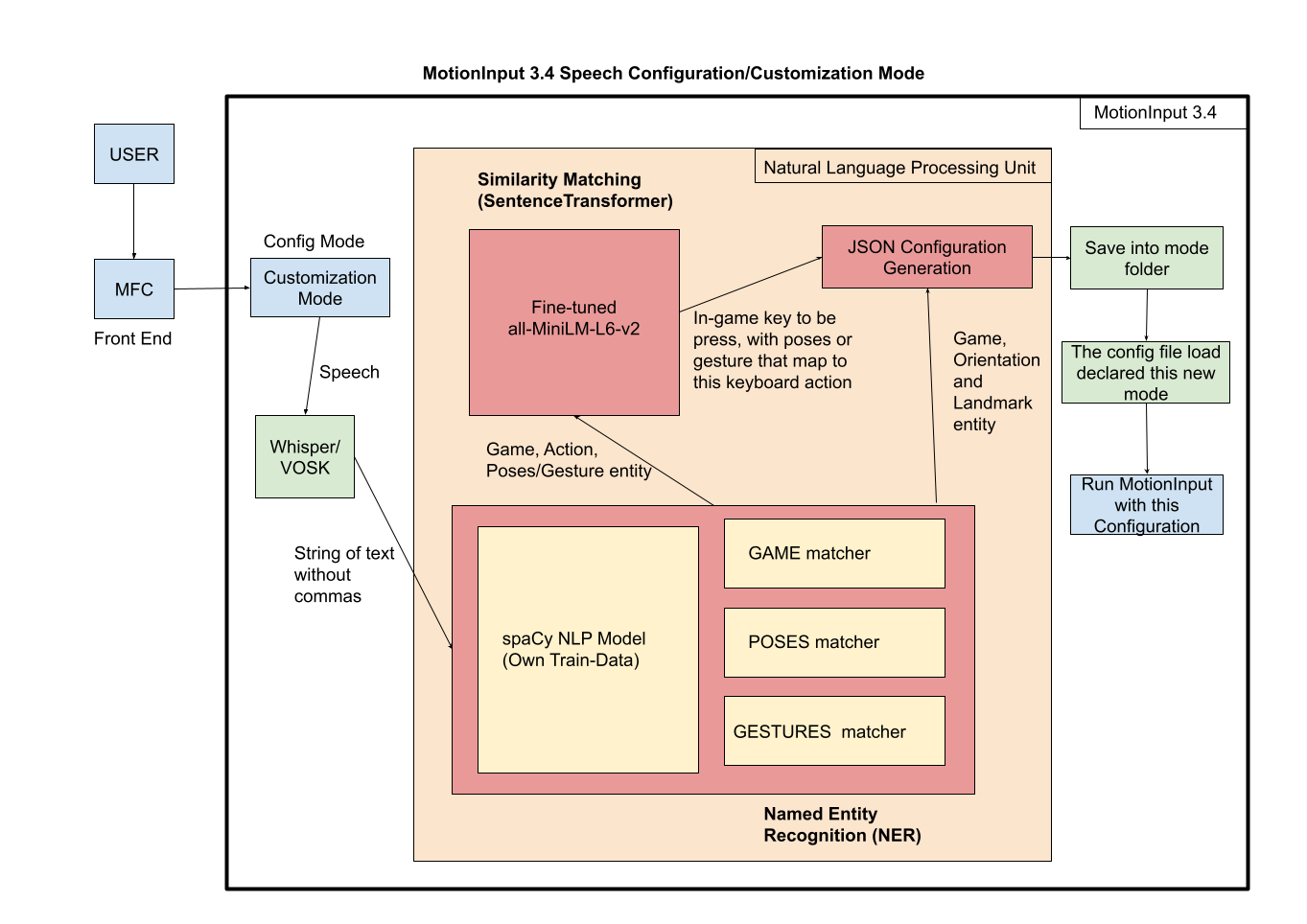

This segment of the MotionInput system uses natural language processing (NLP) to let users customize their gaming controls with voice commands. The system architecture diagram shows how spoken words are converted into game controls, which improves accessibility and the gaming experience.

Users first select this customization mode through the MotionInput front end, which is built with the Microsoft Foundation Class (MFC) library, and then give their instructions as voice commands. These commands arrive as raw audio that the system must process and interpret. The "Whisper/VOSK" component is the speech recognition stage: it transcribes the spoken words into text. The transcript does not need to follow any specific syntax; in particular, commands do not have to be separated by commas.
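Because the transcript has no commas, the NLP unit has to work out for itself where one command ends and the next begins. The minimal sketch below assumes that a new command starts wherever a known motion phrase begins. `MOTION_PHRASES` and `split_commands` are hypothetical illustrations, not MotionInput's actual `_predict_without_comma` logic or data.

```python
# Hypothetical motion vocabulary; the real lists (e.g. available_gestures,
# available_poses) are part of MotionInput's data and are not reproduced here.
MOTION_PHRASES = ["left hand up", "right hand up", "jump", "lean left", "lean right"]


def split_commands(transcript: str, motion_phrases: list[str]) -> list[str]:
    """Split a comma-free transcript into one segment per command.

    A new segment starts wherever a known motion phrase begins, so
    "jump to shoot lean left to move left" yields two segments.
    """
    words = transcript.lower().split()
    # Try longer phrases first so "left hand up" wins over any shorter
    # phrase that shares its first word.
    phrases = sorted((p.split() for p in motion_phrases), key=len, reverse=True)
    starts = []
    i = 0
    while i < len(words):
        match = next((p for p in phrases if words[i:i + len(p)] == p), None)
        if match:
            starts.append(i)
            i += len(match)
        else:
            i += 1
    if not starts:
        # No motion phrase found: treat the whole transcript as one segment.
        return [" ".join(words)] if words else []
    # Any words before the first motion phrase join the first segment.
    starts[0] = 0
    bounds = starts + [len(words)]
    return [" ".join(words[a:b]) for a, b in zip(bounds, bounds[1:])]


print(split_commands("Jump to shoot lean left to move left", MOTION_PHRASES))
```

This naive rule breaks down when a word is both a motion and an action in the vocabulary; it only illustrates the segmentation problem that comma-free input creates.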

The core of the system is the NLP unit, which processes the transcribed text.
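One task of the NLP unit is matching a spoken motion description against the motions MotionInput supports, by cosine similarity between sentence embeddings (the role of `_similarities_match` and `motion_to_action_mapping` in the class summary). The sketch below shows that matching step. As an assumption for the sake of a dependency-free example, it replaces SentenceTransformer embeddings with toy bag-of-words count vectors; `embed`, `cosine`, and `best_match` are hypothetical names.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a sentence embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_match(target: str, candidates: list[str]) -> tuple[str, float]:
    """Return the candidate most similar to the target, with its score.

    Raises ValueError if candidates is empty; ties go to the earlier candidate.
    """
    t = embed(target)
    scored = [(c, cosine(t, embed(c))) for c in candidates]
    return max(scored, key=lambda pair: pair[1])


print(best_match("raise my left hand", ["left hand up", "right hand up", "jump"]))
```

Real sentence embeddings would also match paraphrases that share no words with the candidate (e.g. "lift" versus "raise"), which is the reason to use a model rather than word overlap.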

After processing the voice command, the system generates a JSON configuration file. This file contains the in-game key mappings and the recognized entities, such as game names, orientations, and body landmarks. This is where the outputs of named entity recognition (NER) are translated into actual control configurations. The Mode Config is a by-product of this process, possibly a mode state or profile that the user can switch to within the MotionInput system.
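To make the idea of such a file concrete, here is a minimal sketch of building one, loosely mirroring the roles of `initialize_output_structure` and `predict_to_json`. The schema (`game`, `mappings`, `landmark`, `orientation`, `action`, `key`), the helper names, and the example values are all hypothetical; the actual format MotionInput uses is not specified here.

```python
import json


def initialize_output(game: str) -> dict:
    """Return an empty configuration for one game (hypothetical schema)."""
    return {"game": game, "mappings": []}


def add_mapping(config: dict, landmark: str, orientation: str, action: str, key: str) -> None:
    """Record one recognised command as a motion -> in-game action -> key binding."""
    config["mappings"].append(
        {"landmark": landmark, "orientation": orientation, "action": action, "key": key}
    )


# Illustrative values only; they are not taken from MotionInput's data files.
config = initialize_output("example_game")
add_mapping(config, "left hand", "up", "jump", "space")
add_mapping(config, "torso", "left", "move left", "a")
config_text = json.dumps(config, indent=2)
print(config_text)
```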

Once the JSON file is generated, it is used to reconfigure the MotionInput system so that game controls follow the mappings described by the voice commands. The diagram does not show this JSON file being used explicitly, but it implies that the user then runs MotionInput with the newly created configuration. The output to the user is left open and drawn as a cloud symbol in the diagram: the user experiences the effects of the configuration through the updated behavior of the MotionInput system. Dynamic loading of the JSON configuration is part of the dissertation work of Joseph, one of our MotionInput seniors, who integrated our component into it.
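On the consuming side, a configuration only needs to become a lookup that can be queried whenever a motion is detected. The sketch below assumes a hypothetical file with a `mappings` list whose entries carry `landmark`, `orientation`, and `key` fields; it is an illustration of the idea, not a description of the loading code in Joseph's work.

```python
import json
from typing import Optional


def load_bindings(config_text: str) -> dict[tuple[str, str], str]:
    """Parse a (hypothetical) config into a (landmark, orientation) -> key lookup."""
    config = json.loads(config_text)
    return {
        (m["landmark"], m["orientation"]): m["key"]
        for m in config.get("mappings", [])
    }


def key_for(bindings: dict[tuple[str, str], str], landmark: str, orientation: str) -> Optional[str]:
    """Return the key bound to a detected motion, or None if the motion is unbound."""
    return bindings.get((landmark, orientation))


sample = '{"game": "example_game", "mappings": [{"landmark": "left hand", "orientation": "up", "key": "space"}]}'
bindings = load_bindings(sample)
print(key_for(bindings, "left hand", "up"))
```

Reloading then amounts to re-running `load_bindings` on the new file and swapping the lookup, which is one simple way a configuration could be applied without restarting.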

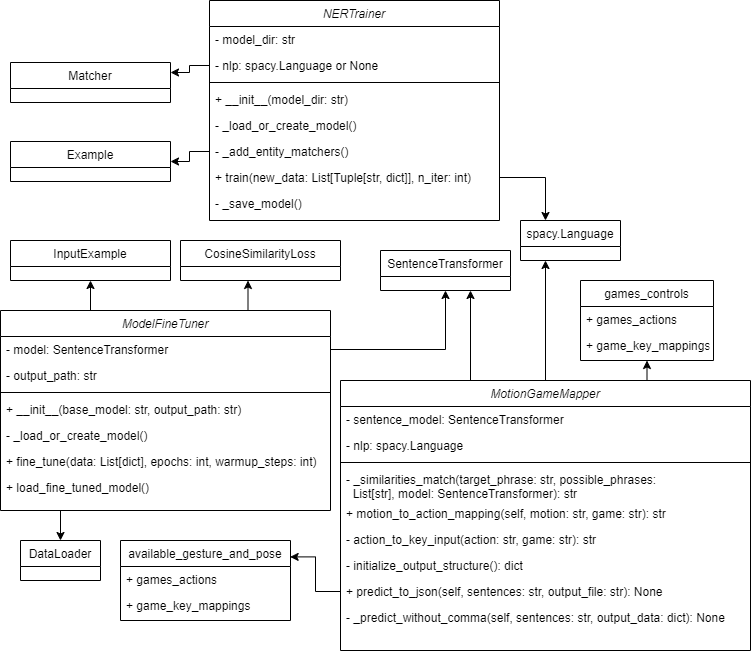

Model fine-tuning class:

- `__init__`: Constructor that initializes the SentenceTransformer with the specified base model and sets the output path for the fine-tuned model.
- `prepare_data`: Converts a list of dictionaries containing sentence pairs and similarity scores into a DataLoader of InputExample objects.
- `fine_tune`: Fine-tunes the model on the provided dataset and saves the fine-tuned model to the output path.
- `load_fine_tuned_model`: Loads the fine-tuned model from the output path into the model attribute.

Dependencies:

- `SentenceTransformer`, `InputExample`, and `losses.CosineSimilarityLoss` from the `sentence_transformers` library.
- `DataLoader` from `torch.utils.data` for creating training data batches.
- `pandas.DataFrame` for handling the input data and converting it into the format required for model training.

Voice-command processing class:

- `__init__`: Constructor that initializes the SentenceTransformer model and the spaCy NER model.
- `_similarities_match`: Static method that calculates the cosine similarity between a target phrase and a list of possible phrases using embeddings.
- `motion_to_action_mapping`: Maps a user motion to an in-game action using the similarity match function.
- `action_to_key_input`: Static method that maps a game action to the corresponding keyboard input.
- `initialize_output_structure`: Static method that initializes the data structure for the output JSON.
- `predict_to_json`: Processes sentences to predict game-related actions and writes the result to a JSON file.
- `_predict_without_comma`: Processes input sentences to identify actions without requiring comma separation.

Dependencies:

- `SentenceTransformer` for sentence embeddings.
- `spacy.Language` for NER processing.
- `games_actions`, `game_key_mappings`, `available_gestures`, and `available_poses` for data processing.

NER model class:

- `__init__`: Constructor that initializes `model_dir` and sets up the NER model by calling `_load_or_create_model` and `_add_entity_matchers`.
- `_load_or_create_model`: Private method that loads an existing spaCy model from the specified directory, or creates a new one if it does not exist.
- `_add_entity_matchers`: Private method that adds custom entity matcher components to the NLP pipeline if they have not already been added.
- `train`: Public method that trains the NER model using the provided training data and iteration count. It shuffles the data, updates the model with the examples, and then saves the model using `_save_model`.
- `_save_model`: Private method that saves the trained NER model to disk.

Dependencies:

- The `spacy.Language` class.
- `spacy.training.Example` for training data examples.
- `spacy.matcher.Matcher` and `spacy.util.filter_spans` for entity matching.
- `TRAIN_DATA` for training.
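`_add_entity_matchers` relies on `spacy.matcher.Matcher` and `spacy.util.filter_spans`. Rule-based matches can overlap (for example, "left" as an orientation inside "left hand" as a landmark), while a token can belong to only one named entity, so overlaps presumably have to be resolved before matches become entities. spaCy's `filter_spans` does this by preferring the longest span. The sketch below re-implements that selection rule in plain Python on `(start, end, label)` tuples for illustration only; it is not spaCy's source, and the entity labels are hypothetical.

```python
Span = tuple[int, int, str]  # (start_token, end_token_exclusive, label)


def filter_overlapping_spans(spans: list[Span]) -> list[Span]:
    """Keep the longest non-overlapping spans; among equal lengths, the earlier wins.

    Returns the surviving spans sorted by start position.
    """
    # Longest first; equal lengths ordered by start position.
    ordered = sorted(spans, key=lambda s: (-(s[1] - s[0]), s[0]))
    taken: set[int] = set()
    kept: list[Span] = []
    for start, end, label in ordered:
        tokens = range(start, end)
        if not any(t in taken for t in tokens):
            kept.append((start, end, label))
            taken.update(tokens)
    return sorted(kept)


# Tokens: 0 "raise", 1 "left", 2 "hand", 3 "up", 4 "to", 5 "jump"
matches = [(1, 2, "ORIENTATION"), (1, 3, "LANDMARK"), (5, 6, "ACTION"), (2, 3, "LANDMARK")]
print(filter_overlapping_spans(matches))
```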