UI Design

Human-Computer Interactions

User Interviews

Interview 1 - Client

How would you like users to interact with our section of MotionInput?

Imagine a user saying, "I want to play Minecraft with my right arm and want to jump when I pose my thumb down, and I want to do an index pinch to place a block down." This verbal command would directly create a configuration file that runs MotionInput with Minecraft, recognizing the right hand as the control landmark. When the user performs the specified gestures, they are translated into in-game actions without the need for a traditional UI. This approach is not just an alternative to the MotionInput system but an extension of it, leveraging the existing framework developed by other teams. Crucially, a word or command that is not directly related to an in-game action should produce no match. The system should also be extensible, so that it can accommodate additional games integrated into MotionInput in the future.
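
As a rough illustration of the flow described above, the sketch below turns an already-parsed command into a configuration dictionary. The function name `build_config` and the keys `game`, `control_landmark`, and `bindings` are hypothetical; MotionInput's actual configuration format is not shown in this document.

```python
import json


def build_config(game, control_landmark, bindings):
    """Assemble a JSON-serialisable config from the parts of a parsed command.

    The keys used here are illustrative assumptions, not MotionInput's schema.
    """
    return {
        "game": game,
        "control_landmark": control_landmark,
        # Each binding pairs a detected gesture with an in-game action.
        "bindings": [
            {"gesture": gesture, "action": action}
            for gesture, action in bindings
        ],
    }


# The example command from the interview, expressed as parsed parts.
config = build_config(
    game="Minecraft",
    control_landmark="right_hand",
    bindings=[("thumb_down", "jump"), ("index_pinch", "place_block")],
)
print(json.dumps(config, indent=2))
```

Keeping the output as plain JSON would let the file be generated and read entirely on the user's device, in line with the offline requirement.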

Are there specific things that I should avoid to prevent disrupting MotionInput?

Yes, it's imperative that the model we develop is lightweight to seamlessly fit within the MotionInput ecosystem. This means it needs to be compact enough to operate efficiently on a laptop, given that MotionInput functions offline. It's essential that the model resides on the user's device and does not rely on external services like ChatGPT, which require an internet connection. Ensuring the model's efficiency and self-containment will prevent any disruptions to MotionInput's performance and user experience.

Interview 2 - User

Do you prefer long or short sentences when giving voice commands?

I prefer using long sentences. It's because I'm usually in a hurry to get things set up the way I want, and long sentences help me convey exactly what I need all at once. Using short sentences feels more like I'm just following instructions, rather than having a tool that adapts to my needs and understands me. It's important that the system can grasp the whole context of what I'm saying in one go, making the process feel more like a conversation and less like a series of commands.

Do you know every specific term related to each game?

I know the controls and actions for games like Minecraft pretty well, but I might not always remember to use the standard terms for each command. Sometimes, I find it easier to use synonyms or describe the action in my own words. It would be great if the system could understand these variations and interpret them correctly, matching my description to the actual game control. This flexibility would really help, especially since remembering the exact terminology for every action can be challenging. It's about making the system smart enough to know what I mean, even when I don't use the exact game-specific term.

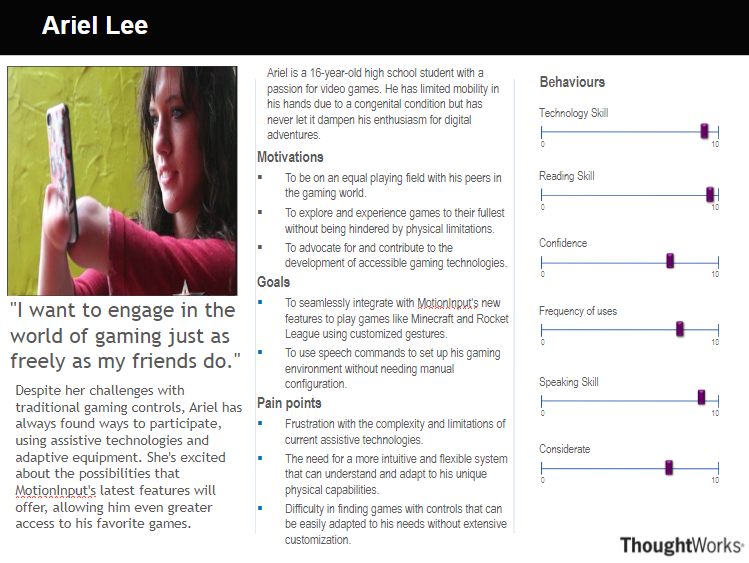

Personas

Sketches

Not applicable, since MotionInput already provides the user interface.

Initial Prototype

Prototype Objectives

The initial prototype aims to establish a foundational approach to entity recognition in text, identifying key entities such as games, actions, and other relevant terms without using custom-trained models. This stage uses spaCy's pre-trained models to recognize general-purpose entities, providing a baseline for understanding how entities are extracted and laying the groundwork for future customization and refinement.

Code Snippets and Explanations

Importing Necessary Libraries

    import spacy
    import json

Loading spaCy's Pre-trained Model

    # Small English pipeline; it must be installed locally before it can be loaded.
    nlp = spacy.load("en_core_web_sm")

Entity Recognition Function

    def recognize_entities(sentence):
        """Return the entities spaCy finds in the sentence as a list of dicts."""
        doc = nlp(sentence)
        entities = []
        for ent in doc.ents:
            entities.append({"text": ent.text, "entity": ent.label_})
        return entities

Example Usage

    sentence = "I want to play Minecraft."
    entities = recognize_entities(sentence)
    print(json.dumps(entities, indent=2))

Discussion

The initial prototype serves as a crucial step in the development process, providing insights into the capabilities of pre-trained models for entity recognition and setting a baseline for future enhancements. It highlights the importance of starting with a simple, manageable scope that can be incrementally improved upon, especially when developing complex systems like NER models tailored to specific domains. As the project progresses, the insights gained from this prototype will guide the customization of the NER model, including the creation of domain-specific entity types and the training of the model to accurately recognize them. This iterative approach ensures a solid foundation for the project, allowing for focused improvements and refinements based on initial findings and feedback.
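
To make the idea of domain-specific entity types concrete before any model is trained, the sketch below uses a plain-Python gazetteer lookup as a stand-in for a custom NER model. It is not spaCy code and not the project's implementation; the labels `GAME`, `GESTURE`, and `ACTION` and the phrase list are assumptions chosen for illustration. The output shape mirrors the prototype's `{"text": ..., "entity": ...}` dictionaries.

```python
import re

# Hypothetical gazetteer: phrase -> entity label (labels are assumed, not from the project).
GAZETTEER = {
    "minecraft": "GAME",
    "thumb down": "GESTURE",
    "index pinch": "GESTURE",
    "jump": "ACTION",
    "place a block": "ACTION",
}


def recognize_domain_entities(sentence):
    """Return gazetteer phrases found in the sentence, in order of appearance."""
    found = []
    for phrase, label in GAZETTEER.items():
        # \b keeps "jump" from matching inside longer words such as "jumper".
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, sentence, flags=re.IGNORECASE):
            found.append((match.start(), {"text": match.group(), "entity": label}))
    # Sort by character offset so entities come back in reading order.
    return [entity for _, entity in sorted(found, key=lambda item: item[0])]


entities = recognize_domain_entities(
    "I want to play Minecraft and jump when I pose my thumb down."
)
```

A sentence containing none of the listed phrases returns an empty list, which matches the requirement that unrelated words should produce no match.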

Prototype Evaluation Table

| Criteria | Problems | Solution | Severity (0-4) |
|---|---|---|---|
| 1. Contextual Understanding | Difficulty in comprehending long, contextual sentences for voice commands. | Enhance NLP capabilities to parse and understand long sentences, ensuring the system grasps the entire context. | 3 |
| 2. Terminology Variance | Inconsistent recognition of synonyms or varied terms used in voice commands. | Implement a synonym recognition module that can map user-described actions to standard game controls. | 2 |
| 3. Speech-to-Configuration Efficiency | Slow conversion of verbal commands into a functional configuration file. | Optimize the processing pipeline to quickly generate configuration files from spoken instructions. | 1 |
| 4. System Extensibility | Limited support for additional games and actions in the voice-command system. | Develop a modular design that allows for easy integration of new games and actions into the system. | 2 |
| 5. Lightweight Model Design | Current model too heavy, causing latency and inefficiency for offline use. | Refactor the model to streamline its architecture, ensuring it remains lightweight and efficient for laptops. | 3 |
| 6. Offline Functionality | Dependency on external services for voice command interpretation. | Create an offline-capable voice processing unit to ensure the system can operate without internet access. | 4 |
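
The synonym recognition module proposed for criterion 2 could start as a simple lookup from user phrasings to canonical actions. The sketch below is a minimal version under that assumption; the synonym table and the action names `jump` and `place_block` are invented for illustration and would need to come from each game's real control list.

```python
# Hypothetical synonym table: canonical action -> phrasings a user might say.
SYNONYMS = {
    "jump": {"jump", "hop", "leap"},
    "place_block": {"place a block", "place a block down", "put a block down"},
}

# Invert the table once so each lookup is a single dictionary access.
PHRASE_TO_ACTION = {
    phrase: action
    for action, phrases in SYNONYMS.items()
    for phrase in phrases
}


def normalize_action(phrase):
    """Map a user phrase to a canonical action, or None if nothing matches."""
    # Lowercase and collapse repeated whitespace before the lookup.
    key = " ".join(phrase.lower().split())
    return PHRASE_TO_ACTION.get(key)


print(normalize_action("Hop"))
print(normalize_action("open the fridge"))
```

Because the table is keyed by game action, supporting a new game would mean adding another table rather than changing the lookup code, which also speaks to the extensibility criterion. Exact-phrase lookup will miss free-form descriptions, so it is best read as a baseline rather than a full solution.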