Architecture

Before it was constructed, the Depth Sensing Surgical System designed and built by Team 19 went through two design phases:

-User Interface Design

-Object-Oriented Design

The object-oriented design phase was an important turning point in the project. A poor design would make it extremely difficult for future developers to extend our work, because they would first have to work out how everything fitted together before they could add anything to it.

Our goal was therefore to develop an architecture that was simple to understand but, above all, easy to extend, so that future developers could readily improve on our system.

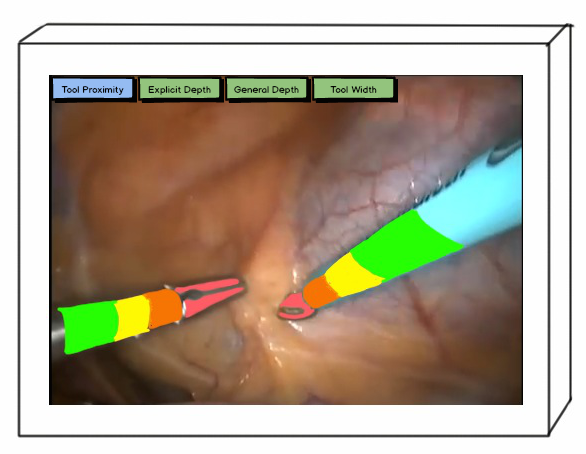

Our designs for the system were to have the main camera feed present on the screen at all times, and several buttons which when pressed would apply visual augmentations to the camera feed which would intuitively provide depth feedback to the surgeon so that he could judge the whereabouts of his tools in relation to the body. A sketch of what this may look like is shown below.

As can be seen, the augmentations available to the surgeon are shown along the top of the camera feed. These augmentations are the core of our design: they are what actually gives the surgeon depth feedback, and they are where the team hopes future developers will focus, devising new augmentations and new intuitive ways to present depth information to the surgeon.

Our challenge when designing the architecture was therefore this: how do we design software that is easy for future developers to understand and extend, and that allows the system's behaviour to change at runtime whenever the surgeon switches augmentation? The answer was to use a well-known design pattern: the Strategy pattern.

The Strategy Design Pattern

The Strategy pattern is simple to understand and powerful to use. Its purpose is to allow a program’s behaviour to change at runtime. In the Depth Sensing Surgical System, for example, when the user selects an option that augments the camera feed, the augmentation algorithm is one of several “strategies”, each of which applies a different augmentation to the feed. Another example is a computer playing chess: when the user selects a difficulty level, each level can be thought of as a separate strategy, because the computer’s behaviour changes based on the option that has been selected.

So how does the Strategy pattern allow behaviour to be changed at runtime? It names a family of algorithms through an interface; for example, an interface for a chess-playing algorithm might be called “IChessDifficulty”. The developers building the system would then write several algorithms that correspond to different levels of difficulty and all implement this interface, for example “EasyDifficulty implements IChessDifficulty” and “HardDifficulty implements IChessDifficulty”.

The main class of the chess program then declares a variable that holds the current difficulty, which would look something like “IChessDifficulty difficulty = null;”. When the program runs, the user selects a difficulty level and the corresponding strategy is assigned to this “difficulty” variable. Because every difficulty strategy implements the “IChessDifficulty” interface, any one of them can be assigned to it. For example, if the user selects Hard, the following line runs: “difficulty = new HardDifficulty()”. If the user then decides that Hard is too difficult, they can switch to Easy, at which point “difficulty = new EasyDifficulty()” runs. The same IChessDifficulty variable refers to whichever strategy is currently selected, which is what allows the behaviour to change at runtime.
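The chess example can be sketched as follows. The sketch is in Java, whose interface syntax closely mirrors C#; the `searchDepth` method, the `ChessGame` class and `describeNextMove` are hypothetical names added only to give the strategies some observable behaviour.

```java
// Strategy interface: one implementation per difficulty level.
interface IChessDifficulty {
    // How many moves ahead the computer looks (illustrative behaviour only).
    int searchDepth();
}

class EasyDifficulty implements IChessDifficulty {
    public int searchDepth() { return 1; }
}

class HardDifficulty implements IChessDifficulty {
    public int searchDepth() { return 4; }
}

// Context class: holds one IChessDifficulty reference and delegates to it,
// so assigning a different strategy changes behaviour at runtime.
class ChessGame {
    private IChessDifficulty difficulty = null;

    void setDifficulty(IChessDifficulty difficulty) {
        this.difficulty = difficulty;
    }

    String describeNextMove() {
        if (difficulty == null) {
            throw new IllegalStateException("no difficulty selected");
        }
        return "searching " + difficulty.searchDepth() + " move(s) ahead";
    }
}
```

Calling `setDifficulty(new HardDifficulty())` and later `setDifficulty(new EasyDifficulty())` on the same `ChessGame` changes what `describeNextMove()` reports without any change to `ChessGame` itself.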

Depth Sensing Surgical System and the Strategy Pattern

The team wished to apply this pattern to our system: if each augmentation was an implementation of an “IVisualAugmenter” strategy, then changing which augmentation is applied to the camera feed at runtime would be easy. As the team set about designing this, it realised that the Strategy pattern could be applied to more than just the visual augmentations. It could be applied to ToolIdentifiers, so that future developers could devise new methods of identifying surgical tools, make them implementations of the ToolIdentifier strategy, and have them work with the system. It could also be applied to Controllers, the different strategies that control the system. Having Controller strategies means the system can be controlled in multiple ways, for example through voice control or through simple click commands.

It may seem excessive to apply the Strategy pattern to the system's controls as well, but since the system could plausibly be controlled by clicking at the endoscope terminal, by voice commands or remotely through an application, there is a strong argument that it is not. This argument is discussed further in the Evaluation section.

Use of the Strategy design pattern meets all of our goals for the architecture of this system. To develop a new VisualAugmenter algorithm, a future developer simply adds the new class to the VisualAugmenter folder of the system, adds a button to the UI to turn the augmentation on and off, and adds an event handler for that button. New ToolIdentifiers and Controllers are added by the same, remarkably simple, process. There is no need to understand every part of the code before extending it: the developer creates a class that implements one of the strategy interfaces and sets one of the UI buttons to turn it on and off. This meets the team’s goal of an architecture that is easy for future developers to understand and even easier to extend with new strategies.
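The two steps for a new augmentation might look like this for a hypothetical `InvertAugmenter`. This is a Java sketch rather than the system's C#, and `InvertAugmenter`, `NoAugmentation`, `AugmentationHost` and `invertButtonClicked` are illustrative names, not classes from the real code; 4-byte BGRA pixels are assumed.

```java
// Strategy interface, reduced to the one method this example needs.
interface IVisualAugmenter {
    byte[] augmentFeed(byte[] pixels);
}

// Default strategy: passes the feed through unchanged.
class NoAugmentation implements IVisualAugmenter {
    public byte[] augmentFeed(byte[] pixels) {
        return pixels.clone();
    }
}

// Step 1: the new augmentation is just a new class implementing the interface.
// This one inverts the colour channels of BGRA pixels, leaving alpha alone.
class InvertAugmenter implements IVisualAugmenter {
    public byte[] augmentFeed(byte[] pixels) {
        byte[] out = pixels.clone();
        for (int i = 0; i < out.length; i++) {
            if (i % 4 != 3) { // every fourth byte is alpha
                out[i] = (byte) (255 - (pixels[i] & 0xFF));
            }
        }
        return out;
    }
}

// Minimal stand-in for the system class: it only tracks the active augmenter.
class AugmentationHost {
    private final IVisualAugmenter noAugmentation = new NoAugmentation();
    private final IVisualAugmenter invert = new InvertAugmenter();
    private IVisualAugmenter feedAugmenter = noAugmentation;

    void setAugmenter(IVisualAugmenter augmenter) { feedAugmenter = augmenter; }
    IVisualAugmenter getAugmenter() { return feedAugmenter; }

    // Step 2: the new button's click handler toggles the strategy on and off.
    void invertButtonClicked() {
        setAugmenter(feedAugmenter == invert ? noAugmentation : invert);
    }

    byte[] processFrame(byte[] pixels) {
        return feedAugmenter.augmentFeed(pixels);
    }
}
```

Nothing else in the host changes when the new class is added, which is the property the architecture is aiming for.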

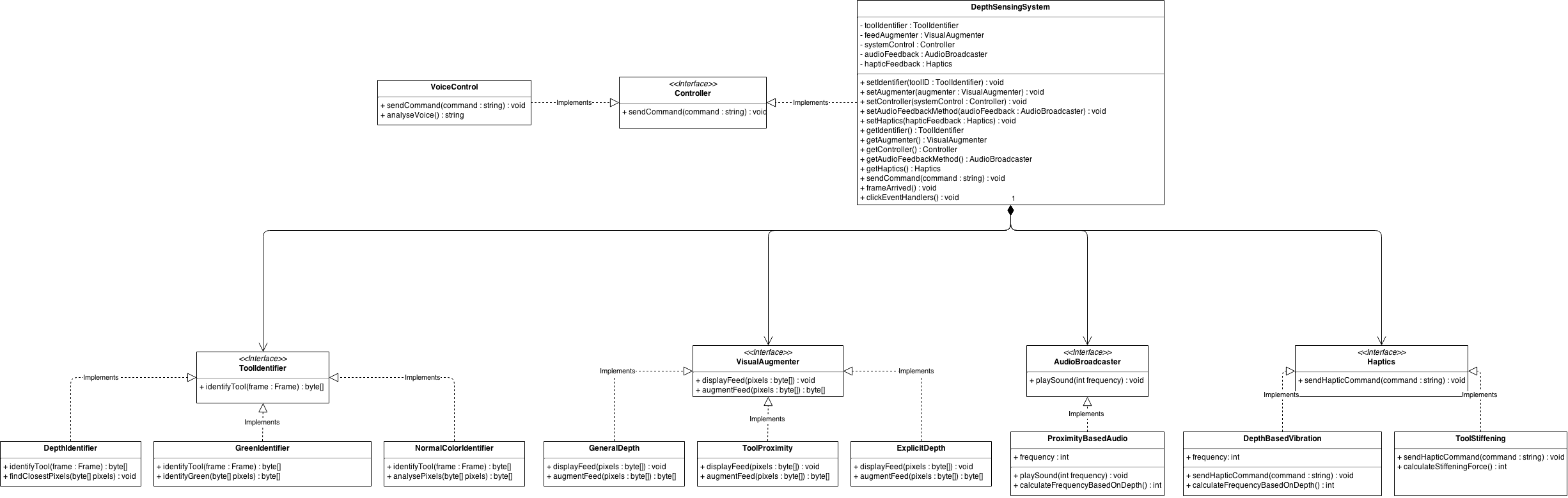

Architectural Diagram

The team created a UML class diagram that illustrates the object-oriented design of the Depth Sensing Surgical System and its use of the Strategy design pattern. The diagram is intended as a guide for future developers, because the team will not be able to implement every strategy it shows, such as Haptics or AudioBroadcaster. These are included in the design so that future developers can see how they would fit into the system architecturally, making it relatively easy for them to add the functionality.

One of the biggest requests from the team’s clients was some form of haptic feedback. Unfortunately, the team cannot implement this itself due to lack of time, but it has allowed for it in the object-oriented design so that future developers can easily add the feature.

There are five different strategies here that can be implemented by the system, although the team will not be implementing all of them. The ones that the team will implement are:

-VisualAugmenter

-ToolIdentifier

-Controller

The team will focus first on VisualAugmenter strategies, as these are the most important aspect of the system, followed by Controller and ToolIdentifier strategies. Below is a description of every class shown in the UML diagram and each of its members.

Class Description

The UML diagram contains several classes, such as a Haptics strategy and corresponding concrete implementations, that will not be implemented in our final system.

These classes are included to illustrate how they would fit into the system should they be added at a later date. Classes that we are not likely to implement have their names highlighted in red; those that will be implemented if there is time are highlighted in orange.

DepthSensingSystem implements Controller

Instance Variables

toolIdentifier : ToolIdentifier : an instance of the ToolIdentifier strategy, which is used to analyse each frame received from the Kinect and identify the tool in the frame.

feedAugmenter : VisualAugmenter : an instance of the VisualAugmenter strategy, which can be changed by commands from instances of the Controller strategy. Each instance of VisualAugmenter alters the appearance of the feed from the Kinect to intuitively display information, for example colouring the feed according to depth (GeneralDepth).

systemControl : Controller : an instance of the Controller strategy, which changes which visual augmentations the system is displaying.

audioFeedback : AudioBroadcaster : an instance of the AudioBroadcaster strategy, which can provide depth information via audio feedback.

hapticFeedback : Haptics : an instance of the Haptics strategy, which will provide haptic feedback in different ways based on depth information received from the Kinect.

Methods

setIdentifier(toolID : ToolIdentifier) : void : sets the ToolIdentifier strategy used by the system, which will determine how the tool is identified.

setAugmenter(augmenter : VisualAugmenter) : void : sets the VisualAugmenter strategy used by the system, which will determine how the feed is augmented to display depth information.

setController(systemControl : Controller) : void : sets the method of control used by the system. Concrete implementations of control strategies allow the system’s behaviour to be changed at runtime, for example by changing the visual augmentation or the method of tool identification.

setAudioFeedbackMethod(audioFeedback : AudioBroadcaster) : void : sets the strategy used to provide audio feedback by the system.

setHaptics(hapticFeedback : Haptics) : void : sets the strategy used to provide haptic feedback by the system.

getIdentifier() : ToolIdentifier : returns the ToolIdentifier instance that the system is currently using to identify tools in the camera feed.

getAugmenter() : VisualAugmenter : returns the VisualAugmenter instance that the system is currently using to augment the camera feed.

getController() : Controller : returns the Controller instance that the system is currently using to change which other strategies it is using.

getAudioFeedbackMethod() : AudioBroadcaster : returns the AudioBroadcaster strategy instance currently being used by the system to provide audio-based depth feedback.

getHaptics() : Haptics : returns the Haptics strategy instance that the system is currently using to provide haptic feedback.

sendCommand(command : string) : void : runs the appropriate method as determined by the command received. This class implements Controller so that it can handle clicks as a fallback in case voice control fails.

param: string command : the command sent by the click event handler, telling the system how to act.

frameArrived() : void : an event handler specific to the Kinect. The event is triggered each time the Kinect delivers a new image, 30 times per second, and launches image analysis and augmentation by the relevant strategies before the image is displayed on the screen.

clickEventHandlers() : void : represents a group of methods that will handle click events from the various controls on the HUD.
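A skeleton of this class might look like the following Java sketch (the real system is C#). Only the three strategies the team planned to implement are included; the audio and haptic fields would follow the same setter and getter pattern. The `Frame` class, the `registerAugmenter` method and the command-to-augmenter map are hypothetical simplifications, not part of the UML design.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a Kinect frame: colour bytes plus depth values.
class Frame {
    final byte[] color;
    final int[] depthMm;
    Frame(byte[] color, int[] depthMm) { this.color = color; this.depthMm = depthMm; }
}

interface Controller { void sendCommand(String command); }

interface ToolIdentifier { byte[] identifyTool(Frame frame); }

interface VisualAugmenter {
    byte[] augmentFeed(byte[] pixels);
    void displayFeed(byte[] pixels);
}

class DepthSensingSystem implements Controller {
    private ToolIdentifier toolIdentifier;
    private VisualAugmenter feedAugmenter;
    private Controller systemControl = this; // clicks are the fallback controller
    // Hypothetical registry mapping command strings to augmenters.
    private final Map<String, VisualAugmenter> augmenters = new HashMap<>();

    DepthSensingSystem(ToolIdentifier identifier, VisualAugmenter initial) {
        this.toolIdentifier = identifier;
        this.feedAugmenter = initial;
    }

    void registerAugmenter(String command, VisualAugmenter augmenter) {
        augmenters.put(command, augmenter);
    }

    void setIdentifier(ToolIdentifier toolID) { toolIdentifier = toolID; }
    void setAugmenter(VisualAugmenter augmenter) { feedAugmenter = augmenter; }
    void setController(Controller control) { systemControl = control; }
    ToolIdentifier getIdentifier() { return toolIdentifier; }
    VisualAugmenter getAugmenter() { return feedAugmenter; }
    Controller getController() { return systemControl; }

    // Runs the action named by the command; unknown commands are ignored.
    @Override
    public void sendCommand(String command) {
        VisualAugmenter next = augmenters.get(command);
        if (next != null) {
            setAugmenter(next);
        }
    }

    // Called once per Kinect frame: identify, augment, then display.
    void frameArrived(Frame frame) {
        byte[] pixels = toolIdentifier.identifyTool(frame);
        byte[] augmented = feedAugmenter.augmentFeed(pixels);
        feedAugmenter.displayFeed(augmented);
    }
}
```

Because `frameArrived` only talks to the interfaces, swapping any strategy through its setter changes the next frame's processing without touching this method.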

Controller

‹Interface›

Methods

sendCommand(command : string) : void : abstract method to be implemented by concrete Controller strategies. Sends the command to be executed; for example, if the user clicks a button to activate a new feed augmentation, this method runs.

param: string command : the command to execute.

VoiceControl implements Controller

Methods

sendCommand(command : string) : void : method that sends the command to be executed.

param: string command : the command to execute.

analyseVoice() : string : analyses the voice commands as they are received, extracting the command that the user has spoken.

return: string command : the extracted command that the user has spoken.
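One way VoiceControl could fit the Controller interface is sketched below in Java. Real speech recognition is out of scope: the sketch assumes some recogniser has already produced a text transcript, so `analyseVoice` takes that transcript as a parameter, unlike the parameterless method in the UML. The command vocabulary and `onSpeechRecognised` are hypothetical.

```java
import java.util.List;
import java.util.Locale;

interface Controller {
    void sendCommand(String command);
}

class VoiceControl implements Controller {
    // Hypothetical vocabulary of spoken commands.
    private static final List<String> COMMANDS =
            List.of("general depth", "tool proximity", "explicit depth");
    private final Controller target; // e.g. the DepthSensingSystem

    VoiceControl(Controller target) { this.target = target; }

    // Forwards the command to the system that will execute it.
    @Override
    public void sendCommand(String command) {
        target.sendCommand(command);
    }

    // Returns the first known command found in the transcript, or null if none.
    String analyseVoice(String transcript) {
        String spoken = transcript.toLowerCase(Locale.ROOT);
        for (String command : COMMANDS) {
            if (spoken.contains(command)) {
                return command;
            }
        }
        return null;
    }

    // Analyses a transcript and forwards any command it contains.
    void onSpeechRecognised(String transcript) {
        String command = analyseVoice(transcript);
        if (command != null) {
            sendCommand(command);
        }
    }
}
```

Since VoiceControl only forwards strings to another Controller, the system's click handling and voice handling share one command vocabulary.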

ToolIdentifier

‹Interface›

Methods

identifyTool(frame : Frame) : byte[] : abstract method to be implemented by all concrete ToolIdentifier strategies. This method will be used to identify which pixels are part of the tool in the image received from the Kinect.

param: Frame frame : the image acquired by the Kinect. Contains both color and depth information.

return: byte[] newImage : after the frame has been analysed, it will be in the form of an array of bytes. This array can then either be passed to other methods for further analysis or augmentation, or displayed directly on the feed.

DepthIdentifier implements ToolIdentifier

Methods

identifyTool(frame : Frame) : byte[] : concrete implementation of method inherited from the interface.

findClosestPixels(byte[] pixels) : void : method to analyse the depth information provided by the Kinect, which finds which pixels are closest to the camera and thus most likely to be a part of the tool.

param: byte[] pixels : the depth image provided by the Kinect in the form of an array of pixels. The image is analysed by iterating over this array.
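A closest-pixel search could work roughly as follows. This Java sketch assumes depth values in millimetres stored in an `int` array, with 0 meaning "no reading", and treats every pixel within a hypothetical `toleranceMm` of the closest reading as part of the tool; it returns the matching pixel indices rather than `void` so the result can be inspected.

```java
import java.util.stream.IntStream;

class DepthIdentifier {
    private final int toleranceMm;

    DepthIdentifier(int toleranceMm) { this.toleranceMm = toleranceMm; }

    // Returns the indices of pixels that are probably part of the tool.
    int[] findClosestPixels(int[] depthMm) {
        int closest = Integer.MAX_VALUE;
        for (int d : depthMm) {
            if (d > 0 && d < closest) {
                closest = d;
            }
        }
        if (closest == Integer.MAX_VALUE) {
            return new int[0]; // no valid depth readings in this frame
        }
        final int limit = closest + toleranceMm;
        return IntStream.range(0, depthMm.length)
                .filter(i -> depthMm[i] > 0 && depthMm[i] <= limit)
                .toArray();
    }
}
```

The heuristic assumes the tool is the nearest object to the camera, which holds only when nothing else sits between the lens and the instrument.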

GreenIdentifier implements ToolIdentifier

Methods

identifyTool(frame : Frame) : byte[] : concrete implementation of method inherited from the interface.

identifyGreen(byte[] pixels) : byte[] : iterates over the image array provided by the Kinect and identifies which pixels are bright green, and thus part of the tool.

param: byte[] pixels : the color image provided by the Kinect in the form of an array of pixels. The image is analysed by iterating over this array.

return: byte[] newPixels : a new image array with the bright green pixels of the tool identified.
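A green-marker test of this kind might be sketched in Java as below. Assumptions: 4 bytes per pixel in B, G, R, A order, and "bright green" meaning the green channel is at least a hypothetical `minGreen` and exceeds both red and blue by a hypothetical `margin`. Blacking out non-tool pixels is one possible way to mark the tool in the returned image.

```java
class GreenIdentifier {
    private final int minGreen;
    private final int margin;

    GreenIdentifier(int minGreen, int margin) {
        this.minGreen = minGreen;
        this.margin = margin;
    }

    boolean isBrightGreen(int b, int g, int r) {
        return g >= minGreen && g - r >= margin && g - b >= margin;
    }

    // Returns a copy in which every non-green pixel is blacked out, leaving the tool.
    byte[] identifyGreen(byte[] pixels) {
        byte[] newPixels = pixels.clone();
        for (int i = 0; i + 3 < pixels.length; i += 4) {
            int b = pixels[i] & 0xFF;       // mask to read bytes as 0..255
            int g = pixels[i + 1] & 0xFF;
            int r = pixels[i + 2] & 0xFF;
            if (!isBrightGreen(b, g, r)) {
                newPixels[i] = 0;
                newPixels[i + 1] = 0;
                newPixels[i + 2] = 0; // alpha (i + 3) is left unchanged
            }
        }
        return newPixels;
    }
}
```

The thresholds would need tuning against the actual markers and lighting; comparing green against the other channels, rather than checking green alone, keeps white and grey pixels from matching.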

NormalColorIdentifier implements ToolIdentifier

Methods

identifyTool(frame : Frame) : byte[] : concrete implementation of method inherited from the interface.

analysePixels(byte[] pixels) : byte[] : iterates over the image array provided by the Kinect and identifies the surgical tool in the camera view based on its colour.

param: byte[] pixels : the color image provided by the Kinect in the form of an array of pixels. The image is analysed by iterating over this array.

return: byte[] newPixels : a new image array with the tool identified.

VisualAugmenter

‹Interface›

Methods

displayFeed(pixels : byte[]) : void : abstract method to be implemented by concrete strategies. Used to display the camera feed after it has been augmented according to the strategy.

param: byte[] pixels : the image array that will be displayed by the system.

augmentFeed(pixels : byte[]) : byte[] : abstract method to be implemented by concrete strategies. Used to augment the image according to depth information.

param: byte[] pixels : the image provided by the Kinect in array form. This array will be altered to produce the augmentation.

return: byte[] newPixels : the augmented image in the form of an array of pixels which will be displayed.

GeneralDepth implements VisualAugmenter

Methods

displayFeed(pixels : byte[]) : void : used to display the camera feed after it has been augmented.

param: byte[] pixels : the image array that will be displayed by the system.

augmentFeed(pixels : byte[]) : byte[] : augments the feed to be displayed. Specifically, GeneralDepth colours all pixels according to how far away they are from the camera, with green pixels being further away and yellow, orange and red pixels being close, with red being the closest.

param: byte[] pixels : the image provided by the Kinect in array form. This array will be altered to produce the augmentation.

return: byte[] newPixels : the augmented image in the form of an array of pixels which will be displayed.
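The GeneralDepth colouring can be sketched as a banded mapping from depth to colour. This Java sketch assumes BGRA pixels and a parallel array of depth readings in millimetres passed alongside them (the UML signature takes only the pixel array); the band boundaries are illustrative constructor parameters, not values from the design.

```java
class GeneralDepth {
    // Upper bounds (mm) for the red, orange and yellow bands; beyond is green.
    private final int redMax, orangeMax, yellowMax;

    GeneralDepth(int redMax, int orangeMax, int yellowMax) {
        this.redMax = redMax;
        this.orangeMax = orangeMax;
        this.yellowMax = yellowMax;
    }

    // Returns {B, G, R} for a depth reading: red closest, green furthest.
    int[] colourForDepth(int depthMm) {
        if (depthMm <= redMax) return new int[] {0, 0, 255};      // red
        if (depthMm <= orangeMax) return new int[] {0, 165, 255}; // orange
        if (depthMm <= yellowMax) return new int[] {0, 255, 255}; // yellow
        return new int[] {0, 255, 0};                             // green
    }

    // Paints each pixel with its depth colour; pixels with no reading (0) are kept.
    byte[] augmentFeed(byte[] pixels, int[] depthMm) {
        byte[] newPixels = pixels.clone();
        for (int p = 0; p < depthMm.length && 4 * p + 3 < pixels.length; p++) {
            if (depthMm[p] <= 0) continue;
            int[] bgr = colourForDepth(depthMm[p]);
            for (int c = 0; c < 3; c++) {
                newPixels[4 * p + c] = (byte) bgr[c];
            }
        }
        return newPixels;
    }
}
```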

ToolProximity implements VisualAugmenter

Methods

displayFeed(pixels : byte[]) : void : used to display the camera feed after it has been augmented.

param: byte[] pixels : the image array that will be displayed by the system.

augmentFeed(pixels : byte[]) : byte[] : augments the feed to be displayed. Specifically, ToolProximity colours the surgical tools based on how close they are to the body tissue. When a tool is close or touching, it turns red, and when it is further away from the tissue it is coloured green.

param: byte[] pixels : the image provided by the Kinect in array form. This array will be altered to produce the augmentation.

return: byte[] newPixels : the augmented image in the form of an array of pixels which will be displayed.
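The red/green rule reduces to a threshold on the gap between tool and tissue. This Java sketch assumes the tool's pixel indices have already been found (for example by the green-marker search), that the tool and tissue depths are known in millimetres, and that BGRA pixels are used; `closeMm` is an illustrative threshold and the extra parameters are not part of the UML signature.

```java
class ToolProximity {
    private final int closeMm;

    ToolProximity(int closeMm) { this.closeMm = closeMm; }

    // Gap between tool and tissue; the tissue lies further from the camera.
    static int gapMm(int toolDepthMm, int tissueDepthMm) {
        return Math.max(0, tissueDepthMm - toolDepthMm);
    }

    // Colours every tool pixel red when close or touching, green otherwise.
    byte[] augmentFeed(byte[] pixels, int[] toolIndices, int toolDepthMm, int tissueDepthMm) {
        boolean close = gapMm(toolDepthMm, tissueDepthMm) <= closeMm;
        byte red = (byte) (close ? 255 : 0);
        byte green = (byte) (close ? 0 : 255);
        byte[] newPixels = pixels.clone();
        for (int p : toolIndices) {
            newPixels[4 * p] = 0;         // blue
            newPixels[4 * p + 1] = green;
            newPixels[4 * p + 2] = red;
        }
        return newPixels;
    }
}
```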

ExplicitDepth implements VisualAugmenter

Methods

displayFeed(pixels : byte[]) : void : used to display the camera feed after it has been augmented.

param: byte[] pixels : the image array that will be displayed by the system.

augmentFeed(pixels : byte[]) : byte[] : augments the feed to be displayed. Specifically, ExplicitDepth measures how far the functional part of the tool is from the body, and then paints this information on the screen in a small box next to the tool’s functional part, telling the surgeon exactly how far away they are from the body.

param: byte[] pixels : the image provided by the Kinect in array form. This array will be altered to produce the augmentation.

return: byte[] newPixels : the augmented image in the form of an array of pixels which will be displayed.
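The measurement and label placement behind ExplicitDepth might be sketched in Java as follows. It assumes the depths of the tool's functional tip and of the tissue directly behind it are known in millimetres, and that the label box sits a hypothetical fixed `offset` to the right of the tip, clamped so it never leaves the frame. Drawing the text into the pixel array is left out.

```java
class ExplicitDepth {
    // Text shown in the box next to the tool tip.
    static String label(int tipDepthMm, int tissueDepthMm) {
        int gap = Math.max(0, tissueDepthMm - tipDepthMm);
        return gap + " mm";
    }

    // Top-left corner {x, y} of the label box, kept inside the frame.
    static int[] boxPosition(int tipX, int tipY, int boxW, int boxH,
                             int frameW, int frameH, int offset) {
        int x = Math.max(0, Math.min(tipX + offset, frameW - boxW));
        int y = Math.max(0, Math.min(tipY - boxH / 2, frameH - boxH));
        return new int[] {x, y};
    }
}
```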

AudioBroadcaster

‹Interface›

Methods

playSound(frequency : int) : void : abstract method to be implemented by concrete strategies. Plays a sound at a frequency according to the frequency parameter.

param: int frequency : the frequency with which the sound will be played by the system.

ProximityBasedAudio implements AudioBroadcaster

Instance Variables

frequency : int : the frequency with which the sound should be played by the system.

Methods

playSound(frequency : int) : void : plays a sound at a frequency according to the frequency parameter.

param: int frequency : the frequency with which the sound will be played by the system.

calculateFrequencyBasedOnDepth() : int : calculates the frequency with which the sound should be played. With this class, the closer the surgical tools are to the body, the more frequently the sound is played, similar to a parking sensor.

return: int frequency : the frequency with which the sound should be played.
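A parking-sensor style mapping could be as simple as a linear interpolation from gap to beep rate. In this Java sketch the tool-to-tissue gap is assumed to be known in millimetres, and the range and beep rates are illustrative constructor parameters rather than figures from the design; the method takes the gap as a parameter, unlike the parameterless UML version.

```java
class ProximityBasedAudio {
    private final int maxGapMm;       // at or beyond this gap, beep at the slowest rate
    private final int minBeepsPerSec;
    private final int maxBeepsPerSec; // rate when the tool is touching

    ProximityBasedAudio(int maxGapMm, int minBeepsPerSec, int maxBeepsPerSec) {
        this.maxGapMm = maxGapMm;
        this.minBeepsPerSec = minBeepsPerSec;
        this.maxBeepsPerSec = maxBeepsPerSec;
    }

    // Linear interpolation: the smaller the gap, the more beeps per second.
    int calculateFrequencyBasedOnDepth(int gapMm) {
        int clamped = Math.max(0, Math.min(gapMm, maxGapMm));
        double closeness = 1.0 - (double) clamped / maxGapMm; // 0 far .. 1 touching
        return (int) Math.round(minBeepsPerSec + closeness * (maxBeepsPerSec - minBeepsPerSec));
    }

    // Milliseconds to wait between beeps at the given frequency.
    static long intervalMs(int beepsPerSec) {
        return 1000L / Math.max(1, beepsPerSec);
    }
}
```

With a 100 mm range and rates from 1 to 10 beeps per second, a 50 mm gap gives 6 beeps per second (5.5 rounded up).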

Haptics

‹Interface›

Methods

sendHapticCommand(command : string) : void : abstract method to be implemented by concrete strategies. Sends a command to the haptic features of the system, telling them how to behave.

DepthBasedVibration implements Haptics

Instance Variables

frequency : int : the frequency at which the haptic controls should vibrate.

Methods

sendHapticCommand(command : string) : void : sends the command to be executed to the haptic controls.

param: string command : the command telling the haptic controls how fast they should vibrate.

calculateFrequencyBasedOnDepth() : int : calculates how fast the haptic controls should vibrate. This class would allow haptic controls to vibrate based on how far the surgical tools themselves are from the body, with vibration speed increasing the closer the tools get. This method calculates the frequency of this vibration.

return: int frequency : the frequency with which the haptic controls should vibrate.

ToolStiffening implements Haptics

Causes the surgical tools to stiffen in the surgeon’s hands according to whether the tools are touching the body tissue.

Methods

sendHapticCommand(command : string) : void : sends the command to be executed to the haptic controls, in this case whether the tool should stiffen in the surgeon’s hands.

param: string command : the command sent to the haptic controls to tell them whether the surgeon’s tools should stiffen in their hands.

isTouching() : bool : measures how close the tool is to the body, and if it is deemed to be touching the body returns “true”, to tell the tool to stiffen in the surgeon’s hands.

return: bool touching : says whether the tool is touching the body tissue. True if touching, false otherwise.
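Both haptic strategies could be sketched in Java as below. The command strings ("vibrate:&lt;hz&gt;", "stiffen:on" and "stiffen:off"), the thresholds and the `update` methods are hypothetical, since real haptic hardware would define its own protocol; `lastCommand` stands in for sending to that hardware. Unlike the UML, both calculations take the tool-to-tissue gap in millimetres as a parameter.

```java
// The Haptics strategy interface from the design.
interface Haptics {
    void sendHapticCommand(String command);
}

// Vibrates faster as the tool approaches the tissue.
class DepthBasedVibration implements Haptics {
    String lastCommand;         // stand-in for sending to real hardware
    private final int maxGapMm; // at or beyond this gap, no vibration
    private final int maxHz;    // vibration frequency when touching

    DepthBasedVibration(int maxGapMm, int maxHz) {
        this.maxGapMm = maxGapMm;
        this.maxHz = maxHz;
    }

    public void sendHapticCommand(String command) { lastCommand = command; }

    // Rises linearly from 0 Hz at maxGapMm to maxHz at contact (integer division).
    int calculateFrequencyBasedOnDepth(int gapMm) {
        int clamped = Math.max(0, Math.min(gapMm, maxGapMm));
        return maxHz * (maxGapMm - clamped) / maxGapMm;
    }

    void update(int gapMm) {
        sendHapticCommand("vibrate:" + calculateFrequencyBasedOnDepth(gapMm));
    }
}

// Stiffens the tool while it is touching the tissue.
class ToolStiffening implements Haptics {
    String lastCommand;        // stand-in for sending to real hardware
    private final int touchMm; // gaps at or below this count as touching

    ToolStiffening(int touchMm) { this.touchMm = touchMm; }

    public void sendHapticCommand(String command) { lastCommand = command; }

    boolean isTouching(int gapMm) { return gapMm <= touchMm; }

    void update(int gapMm) {
        sendHapticCommand(isTouching(gapMm) ? "stiffen:on" : "stiffen:off");
    }
}
```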

Changes at Implementation

It is said that no plan survives first contact with implementation, and this design was no exception, although no major changes were made. Anyone examining the Depth Sensing Surgical System code will notice that some class names differ from those shown in the UML diagram. This is mostly because C# naming conventions were not taken into account when the diagram was created, so names like “VisualAugmenter” became “IVisualAugmenter” in the actual code of the system.

The largest difference between the UML diagram and the system the team actually built is that the team decided not to implement any ToolIdentifier strategies at this time. Early in the project, the team decided to put bright green markings on all of our props so that they could be identified, similar to a green-screen effect, because it was too difficult to implement something that could recognise the surgical tools without such markers. By the time the final system was being constructed, every visual augmentation class already had tool identification built in: each scanned for fluorescent green and then applied its augmentation to those pixels. Separating this identification into a ToolIdentifier strategy was not worth the time it would have taken, given that the visual augmentations already worked as they were and the refactoring risked breaking their functionality.

The team has kept the ToolIdentifier strategy folder in the system code for the sake of future developers. They can create tool identification algorithms that scan through the image array provided by the camera and return the indexes of the pixels in this array that make up the tool or tools. This array can then easily be passed to a visual augmentation algorithm that applies its augmentation only to the pixels at those indexes. Ideally, the team’s own visual augmentation strategies would also have worked this way, but as the augmentations were already developed by the time the final system was constructed, it was impractical to separate the two jobs.
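The proposed separation of identification from augmentation could look like the following Java sketch. The index-returning `IToolIdentifier` signature, `GreenMarkerIdentifier`, `TintAugmenter` and the green thresholds are hypothetical illustrations of the idea described above, not code from the system; BGRA pixels are assumed, and indices count pixels rather than bytes.

```java
import java.util.stream.IntStream;

interface IToolIdentifier {
    // Returns the indices (in pixels, not bytes) of every pixel belonging to a tool.
    int[] identifyTool(byte[] pixels);
}

interface IVisualAugmenter {
    byte[] augmentFeed(byte[] pixels, int[] toolPixels);
}

// Identification only: finds bright green marker pixels.
class GreenMarkerIdentifier implements IToolIdentifier {
    public int[] identifyTool(byte[] pixels) {
        return IntStream.range(0, pixels.length / 4)
                .filter(p -> {
                    int b = pixels[4 * p] & 0xFF, g = pixels[4 * p + 1] & 0xFF, r = pixels[4 * p + 2] & 0xFF;
                    return g > 150 && g > r + 60 && g > b + 60;
                })
                .toArray();
    }
}

// Augmentation only: tints whatever pixels it is given, however they were found.
class TintAugmenter implements IVisualAugmenter {
    public byte[] augmentFeed(byte[] pixels, int[] toolPixels) {
        byte[] out = pixels.clone();
        for (int p : toolPixels) {
            out[4 * p] = (byte) 255; // blue channel at full intensity
        }
        return out;
    }
}
```

Each frame would then be processed as `augmenter.augmentFeed(frame, identifier.identifyTool(frame))`, so a new identification method and a new augmentation can each be swapped in without touching the other.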