Surgical Vision Group

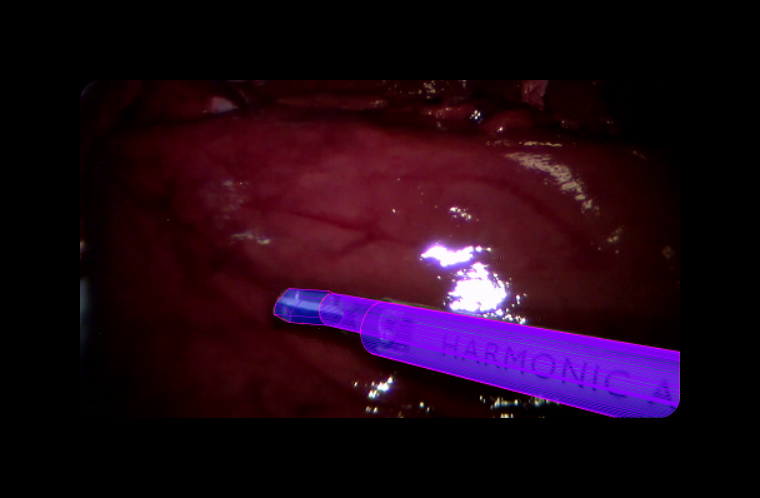

Around the middle of January this year, the team found the Surgical Robot Vision Research Group by sheer luck through a Google search. The Group conducts research into robotic surgery and was working on many of the same problems the team faced, such as identifying tools in the camera feed. It had already developed an algorithm that identifies surgical tools in a camera feed and then tracks their position. The output of one such algorithm is shown in the image above.

As can be seen, the surgical tool has been fully identified and an image has been overlaid onto it. The algorithm even distinguishes the claspers at the end of the tool from the main body of the tool.

The Surgical Robot Vision Research Group is a UCL-based research group. The team asked its supervisor, Dr Lourdes Agapito, about meeting this group, and she introduced us and arranged a meeting. The team therefore went to meet the Group to discuss the Depth Sensing Endoscope project and to receive advice, particularly on tool identification.

The team met with the Group leader, Dan Stoyanov, to discuss the project. He expressed concern about the viability of using green markers to identify tools in a deployable system, since putting markers on surgical tools would not be possible in practice. The team explained that the markers were a temporary measure used in this project only for demonstration purposes, and that if the system were deployed, a more advanced tool identification algorithm that did not require physical markers would be developed. Dan was also of the opinion that modelling a surgical system with a Kinect sensor would be difficult, because any test scenario would have to be very large to fill the Kinect's field of view, and any props acting as surgical tools would have to be scaled up significantly for the Kinect to apply the augmentations to them. However, as the Kinect is the only depth sensor available to the team, we must use it and develop algorithms robust enough that everyday objects appearing in the camera view alongside our props do not disrupt them.
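To make the marker approach concrete, here is a minimal sketch of one simple way a green marker could be located in an RGB frame: convert each pixel to HSV with Python's standard `colorsys` module, keep pixels whose hue falls in a green band, and take their centroid. The function names and the hue, saturation and value thresholds are hypothetical illustrative values, not the ones used in the project, and the frame is assumed to be a plain list of rows of 8-bit `(r, g, b)` tuples.

```python
import colorsys

def is_green_marker(r, g, b, hue_range=(90, 150), min_sat=0.4, min_val=0.3):
    """True if an 8-bit RGB pixel lies in a (hypothetical) green HSV band."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue_deg = h * 360  # colorsys returns hue in [0, 1)
    return hue_range[0] <= hue_deg <= hue_range[1] and s >= min_sat and v >= min_val

def marker_centroid(image):
    """Return the (row, col) centroid of green-marker pixels, or None if there are none.

    image: list of rows, each row a list of (r, g, b) tuples.
    """
    hits = [
        (y, x)
        for y, row in enumerate(image)
        for x, (r, g, b) in enumerate(row)
        if is_green_marker(r, g, b)
    ]
    if not hits:
        return None
    n = len(hits)
    return (sum(y for y, _ in hits) / n, sum(x for _, x in hits) / n)
```

Thresholding on hue rather than raw RGB values makes the test less sensitive to lighting, but it also shows why everyday green objects in the camera view could be mistaken for a marker.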

At this point, the team was introduced to Max Allan, who carried out most of the Group's work on tool identification, including the result shown above. The team briefly discussed with him how his tool identification algorithm worked, to see whether it could be applied to the Depth Sensing Endoscope project. The method behind the picture above uses a machine learning algorithm in which a series of decisions is made at each pixel to determine whether that pixel belongs to the tool or to the patient's body.
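The idea of making "a series of decisions" at each pixel can be illustrated with a single decision tree trained on labelled pixels. The following is a minimal, standard-library-only sketch under stated assumptions: each pixel is described only by its `(r, g, b)` colour, labels are 1 for tool and 0 for tissue, and the tree is grown greedily by minimising Gini impurity. The function names and toy data are hypothetical, and this is not the Group's implementation; practical systems typically use richer per-pixel features and ensembles of many trees.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(samples):
    """Find the (feature, threshold) split that most reduces Gini impurity, or None."""
    best, best_score = None, gini([y for _, y in samples])
    for f in range(len(samples[0][0])):
        for t in sorted({x[f] for x, _ in samples}):
            left = [y for x, y in samples if x[f] <= t]
            right = [y for x, y in samples if x[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(samples)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(samples, depth=0, max_depth=3):
    """Grow a tree of (feature, threshold, left, right) tuples; leaves are labels."""
    labels = [y for _, y in samples]
    majority = Counter(labels).most_common(1)[0][0]
    if depth >= max_depth or len(set(labels)) == 1:
        return majority
    split = best_split(samples)
    if split is None:
        return majority
    f, t = split
    left = [s for s in samples if s[0][f] <= t]
    right = [s for s in samples if s[0][f] > t]
    return (f, t, build_tree(left, depth + 1, max_depth),
            build_tree(right, depth + 1, max_depth))

def classify(tree, pixel):
    """Follow the chain of threshold decisions for one pixel down to a leaf label."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if pixel[f] <= t else right
    return tree

if __name__ == "__main__":
    # Toy data (hypothetical): greyish tool pixels vs reddish tissue pixels.
    training = [((150, 150, 155), 1), ((170, 170, 175), 1), ((140, 145, 150), 1),
                ((200, 80, 70), 0), ((180, 60, 60), 0), ((210, 100, 90), 0)]
    tree = build_tree(training)
    print(tree)  # prints (0, 170, 1, 0): one split on the red channel
    print(classify(tree, (160, 160, 160)), classify(tree, (190, 70, 65)))
```

On this toy data a single decision on the red channel is enough; with real endoscope or Kinect footage, deeper trees and more informative features would be needed.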

Following this, the team was taken to see some of the equipment the Group uses for research. Our clients had talked about the Da Vinci surgical robot, which is a potential target for the Depth Sensing Surgical System after deployment. The Surgical Robot Vision Group owns a Da Vinci robot that it uses for testing its various research projects, and the Group let us see and try it. A picture of the Da Vinci robot is shown below:

The Da Vinci robot consists of three separate units: the patient-side cart, a processing terminal and the control unit (not pictured here). Using a Da Vinci surgical robot gave the team first-hand experience of why this project would be so useful to surgeons if deployed.

The team's challenge was to remove a piece of fake suture from a small plastic model. Although the Da Vinci robot provides a 3D view of the operating area, it was still extremely difficult to judge how close the robot's tools were to the model. Every member of the team believed their claspers were in line with the suture, but on closing the claspers to remove it, they found the claspers were still a few centimetres above the suture. Given how hard it was to judge depth even with a 3D view, it is no wonder that so much training is needed to use one of these robots. The Depth Sensing Surgical System, if applied to this robot, would have removed much of this difficulty.

Using the Da Vinci system reassured the team that it had chosen the right augmentations to implement, as any of them would have made this task far easier. This is particularly true of the ToolProximity augmentation, which would have informed the user when the clasper was getting close to the piece of suture.
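At its core, a proximity warning of the kind ToolProximity provides comes down to measuring a 3D distance. The sketch below assumes a pinhole camera model with hypothetical intrinsics (`FX`, `FY`, `CX`, `CY`; a real Kinect would need calibrated values). It back-projects the tool tip and a target point from pixel coordinates plus depth into metres and raises a flag when they are closer than an assumed 2 cm threshold. The function names are illustrative and are not the project's actual ToolProximity code.

```python
import math

# Hypothetical pinhole intrinsics in pixels; a real system would use calibrated values.
FX, FY = 525.0, 525.0
CX, CY = 319.5, 239.5

def back_project(u, v, depth_m):
    """Map pixel (u, v) with depth in metres to an (x, y, z) point in the camera frame."""
    x = (u - CX) * depth_m / FX
    y = (v - CY) * depth_m / FY
    return (x, y, depth_m)

def tool_proximity(tip_px, target_px, warn_at_m=0.02):
    """Return (distance_m, warn) for a tool tip and a target, each given as (u, v, depth_m)."""
    tip = back_project(*tip_px)
    target = back_project(*target_px)
    distance = math.dist(tip, target)  # Euclidean distance (Python 3.8+)
    return distance, distance < warn_at_m

if __name__ == "__main__":
    # Same pixel, different depths: aligned in the image but about 3 cm apart in space.
    print(tool_proximity((320, 240, 0.50), (320, 240, 0.53)))
```

The usage case mirrors the Da Vinci experience: two points that sit on the same pixel look aligned in the image, yet their depth values reveal a gap of a few centimetres.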

Following the meeting with the Surgical Robot Vision Group, the team remained in contact with Max Allan to discuss how tool identification without green markers might be implemented. He gave us several recommendations.

The first was to record a large number of videos from the Kinect showing the tool moving around, and then to label the tool manually in each frame. The labelled data could then serve as training input to a machine learning algorithm that recognises the tool, or as a validation data set to check that the machine learning algorithm identifies tools correctly.
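Once frames have been labelled by hand, they can serve as a validation set by comparing each predicted tool mask with its hand-labelled counterpart. A minimal sketch follows, assuming both masks are equally sized lists of rows of 0/1 values. Precision, recall and intersection-over-union (IoU) are standard segmentation scores chosen here for illustration, not metrics the Group prescribed, and `mask_scores` is a hypothetical name.

```python
def mask_scores(predicted, labelled):
    """Compare two equally sized binary masks (lists of rows of 0/1).

    Returns (precision, recall, iou) for the tool class.
    """
    tp = fp = fn = 0
    for p_row, l_row in zip(predicted, labelled):
        for p, l in zip(p_row, l_row):
            if p and l:
                tp += 1  # tool pixel correctly predicted
            elif p:
                fp += 1  # predicted tool, labelled background
            elif l:
                fn += 1  # labelled tool, missed by the prediction
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Two empty masks agree perfectly, so IoU is defined as 1.0 in that case.
    iou = tp / (tp + fp + fn) if tp + fp + fn else 1.0
    return precision, recall, iou
```

For example, a prediction that recovers three of four labelled tool pixels and marks one extra background pixel as tool scores 0.75 precision, 0.75 recall and 0.6 IoU. Averaging these scores over many labelled frames gives a simple benchmark for comparing a marker-free method against the current green-marker approach.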

The team was advised that, although we are only interested in the position of the tool's tip relative to the body, it would be better to track the whole tool, because this places more constraints on our estimate of where the tool is in the camera feed. Max Allan also pointed us towards a few more established methods for identifying the tool, such as spectral clustering.
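The advantage of tracking the whole tool can be illustrated with a small geometric sketch. Fitting an axis to every detected tool pixel constrains the tool's direction far more than a handful of tip pixels could, and the tip can then be read off as the far end of that axis. The sketch assumes the tool appears as a roughly straight shaft, that its pixels are given as `(x, y)` coordinates, and that the point where the tool enters the image (`entry_point`) is known. These assumptions and the function names are hypothetical. The fit is a closed-form principal component analysis of the 2x2 covariance matrix, not the spectral clustering approach mentioned above.

```python
import math

def principal_axis(points):
    """Return the centroid and unit major-axis direction of (x, y) points (2D PCA)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Closed-form angle of the covariance matrix's principal eigenvector.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (mx, my), (math.cos(theta), math.sin(theta))

def estimate_tip(points, entry_point):
    """Estimate the tool tip as the far end of the fitted axis, away from entry_point."""
    (mx, my), (dx, dy) = principal_axis(points)
    # Orient the axis so it points from the entry point towards the tool body.
    if (mx - entry_point[0]) * dx + (my - entry_point[1]) * dy < 0:
        dx, dy = -dx, -dy
    # Furthest projection of any tool pixel along the oriented axis.
    t = max((x - mx) * dx + (y - my) * dy for x, y in points)
    # Snap the tip onto the fitted axis so off-axis noise does not shift it sideways.
    return (mx + t * dx, my + t * dy)
```

Because the tip is taken from the furthest projection, a single stray pixel lying along the axis can still move it; a practical version would discard outliers before fitting.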

Unfortunately, the team only began corresponding with Max Allan in the later stages of the project, and so did not have time to research and implement the methods he recommended, which are fairly advanced and would take considerable time to get working. However, this information is extremely useful to pass on to future developers, as it records the ways in which we were considering advancing the project. Armed with our research and these recommendations, future developers should be able to add advanced features quickly, such as algorithms that identify tools without physical markers, rather than starting from scratch.

The advice given to the team by the Surgical Robot Vision Group has been extremely valuable, and the team would like to offer its sincere thanks to the Group, particularly to Max Allan and Dan Stoyanov for sparing their time to help us advance our project.

The Surgical Robot Vision Research Group’s website can be found at: http://www.surgicalvision.cs.ucl.ac.uk