Limitations of the Kinect

Throughout this project, the team has been using a Kinect 2.0 sensor to design the proof-of-concept system. The Kinect is suitable for this role because it is easy to program in C# and easy to modify at the hardware level so that it can be programmed from a laptop. It also has excellent, high-resolution depth sensing and a top-quality microphone array.

However, the Kinect has several major limitations, which meant that many aspects of the system that has been built could not be tested realistically.

The Kinect has no depth sensing ability at any distance less than 50 centimetres, and anything closer than about a metre results in a “doubling” effect when the depth data is mapped to the colour image: there appear to be two of everything. By contrast, the maximum distance between an endoscope and the operational area in the body is 10 centimetres. Because the Kinect is only accurate at distances of over a metre, its camera captures a massive area. When the Kinect was 1.6m away from the nearest wall, an area of approximately 1.8 x 1.5m was in view. This makes it extremely difficult to build test scenarios for the Kinect, and building something like a model of the body is out of the question because the model would have to be extremely large for the Kinect to function inside it. Indeed, Dan Stoyanov of the Surgical Robot Vision Research Group at UCL expressed concern at the difficulty of creating a realistic surgical scenario with a sensor as large as the Kinect.
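To put the measurement above in perspective, the following is a minimal sketch of the arithmetic rather than part of the team's system. It assumes a simple pinhole camera model and that the 1.8 x 1.5m area was measured on a flat wall facing the sensor squarely; the function names are illustrative only.

```python
import math

def angular_fov_deg(extent_m, distance_m):
    """Angle (degrees) subtended by a flat extent viewed head-on from distance_m."""
    return math.degrees(2 * math.atan((extent_m / 2) / distance_m))

def footprint_at(extent_m, measured_distance_m, new_distance_m):
    """Scale a measured extent linearly to another distance (pinhole assumption)."""
    return extent_m * new_distance_m / measured_distance_m

# Figures reported in the text above.
WIDTH_M, HEIGHT_M, DISTANCE_M = 1.8, 1.5, 1.6
# Maximum endoscope working distance quoted in the text.
ENDOSCOPE_DISTANCE_M = 0.10

h_fov = angular_fov_deg(WIDTH_M, DISTANCE_M)
v_fov = angular_fov_deg(HEIGHT_M, DISTANCE_M)
close_w = footprint_at(WIDTH_M, DISTANCE_M, ENDOSCOPE_DISTANCE_M)
close_h = footprint_at(HEIGHT_M, DISTANCE_M, ENDOSCOPE_DISTANCE_M)

print(f"Implied field of view: {h_fov:.1f} x {v_fov:.1f} degrees")
print(f"Area in view at 10 cm: {close_w * 100:.1f} x {close_h * 100:.1f} cm")
print(f"Linear scale difference: {DISTANCE_M / ENDOSCOPE_DISTANCE_M:.0f}x")
```

Under these assumptions, the measured area implies a field of view of roughly 59 by 50 degrees. The same optics at the 10 centimetre endoscope distance would cover only about 11 x 9cm, so an object filling a given share of the Kinect's image at 1.6m would need to be around sixteen times larger in each dimension than its counterpart seen through an endoscope.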

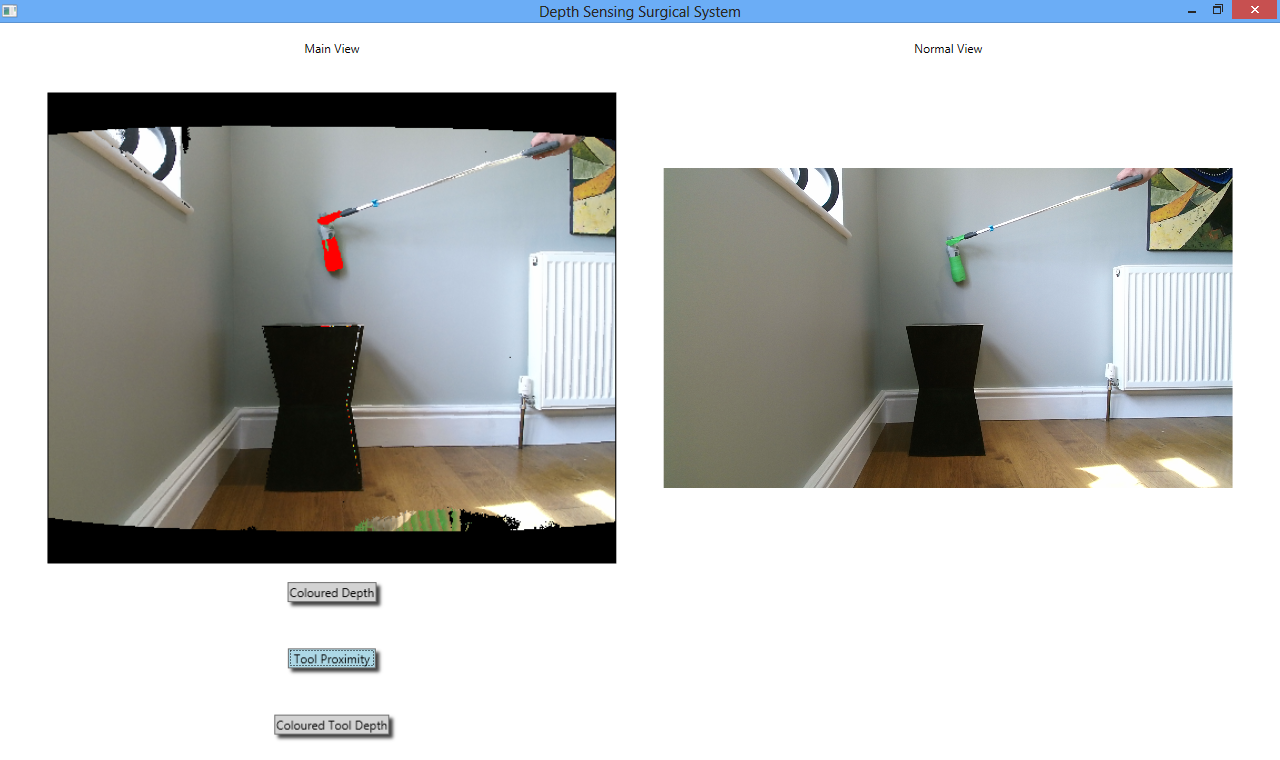

The size of the area captured by the Kinect also makes realistic testing difficult. Even when the tools are scaled up, as with the grabber arms that the team used, they look extremely small relative to the size of the picture in the Kinect's camera view. When a Kinect image is compared to an actual surgical image, the difference is striking.

As can be seen, the difference in the apparent size of the tool is significant. In the top picture, taken from the Kinect, both the grabber arm and the bottle look small. In the lower image, which is from actual laparoscopic surgery, the tools cover a much larger proportion of the screen.

The Kinect sensor is a hindrance here because, as soon as it is moved closer to the test scene to make the apparent size of the props equivalent to that of tools in actual surgery, its depth sensing no longer works. The team is already using the largest props that it can get; anything larger would be cumbersome and would make the system difficult to test. This issue is one of the main limitations of the Kinect, but it is to be expected. After all, the Kinect was designed to capture multiple people in its view and track their movements to control an Xbox One, not to look at tools much smaller than people as if it were an endoscope inside a body.