Goals

When one thinks of the future of medical technology, images of autonomous robotic surgeons, nanotechnology and ultra-advanced cure-all drugs often come to mind. Hopefully, these things will one day become reality. However, every major development needs a starting point, and it is to that starting point that this project hopes to contribute.

Creating a prototype of a depth-sensing endoscope and its corresponding software will help lay the path for further development, potentially leading to true immersion in the operational area through advanced haptic feedback and high-resolution 3D images. Further down the line of development, this technology could perhaps even be adapted for autonomous robotic surgeons.

This section outlines what this project hopes to lay the groundwork for and the developments that this research could aid, finishing with potential medical advances that could occur far in the future.

In The Short Term

“The Short Term” refers to the end of the module associated with this project, towards the end of March 2015. This section outlines what it is hoped will be achieved by then.

3D Images

The Kinect sensor can be used to produce 3D images. This allows the feed to be rotated to see the operating area from different angles. An example of a coloured point cloud that is used to represent the 3D image is shown below:

The team has not conducted a great deal of research into this feature of the Kinect, but it could be an extremely useful area to research, as it would give the surgeon 3D visual feedback. The Kinect sensor could also be used to rotate the image. Because the Kinect is capable of tracking hand movements, the surgeon could simply hold up a hand and move it to rotate the image, meaning that no equipment would need to be kept sterile for the surgeon to control the orientation of the feed. The Kinect can also tell whether the person it is looking at has an open hand or a closed fist, so the surgeon could clench their hand into a fist to, in effect, grab the 3D image and then rotate it.
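The grab-and-rotate interaction can be sketched as follows. This is a minimal illustration, not the team's code: `HandSample`, `GestureRotator`, `rotate` and the sensitivity value are hypothetical names and assumptions, and a real implementation would read hand positions and the open/closed hand state from the Kinect SDK's own body-tracking types. Hand movement only changes the view while the fist is closed, and opening the hand "releases" the image so that the next grab does not cause a jump.

```python
import math
from dataclasses import dataclass


@dataclass
class HandSample:
    # Hypothetical per-frame hand reading (assumption, not a real SDK type).
    x: float           # horizontal hand position in metres
    y: float           # vertical hand position in metres
    fist_closed: bool  # True while the surgeon is "grabbing" the image


class GestureRotator:
    """Turns hand movement made with a closed fist into yaw and pitch angles."""

    def __init__(self, radians_per_metre: float = math.pi):
        self.radians_per_metre = radians_per_metre  # assumed sensitivity
        self.yaw = 0.0
        self.pitch = 0.0
        self._last = None  # hand position on the previous grabbing frame

    def update(self, hand: HandSample) -> None:
        if not hand.fist_closed:
            self._last = None  # open hand: release the image
            return
        if self._last is not None:
            self.yaw += (hand.x - self._last[0]) * self.radians_per_metre
            self.pitch += (hand.y - self._last[1]) * self.radians_per_metre
        self._last = (hand.x, hand.y)


def rotate(points, yaw, pitch):
    """Rotate (x, y, z) points about the y axis (yaw), then about the x axis (pitch)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    out = []
    for x, y, z in points:
        x1 = cy * x + sy * z   # yaw rotation
        z1 = -sy * x + cy * z
        y2 = cp * y - sp * z1  # pitch rotation
        z2 = sp * y + cp * z1
        out.append((x1, y2, z2))
    return out
```

Each frame, the renderer would call `update` with the latest hand sample and redraw the point cloud with `rotate(cloud, rotator.yaw, rotator.pitch)`.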

UI Redesign

While the team’s current user interface has achieved the goals set for it when planning this project, it could still be improved. Its simplicity is already at the right level, making it extremely easy for a surgeon to understand, so it should be altered as little as possible. However, certain additions and adjustments would improve it. For example, a help button is needed to remind the user of the voice control commands if they forget them.

The system could also follow Norman’s design principles more closely. For example, visibility of system status is currently fairly poor: there is no indication that the system is listening for voice commands, and, unlike the example programs provided by Microsoft, no text shows the status of the Kinect or other connected depth sensor. A small line of text that updates as the status of the Kinect changes would help. For example, if the Kinect is disconnected from the USB port, the text would read “Kinect: disconnected”, and if the system is running normally, it would read “Kinect: running”. This text, together with a small icon indicating that the system is listening for voice commands, would improve the visibility of system status.
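A status line like this could be driven by a small model that listens for sensor and speech-recognition events. The sketch below assumes a hypothetical `SensorStatus` enum and hypothetical event handler names. The real Kinect SDK reports its own status values and events, which a real implementation would map onto this text.

```python
from enum import Enum


class SensorStatus(Enum):
    # Hypothetical states; each value is the text shown after "Kinect: ".
    CONNECTED = "running"
    DISCONNECTED = "disconnected"
    INITIALIZING = "initialising"
    NOT_POWERED = "not powered"


class StatusBar:
    """Holds what the status line and the listening icon should display."""

    def __init__(self):
        self.sensor = SensorStatus.DISCONNECTED
        self.listening = False

    def on_sensor_status_changed(self, status: SensorStatus) -> None:
        self.sensor = status  # called whenever the sensor reports a change

    def on_listening_changed(self, listening: bool) -> None:
        self.listening = listening

    def text(self) -> str:
        return f"Kinect: {self.sensor.value}"

    def listening_icon_colour(self) -> str:
        return "green" if self.listening else "gray"
```

Keeping the displayed text in one place means that any new sensor state only needs a new enum value, not changes to the UI code.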

Furthermore, the aesthetics of the system could be improved. Currently, augmentations are applied by clicking a standard button or speaking a command. These buttons could be replaced with more aesthetically pleasing icons, making the system nicer to use.

Finally, the voice control could be updated so that the system does not listen for commands until it hears a phrase such as “system start listening”. This would stop the system from accidentally turning augmentations on and off when the command words come up in normal conversation. A small icon that turns green while the system is listening for commands, and gray otherwise, could show whether it is listening. This applies Norman’s principle of constraints, preventing the user from accidentally applying augmentations that they did not intend to.
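The wake-phrase idea amounts to a small state machine in front of the existing command handlers. In this sketch, `VoiceCommandGate`, the “system stop listening” counterpart phrase and the example command names are assumptions. It also assumes the speech recogniser hands over each recognised phrase as a string.

```python
class VoiceCommandGate:
    """Ignores augmentation commands unless the wake phrase has been heard."""

    WAKE_PHRASE = "system start listening"
    SLEEP_PHRASE = "system stop listening"  # assumed counterpart phrase

    def __init__(self, commands):
        # Maps lower-case command phrase -> zero-argument callable.
        self.commands = commands
        self.listening = False

    def on_phrase(self, phrase: str) -> bool:
        """Handle one recognised phrase; return True if it triggered anything."""
        phrase = phrase.strip().lower()
        if phrase == self.WAKE_PHRASE:
            self.listening = True
            return True
        if phrase == self.SLEEP_PHRASE:
            self.listening = False
            return True
        if self.listening and phrase in self.commands:
            self.commands[phrase]()
            return True
        return False  # not listening, or not a known command

    @property
    def icon_colour(self) -> str:
        return "green" if self.listening else "gray"
```

Here the system stays in listening mode until the stop phrase is heard. Another option would be to drop back to the gray state after a single command or a timeout, which would make accidental triggering even less likely.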

Add Audio Feedback

A method of providing audio feedback could be added to the system. This would emit a series of beeps that become more and more frequent as the tool approaches the body tissue.
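One simple way to make the beeps speed up is to map the measured tool-to-tissue distance onto the gap between beeps, in the same way as a car parking sensor. The range and interval limits below are placeholder assumptions, not values tested by the team.

```python
def beep_interval(distance_mm: float,
                  max_distance_mm: float = 50.0,   # assumed: silent beyond this
                  min_interval_s: float = 0.05,    # assumed: fastest beeping at contact
                  max_interval_s: float = 1.0):    # assumed: slowest beeping at range edge
    """Seconds to wait between beeps, or None if the tool is too far away to beep."""
    if distance_mm >= max_distance_mm:
        return None
    # 0.0 at contact (or closer), rising linearly towards 1.0 at the edge of the range.
    fraction = max(distance_mm, 0.0) / max_distance_mm
    return min_interval_s + fraction * (max_interval_s - min_interval_s)
```

A playback loop would emit a beep, then wait `beep_interval(d)` seconds using the latest distance `d` from the depth data before the next one. A linear mapping is the simplest choice. A non-linear curve could exaggerate the change in the last few millimetres.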

Perfect the Edge Finding ToolProximity Augmentation

The ToolProximity augmentation that relies on edge finding shows the most promise of all the augmentations created by the team. Unfortunately, its development could not be finished to a standard high enough to demonstrate, so a different, simpler algorithm was designed. However, if the edge-finding version of ToolProximity were properly developed, it would allow the surgeon to tell how far tools are from other tools or from small objects in the body, such as blood vessels, rather than only from the main background (the only thing the simpler solution can measure distance from). For full details of the ToolProximity algorithm that was eventually implemented, refer to the Development article of this website.
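The sketch below shows one possible shape for an edge-finding proximity measure. It is not the team's unfinished algorithm. It assumes a depth map in millimetres stored as a list of rows and a set of `(row, col)` tool pixels from a tool identifier. Depth discontinuities among non-tool pixels mark the boundaries of objects such as blood vessels. The reported proximity is the smallest depth gap between a tool pixel and any such edge within a small pixel window. The threshold and window size are assumptions.

```python
def depth_edges(depth, tool, threshold_mm=10):
    """Non-tool pixels whose depth jumps by more than threshold_mm from a non-tool 4-neighbour."""
    rows, cols = len(depth), len(depth[0])
    edges = set()
    for r in range(rows):
        for c in range(cols):
            if (r, c) in tool:
                continue  # the tool's own outline is not an obstacle
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in tool:
                    if abs(depth[r][c] - depth[nr][nc]) > threshold_mm:
                        edges.add((r, c))
                        break
    return edges


def edge_proximity(depth, tool, radius=3, threshold_mm=10):
    """Smallest depth gap (mm) between a tool pixel and a nearby object edge, or None."""
    edges = depth_edges(depth, tool, threshold_mm)
    best = None
    for tr, tc in tool:
        for er, ec in edges:
            # Only consider edges inside a (2 * radius + 1)-pixel square around the tool pixel.
            if abs(tr - er) <= radius and abs(tc - ec) <= radius:
                gap = abs(depth[tr][tc] - depth[er][ec])
                if best is None or gap < best:
                    best = gap
    return best
```

The nested loop over tool and edge pixels is quadratic, which is fine for a sketch. A real-time version would need a spatial index or a distance transform.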

Add New Augmentations

Adding new augmentations to the system is simple, thanks to the Strategy design pattern implemented by the team. Developing new augmentations that provide depth feedback to the user is a useful way to develop the system further.
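The Strategy pattern makes this easy because the code that displays the feed depends only on a common augmentation interface. The sketch below illustrates the pattern in general terms. The class names `Augmentation`, `NoAugmentation`, `DepthThreshold` and `FeedRenderer` are hypothetical and do not come from the team's code. Frames are lists of RGB tuples, and depth maps are matching lists of millimetre values.

```python
from abc import ABC, abstractmethod


class Augmentation(ABC):
    """Strategy interface: turns a colour frame plus depth map into an augmented frame."""

    @abstractmethod
    def apply(self, frame, depth):
        ...


class NoAugmentation(Augmentation):
    def apply(self, frame, depth):
        return [row[:] for row in frame]  # unmodified copy of the normal feed


class DepthThreshold(Augmentation):
    """Hypothetical example strategy: paints pixels nearer than a cut-off red."""

    def __init__(self, cutoff_mm):
        self.cutoff_mm = cutoff_mm

    def apply(self, frame, depth):
        return [[(255, 0, 0) if d < self.cutoff_mm else px
                 for px, d in zip(frame_row, depth_row)]
                for frame_row, depth_row in zip(frame, depth)]


class FeedRenderer:
    """Context object: swapping the strategy needs no change to the rendering code."""

    def __init__(self, augmentation: Augmentation):
        self.augmentation = augmentation

    def set_augmentation(self, augmentation: Augmentation) -> None:
        self.augmentation = augmentation  # e.g. in response to a button or voice command

    def render(self, frame, depth):
        return self.augmentation.apply(frame, depth)
```

Under this structure, a new augmentation is one new subclass. The renderer, buttons and voice commands only need to know about the `Augmentation` interface.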

Add Tool Identification Methods

The team currently relies on a fluorescent green colour on all of its props to identify tools. By adding to the ToolIdentifers family of strategies, new ways of identifying surgical tools, perhaps through depth segmentation or machine learning techniques, can be developed.

With a small amount of redesign, the system could support Tool Identification strategies that return an array of indexes into the main image array, marking the pixels that belong to the tool. This array would then be passed to the visual augmentation strategies, which would change the colour of the pixels at those indexes according to the depth information.
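The redesigned pipeline could look roughly like this. A green-threshold identifier stands in for the current fluorescent-green approach, and a separate function recolours only the indexed pixels by depth. The class and function names, the colour thresholds and the near/far range are all assumptions. The image is treated as a flat, row-major list of `(r, g, b)` tuples with a matching flat depth list.

```python
from abc import ABC, abstractmethod


class ToolIdentifier(ABC):
    """Strategy interface: returns indexes into the flat pixel array that belong to a tool."""

    @abstractmethod
    def identify(self, pixels, depth):
        ...


class GreenToolIdentifier(ToolIdentifier):
    """Finds fluorescent green pixels; the thresholds here are assumed, not calibrated."""

    def __init__(self, min_green=150, max_other=100):
        self.min_green = min_green  # minimum green channel value
        self.max_other = max_other  # maximum red and blue channel values

    def identify(self, pixels, depth):
        # depth is unused here but kept so that depth-based strategies share the interface
        return [i for i, (r, g, b) in enumerate(pixels)
                if g >= self.min_green and r <= self.max_other and b <= self.max_other]


def colour_by_depth(pixels, depth, tool_indexes, near_mm=50, far_mm=150):
    """Recolour only the tool pixels: red at near_mm or closer, blue at far_mm or beyond."""
    out = list(pixels)
    for i in tool_indexes:
        t = (depth[i] - near_mm) / (far_mm - near_mm)
        t = min(max(t, 0.0), 1.0)  # clamp to the [near, far] range
        out[i] = (round(255 * (1 - t)), 0, round(255 * t))
    return out
```

Separating identification from colouring means that a depth-segmentation or machine-learning identifier could replace `GreenToolIdentifier` without touching any visual augmentation.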

In The Medium Term

“The Medium Term” refers to development that would take somewhere in the region of six months to complete. These goals could be addressed by future developers who wish to spend a significant amount of time developing the system.

Haptic Feedback

A method of providing haptic feedback to the surgeon could be developed, as this was one of the features of the system that surgeons requested most. For example, tools could stiffen when touching body tissue, or a small headpiece could vibrate. This headpiece could also be used to give voice commands to the system, like a Bluetooth headset. See the User Interface PDF for more details of this idea.

Multiple Feeds

The UI designed by the team allows the surgeon to apply multiple different augmentations to the camera feed while performing an operation. At the moment, the surgeon can view one augmentation at a time alongside the normal feed, which is always visible, but only on the single screen of the endoscope terminal. In the future, there could be multiple screens, each showing a different augmentation: for example, one with the normal feed, one with a tool proximity feed and one with a rotatable 3D image.

Robotic Endoscope

It could be possible to create an endoscope that can be controlled both by the surgeon and by software, for example a flexible camera that could be moved within the body to point in a different direction. It could be controlled by voice or by hardware controls, but the best way would be to use the Kinect’s motion tracking to control where the camera is looking, in much the same way as the 3D feed would be controlled: the surgeon simply holds up a hand and moves it to rotate the feed.

In The Long Term

“The Long Term” refers to development that is potentially years in the future, producing and using completely new surgical techniques. These are recommendations for developers willing to devote a substantial amount of time to developing this system.

3D Vision

Some surgeons, as shown in this video, believe the future may lie in advanced 3D imaging and in surgery through natural orifices, which leaves no scar at all. Indeed, 3D imaging does seem like the way forward, and besides a conventional screen there are a number of ways it could be represented, as detailed below.

Holograms

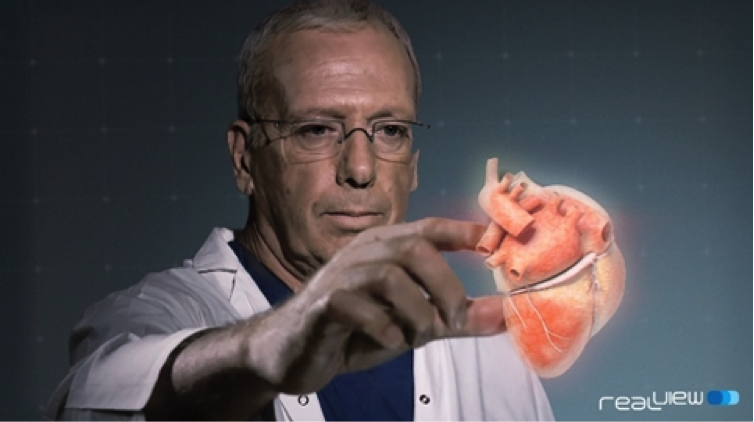

As holographic technology rapidly advances, they may become a viable tool for surgery. Imagine having the operational area displayed like in the Iron Man films:

This picture is purely for illustrative purposes and does not show any real solution for displaying the operating area as a hologram, but it is easy to imagine one. The whole camera feed could be laid out as an interactive hologram in front of the surgeon, who simply uses their hands to rotate it and to zoom in and out on certain areas. This would provide a true 3D landscape of the operational area. If this technology were applied to robotic surgery, the surgeon’s hands would be tracked. When they wanted to make an incision or a similar movement, they would do so on the hologram, and the corresponding instruments inside the patient would perform exactly the same movement. This is shown well by this image of a holographic heart for heart surgery:

The hologram would therefore need to be synced with a surgical robot that performs the operations the surgeon carries out on the hologram. The hologram itself could be produced by an advanced endoscope inserted into the body, which scans the operational area and feeds the data to the hologram unit.

Advanced Haptic Feedback

One of the main issues for surgeons performing laparoscopic or robotic surgery is the lack of haptic feedback. The surgeon cannot feel what they are doing as they would if they were performing open surgery. There are ways to combat this, such as vibrations or tools that stiffen when they come into contact with the body. However, the future may hold even more advanced ways of giving haptic feedback.

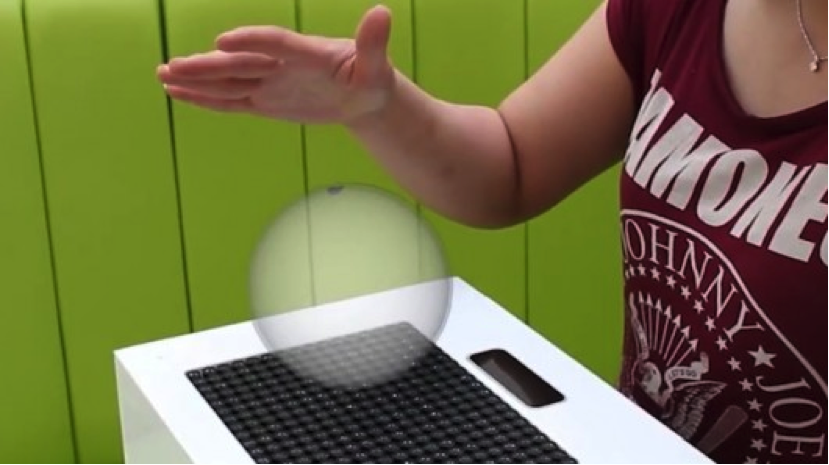

A team of computer scientists at the University of Bristol (full article) have created one such system - that provides haptic feedback by way of ultrasound. This system can create 3D floating shapes that can actually be felt, which has promising implications for surgery in the future:

This system could be combined with a hologram to produce a 3D image of the patient that can be touched without the surgeon ever touching the patient. For example, the surgeon could feel whether there are any bumps in a particular area of the body. They would not have to rely solely on their judgement from what they can see, nor would they have to perform open surgery to feel for this kind of thing. They could simply use a highly accurate scan of the body’s interior to produce true haptic feedback using the ultrasound method.

A Realistic Solution

As impressive as it would be to have a full, touchable hologram of a patient, it may not be viable or convenient for this to be the only method of conducting an operation. Instead, it could be better to keep standard controls such as those already used for laparoscopic surgery. Then, when a surgeon needs to see an area zoomed in, or wants to feel something without having to open the patient up, the hologram and haptic shape combination could appear on a terminal next to them, allowing them to examine the area of concern properly.

A method of control such as this has other promising implications for robotic surgery. Currently, when using a system such as the da Vinci robot, the surgeon is not scrubbed up or sterile. If something goes wrong with the robot and they need to intervene manually to save the patient, they cannot simply leave the robot’s controls and start operating on the patient by hand; they would have to scrub up first.

With this 3D shape haptic control system, the surgeon could stand next to the patient, scrubbed up, while using the haptic shapes to control a robot operating on the patient. That way, if anything goes wrong, the surgeon is ready to dive in and manually operate to fix whatever has gone wrong.

How does this apply to Depth Sensing Endoscopes?

All of the above techniques would rely on the depth-sensing ability of endoscopes in the body. To make a 3D hologram, the holographic software would need to know how far individual points are from the camera to give an accurate 3D representation. The same is true for advanced haptic feedback by ultrasound, which would need to know exactly how an item is positioned in three dimensions to provide accurate haptic feedback to the surgeon. This project, using the Kinect’s depth-sensing and 3D abilities, will hopefully lay the groundwork for revolutionary surgical techniques such as these.
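The step from a depth image to 3D positions is usually done with the pinhole camera model. A pixel at column `u` and row `v` with depth `Z` maps to `X = (u - cx) * Z / fx` and `Y = (v - cy) * Z / fy`, where `fx`, `fy` are the focal lengths in pixels and `(cx, cy)` is the principal point. The sketch below applies this to every valid pixel. The intrinsic values must come from calibrating the actual sensor; the Kinect SDK also provides its own coordinate-mapping facilities, which a real implementation would likely use instead. `Intrinsics` and `depth_to_points` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels, horizontal
    fy: float  # focal length in pixels, vertical
    cx: float  # principal point column
    cy: float  # principal point row


def depth_to_points(depth, k: Intrinsics):
    """Back-project a depth image (list of rows, mm, 0 = no reading) to (X, Y, Z) points in mm."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels where the sensor returned no depth
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy  # image rows grow downwards, so +Y points down
            points.append((x, y, z))
    return points
```

The resulting point cloud is the kind of data a hologram or ultrasound haptic display would need as its input.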

In The Very Long Term

“The Very Long Term” refers to development far in the future, unlikely to be seen within the next several decades.

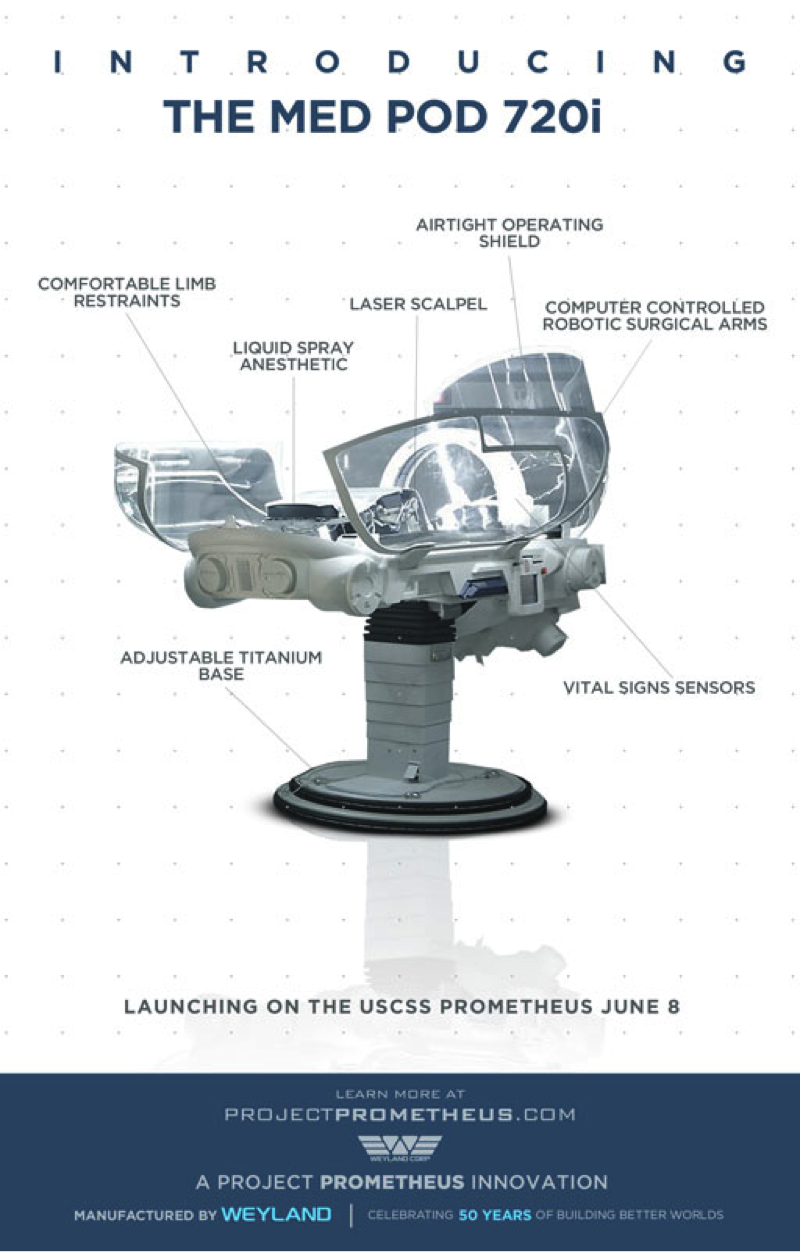

Autonomous Robotic Surgery

In the future, could surgery be completely autonomous? Could a robot perform any surgical operation, completely free of control by a surgeon? Potentially, robotic surgeons such as those in the film Prometheus could be created:

In the film, a robotic surgeon like this has full knowledge of human physiology and can perform surgical operations autonomously.

Perhaps medical pods like this will become the norm in the future, capable of healing any ailment or performing any operation autonomously.